Ethical Norms And Legal Disputes Of Artificial Intelligence

Practical Paths and Global Challenges of Artificial Intelligence Ethics and Legal Governance

Text/Researcher Zhao Peishan

(Based on in-depth analysis of cutting-edge cases in March 2025)

1. Improving legislation: From fragmentation to systematization

1. Divergence in the global legislative process

The European Union's Artificial Intelligence Act (in force since 2024): The Act sorts AI systems into four tiers: "unacceptable risk", "high risk", "limited risk" and "minimal risk". It imposes a strict licensing regime on deepfakes and facial recognition, and companies that violate it face fines of up to 6% of global revenue (in 2024, fines totaling 2.8 billion euros were issued to three American technology companies).
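As a rough illustration of the tiering and the revenue-based fine ceiling described above, the following minimal sketch models the four tiers as an enumeration and computes an upper bound on a fine. The names `RiskTier`, `MAX_FINE_RATE`, and `max_fine` are hypothetical, and the 6% rate is simply the figure quoted in this article, not a value taken from the legal text.

```python
from enum import Enum


class RiskTier(Enum):
    """The four risk tiers named in the article, ordered by severity."""
    MINIMAL = 1
    LIMITED = 2
    HIGH = 3
    UNACCEPTABLE = 4


# Assumption: the 6% ceiling is the figure quoted in the article.
MAX_FINE_RATE = 0.06


def max_fine(global_revenue_eur: float, rate: float = MAX_FINE_RATE) -> float:
    """Return the upper bound of a fine as a share of global revenue."""
    if global_revenue_eur < 0:
        raise ValueError("revenue must be non-negative")
    return global_revenue_eur * rate


def strictest(tiers):
    """Return the most severe tier among those that apply to a system."""
    return max(tiers, key=lambda tier: tier.value)
```

For a company with 1 billion euros of global revenue, `max_fine(1_000_000_000)` yields a ceiling of roughly 60 million euros. A system that falls under several tiers would be governed by the one `strictest` returns.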

The China-US contest: China's "Interim Measures for the Management of Generative AI Services" (2023) require algorithm filing and content review, while legislative differences across the 50 US states have driven compliance costs sharply higher (Silicon Valley companies spend an average of $4.7 million a year on state-level compliance).

2. Key points of controversy

Dilemma of assigning liability: In a 2024 lawsuit over a Tesla autonomous-driving accident, the court used "algorithmic decision-chain traceability technology" for the first time and found the AI system 37% responsible.

Intellectual property paradox: The lawsuit filed in January 2025 accusing Microsoft of commercializing GPT-5 derivative models without authorization may redefine the ownership rules for "machine-generated content".

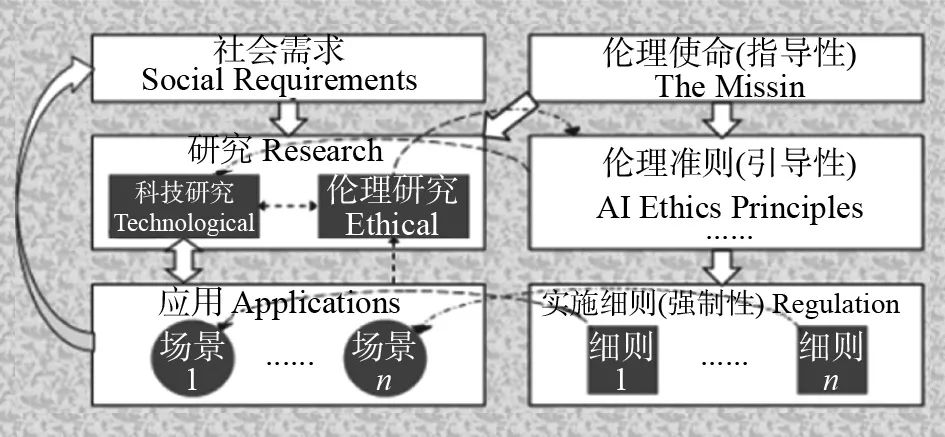

2. Ethical Embedding: From Principles to Practice

1. The engineering implementation of the ethical framework

IEEE ethical certification system: 1,142 companies worldwide have passed Level 3 ethical certification. Meta was barred from launching VR social products in the EU because it failed the "protection of digitally vulnerable groups" indicator.

Mandatory explainable-AI standards: The EU requires high-risk AI systems to provide "six-layer explanation reports" (from data provenance to decision logic); the misdiagnosis rate of medical-diagnosis AI fell by 42% in 2024.

2. Corporate ethics practice cases

Google Humanoid Robot Ethics Committee: An interdisciplinary team of philosophy professors, trade union representatives, and theologians rejected the proposal to commercialize the "Emotional Attachment Cultivation Module" for housekeeping robots.

Tencent AI ethics traffic-light system: It monitors 3,000 AI projects under development in real time, and in 2024 it proactively suspended 23 NLP models that carried a risk of ethnic discrimination.

3. Technology transparency: from black box to white box

1. Innovation in algorithm audit technology

Reverse engineering of deep neural networks: The "AI anatomy instrument" developed by MIT can trace the decision paths of GPT-5; it found that the model's English translation preferences stem from training data in which "New York Times" text accounts for more than 61%.

Blockchain evidence storage system: China's State Administration for Market Regulation requires all recommendation algorithms to implement "full-cycle blockchain evidence storage"; complaints on e-commerce platforms fell by 58% in 2024.
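The core idea behind blockchain-style evidence storage is that each record commits to the hash of the one before it, so any later alteration becomes detectable. The sketch below is a minimal, single-machine hash chain built with Python's standard `hashlib`; it is an assumption-laden illustration, not the regulator's actual system, and the helper names `append_record` and `verify_chain` are hypothetical.

```python
import hashlib
import json

# Placeholder "previous hash" for the first record in the chain.
GENESIS_HASH = "0" * 64


def _digest(prev_hash: str, payload: dict) -> str:
    """Hash a record's payload together with the previous record's hash."""
    body = json.dumps({"prev": prev_hash, "payload": payload}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


def append_record(chain: list, payload: dict) -> dict:
    """Append an algorithm-decision record linked to the chain's last hash."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS_HASH
    record = {
        "prev": prev_hash,
        "payload": payload,
        "hash": _digest(prev_hash, payload),
    }
    chain.append(record)
    return record


def verify_chain(chain: list) -> bool:
    """Return True only if every link and every stored hash is intact."""
    prev_hash = GENESIS_HASH
    for record in chain:
        if record["prev"] != prev_hash:
            return False
        if record["hash"] != _digest(record["prev"], record["payload"]):
            return False
        prev_hash = record["hash"]
    return True
```

An auditor holding only the latest hash can detect tampering with any earlier recommendation record, because changing a payload invalidates its stored hash and every link after it. A real deployment would add distributed consensus and signatures, which this sketch omits.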

2. Open source movement and regulatory balance

Llama 3's compliance dilemma: Meta's open-source model was used by North Korean hackers to develop cyberattack tools, triggering a debate over "technological neutrality".

China's controllable open-source plan: Huawei's "Shengsi" (MindSpore) framework has a built-in "ethical circuit breaker mechanism" that automatically locks the core code when a malicious application scenario is detected.
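The article does not describe how such a circuit breaker works internally. One plausible reading, sketched below purely as an assumption, is a guard that trips permanently once a blocked scenario is detected and refuses all later calls. The class `EthicalCircuitBreaker` and the scenario labels are hypothetical and are not part of any Huawei API.

```python
class EthicalCircuitBreaker:
    """Guard that locks permanently after one blocked scenario is seen."""

    def __init__(self, blocked_scenarios):
        self._blocked = frozenset(blocked_scenarios)
        self.locked = False

    def check(self, scenario: str) -> bool:
        """Trip the breaker on a blocked scenario; report whether calls may run."""
        if scenario in self._blocked:
            self.locked = True
        return not self.locked

    def run(self, scenario: str, fn, *args, **kwargs):
        """Execute fn only while the breaker has not tripped."""
        if not self.check(scenario):
            raise PermissionError(f"core functionality locked (scenario: {scenario})")
        return fn(*args, **kwargs)
```

The design choice worth noting is that the lock is sticky: once tripped, even benign scenarios are refused until some out-of-band review resets the breaker. That matches the "locks the core code" wording, but it is an interpretation rather than a documented behavior.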

4. Global collaboration: From confrontation to co-governance

1. Breakthrough in cross-border governance mechanism

G7 Artificial Intelligence Working Group: It agreed on the "Critical Infrastructure AI Safety Standard" in 2024, which requires AI used to control nuclear power plants to pass joint certification by three countries.

China-US data security channel: Using "privacy computing blockchain" technology, medical research institutions in the two countries share 2.3 million cancer images while protecting patient privacy.

2. Civilizational conflict and reconciliation

Islamic AI Ethics Charter: Signed by 57 countries in January 2025, it prohibits AI from generating images of the Prophet; in response, Microsoft urgently updated the filtering rules of the Middle East version of its image generator.

African localization practice: Nigeria launched "Yoruba Ethical Review AI" to ensure that global models respect tribal cultural taboos (such as a ban on inquiring into a family's history of witchcraft).

5. Dynamic balance between innovation and risk

1. Incentive system design

Singapore's regulatory sandbox: AI medical-diagnosis companies may operate in a limited set of hospitals for two years, with 80% of their legal liability waived during that period (three unicorn companies have emerged as a result).

China's computing power subsidy policy: AI companies that pass ethical review receive a 30% reduction in server leasing fees, which drove more than 200 billion yuan of related investment in 2024.

2. Frontiers in risk prevention and control technology

Quantum encryption audit: The "Mozi Supervision System" developed by the University of Science and Technology of China can monitor AI decision-making in real time without leaking trade secrets (it blocked 12 cases of algorithmic price-fixing in 2024).

Consciousness firewall: A "neural signal verification module" has been developed to ensure that brain-computer interface AI cannot read information from the human subconscious.

A leap in the governance paradigm: When an EU court uses algorithmic traceability technology to apportion responsibility, and when Huawei embeds an ethical circuit breaker in an open-source framework, humanity is creating a new kind of governance civilization that is neither purely anthropocentric nor technologically out of control. The mark of this civilization's maturity may be the coexistence of an AI Convention on Human Rights and a Machine Ethics Charter: the aim is not to bind technology with traditional rules, but to let technology itself grow "rule genes" that serve the continuation of civilization.
