Experts Talk About AIGC Copyright Dispute: Artificial Intelligence Safety Ethics Goes Far Beyond The Scope Of Technology

Source: Global Network [Global Network Technology reporter Li Wenyao] Debate over the "AI training supplementary agreement" that the Tomato Novel platform added to its author contracts is still ongoing, and has even triggered a collective boycott by the platform's authors.

In response, Zhang Ping, a professor at Peking University Law School and director of the AI Security and Governance Center of the Peking University Artificial Intelligence Research Institute, told Global Network Technology reporters at the Security Governance Committee Results Conference of the China Artificial Intelligence Industry Development Alliance (hereinafter "AIIA") that the copyright and patent issues raised by the development of artificial intelligence technology urgently need to be resolved.

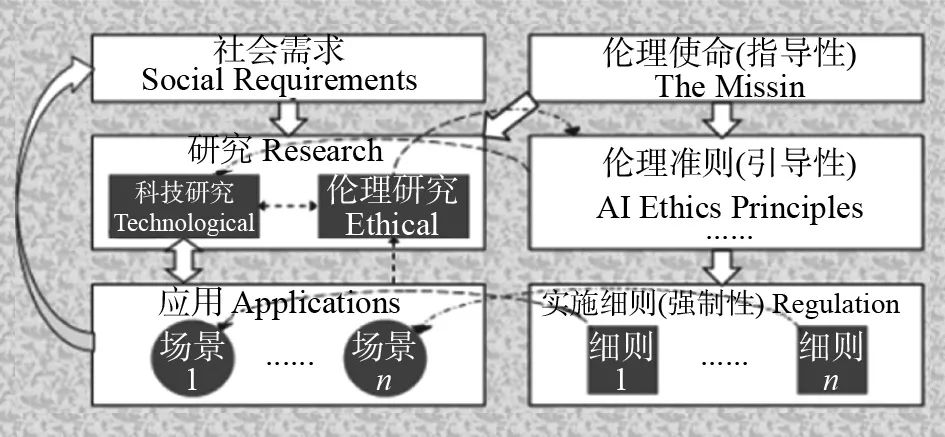

"In the final analysis, artificial intelligence is not a technical issue." Generative artificial intelligence has led a new round of development in the artificial intelligence industry, but along with opportunities it has also raised safety and ethics issues. According to industry experts such as Zhang Ping, artificial intelligence is not only a technical issue but also involves ethics, law, society, culture, and other dimensions. This is an important reason why industry, the state, and other actors are jointly promoting artificial intelligence governance.

Artificial intelligence: from technical issues to ethical and safety issues

Data corpora are an indispensable "raw material" for training AI.

According to the "AI Training Supplementary Agreement" issued by Tomato Novel this time, "Party A (Tomato Novel) can use all or part of the content of the contracted work and related information (such as work title, introduction, outline, chapters, characters, author personal information, cover image, etc.) as data, corpus, text, materials, etc. for annotation, synthetic data/database construction, AI artificial intelligence research and development, machine learning, model training, deep synthesis, algorithm research and development and other new technology research and development/application fields that are currently known or developed in the future."

This means that once a web novel author signs the agreement, the platform can use the author's web novel corpus, related work information, and author information to train large models. At the same time, the platform emphasized: "After signing, content used in training and content produced is protected by the platform's copyright, and plagiarism or piracy is not allowed."

This has caused dissatisfaction among web novel authors. One concern is that content produced by a trained model strongly reflects an author's personal writing style. Without special labeling, it is almost impossible to tell whether a text was written by the author or generated by a machine.

This is similar to the "AI face-swapping and voice cloning" that has been widely discussed recently. In April this year, the Beijing Internet Court ruled on the country's first case of infringement of personality rights by an AI-generated voice. The first-instance judgment found that the defendant had used the plaintiff's voice to develop the AI text-to-speech product at issue without legal authorization, which constituted infringement. The defendant was ordered to apologize in writing and compensate the plaintiff 250,000 yuan for various losses.

Overseas, legal disputes between actors and AI companies are also attracting attention. In May of this year, Scarlett Johansson, who plays the Marvel superhero "Black Widow," prepared to go to court, alleging that her voice had been used illegally and demanding that the AI-synthesized voice be taken off the market.

Shi Lin, director of the AIIA Security Governance Committee Office, told reporters: "As the volume of AIGC content grows, we need to distinguish, to a certain extent, which content is created by humans and which is generated by artificial intelligence."

The other concern is how plagiarism or piracy is determined. Some authors reported that after their novel outlines, web novels, and other material were "fed" to a large AI model for training, the platform judged their original content to be plagiarized and the machine-produced content to be original.

This has also triggered industry concerns about AI content security governance. Yu Xiaohui, Secretary-General of AIIA and President of the China Academy of Information and Communications Technology, told reporters that the technological innovation, application, and development of artificial intelligence are themselves highly uncertain. Unleashing the full capacity of artificial intelligence to benefit humanity and promote economic and social development will require further efforts by all countries.

"Carrying out artificial intelligence safety governance is not only a very important task for our country to promote the development and safety of artificial intelligence, but it is also a major issue that the world and we humans need to deal with."

Artificial intelligence governance becomes a new challenge

How can artificial intelligence security governance be carried out better? Shi Lin said that the AIIA Security Governance Committee hopes to establish a unified content identification platform that makes content traceable to its source, thereby improving organizations' artificial intelligence governance capabilities.

At present, China has promulgated and implemented relevant regulations such as the "Regulations on the Management of Deep Synthesis of Internet Information Services" and the "Interim Measures for the Management of Generative Artificial Intelligence Services". However, there is still room for further refinement of judicial governance in the field of AI.

Bi Maning, chairman of the AIIA Security Governance Committee and former deputy director and researcher of the Information Security Level Protection Assessment Center of the Ministry of Public Security, said that over the past year the rapid development and broad applicability of large models have offered a glimpse of a path toward general artificial intelligence, but the seriousness and urgency of artificial intelligence security issues cannot be ignored. Examined at different levels, the security challenges posed by artificial intelligence have gradually spread from traditional security issues arising from the technology itself, such as data, computing power, and systems, to derivative security issues affecting individuals, organizations, countries, societies, and the human ecosystem.

In Yu Xiaohui's view, artificial intelligence security governance can be approached from the following aspects. First, improve the methodology for identifying artificial intelligence security risks. Artificial intelligence technology is increasingly integrated into every stage of economic and social development, and its security risks continue to expand, so a more agile and accurate risk identification mechanism is needed.

Second, strengthen risk assessment and prevention, focusing on the assessment of artificial intelligence infrastructure, algorithm models, upper-layer applications, and industrial chains, so that risks are detected as early as possible.

Third, strengthen technical governance of artificial intelligence security, step up research on tools for evaluating the toxicity, robustness, fairness, and other properties of algorithm models, and use technology to govern technology.

Fourth, strengthen international cooperation. China needs to work with the rest of the world to jointly study and advance global artificial intelligence governance. A broader consensus is needed to jointly release the potential of artificial intelligence and guard against its risks.

At present, the China Academy of Information and Communications Technology has carried out large model security benchmark testing (AI) work, cooperating with more than 30 organizations from industry and academia to run security tests on domestic and foreign open source and commercial large models, which helps the industry understand the safety level of large models. It has also released large model security reinforcement solutions that provide practical measures for improving security capabilities.

Artificial intelligence safety has become a global issue

On AI governance regulation, many major countries have begun to take action. In November last year, the UK hosted the first Global Artificial Intelligence Security Summit, attended by representatives from the United States, the United Kingdom, the European Union, China, India, and other parties. In May this year, the Council of the European Union gave final approval to the EU Artificial Intelligence Act, the world's first law to comprehensively regulate artificial intelligence.

Bi Maning said that Singapore has launched the "Generative Artificial Intelligence Governance Model Framework", building on its original governance framework and proposing nine dimensions to be considered when assessing artificial intelligence. Japan released the "Guidelines for Artificial Intelligence Operators", which set out a code of conduct for developers, providers, and users. From the "Internet Information Service Algorithm Recommendation Management Regulations" to the "Interim Measures for the Management of Generative Artificial Intelligence Services", China has provided a basis for the precise management of artificial intelligence technology.

AI governance is trending toward framework-based and collaborative approaches, and China is also actively expanding international cooperation. On July 1, the 78th United Nations General Assembly adopted by consensus the China-sponsored resolution "Strengthening International Cooperation in Artificial Intelligence Capacity Building", with more than 140 countries co-sponsoring it. At the regular press conference of the Ministry of Foreign Affairs on July 2, spokesperson Mao Ning said that this fully reflects the broad consensus among countries on strengthening artificial intelligence capacity building and demonstrates their willingness to strengthen capacity building and bridge the intelligence gap through unity and cooperation.

In Zhang Ping's view, artificial intelligence governance can be broken down into three levels. At the macro level, that of the United Nations, the aim is to address the AI security challenges faced by all of humanity, so the emphasis must be on a people-oriented approach and human interests. At the meso level, that of the state, the focus is on national security, network security, and infrastructure security. At the micro level, the aim is to further advance personal information security.

It is understood that the AIIA Security Governance Committee has launched the "Artificial Intelligence Security Guard Plan" to build the AI Guard brand. The China Academy of Information and Communications Technology has conducted bilateral and multilateral discussions and exchanges with international organizations to jointly promote the global development and security governance of artificial intelligence.

Facing the uncertainties, security risks, and challenges brought by artificial intelligence, the international community needs to work together to advance artificial intelligence security governance.

Zeng Yi, a researcher at the Institute of Automation of the Chinese Academy of Sciences, a member of the National New Generation Artificial Intelligence Governance Special Committee, and an expert at the United Nations High-Level Artificial Intelligence Advisory Body, said that artificial intelligence safety and capability do not constrain each other. Safer systems actually have stronger cognitive capabilities. At the same time, artificial intelligence safety work should not merely fix problems but should be constructive, shifting from passive reaction to proactive design. “The development and security of artificial intelligence have become global issues and require joint efforts from the international community,” he said.