[Cyber Security] Be Wary Of Cyber Security Risks Arising From Artificial Intelligence Technology

The rapid development and application of artificial intelligence (AI) has brought humanity into an AI-driven era. AI applications such as face-swapping software, AI voice cloning, and AI image generation are used by very large numbers of people. As the technology develops, it also introduces cybersecurity risks that demand attention. Only by addressing these risks can we better protect users and their data.

1. Data leakage risk

When using AI tools, users may disclose sensitive personal information such as basic personal details, psychological state, and preferences, and may also mention financial, medical, educational, or family information. This information may be used to provide users with better services, but it may also be misused. If malicious users query an AI system or prompt it to generate text, this information may be exposed or reconstructed, resulting in a data leak. Moreover, data that has been used to train an AI model is very difficult to delete completely: the model acquires data during training in a largely black-box process, and traces of that data are hard to remove once the automated pipeline has run. Users should therefore avoid giving AI chatbots sensitive information relating to the state, companies, or individuals.

2. Risk of infringing personal rights and intellectual property rights

"AI face-changing" (face swapping) uses AI and related information technologies to manipulate and modify video and image data, replacing one person's face with another's while keeping the original body movements and other physical features, and producing highly realistic images and videos on that basis. AI face-changing has a strong capacity for identity deconstruction: it breaks an individual's identity down into separate traits (facial image, physical features, even voice and intonation) and merges them with another person's traits, thereby reconstructing a new identity. This deconstruction severs the link between a portrait and the person it depicts, and constitutes an infringement of other people's portrait rights.

Copyright is also at risk. Viral content such as "AI Stefanie Sun" and "AI Painting" is generated from existing knowledge and information, and the sources it draws on may include other people's original works. If such content uses others' works in a way that falls outside fair use or statutory licensing under copyright law, it may infringe their copyright, and those responsible will bear the corresponding legal liability.

3. Risk of misuse of artificial intelligence technology

On May 24, 2023, the Internet Society of China published an article on its official public account warning about a new "AI face-changing" scam. Since 2023, as deep synthesis technology has been open-sourced, deep synthesis products and services have steadily multiplied, and cases of fraud and defamation using fake audio and video produced by "AI face-changing" and "AI voice-changing" are not uncommon. In 2023, the Telecommunications Cybercrime Investigation Bureau of the Public Security Bureau of Baotou City, Inner Mongolia Autonomous Region, announced a telecom fraud case involving AI technology: Mr. Guo, the legal representative of a technology company in Fuzhou, was defrauded of 4.3 million yuan within 10 minutes. The scammer used AI face-swapping and voice-imitation technology to impersonate one of his friends.

Stay alert: always verify requests, especially requests for money, through a phone call, an in-person meeting, or another independent channel. Remind your relatives and friends to raise their own security awareness and their ability to recognize high-tech fraud, so that none of you falls victim.

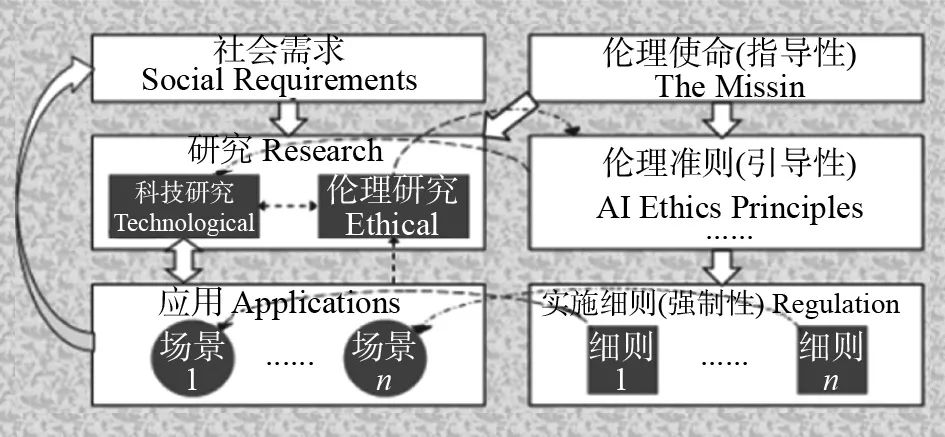

In December 2022, the "Internet Information Services Deep Synthesis Management Regulations" were released, setting clear restrictions on face generation, replacement, and manipulation, as well as on voice synthesis and voice imitation. On April 11, 2023, the Cyberspace Administration of China released the draft "Measures for the Administration of Generative Artificial Intelligence Services" for public comment, requiring that AI-generated content be true and accurate. The draft also explicitly prohibits the illegal acquisition, disclosure, and use of personal information, private data, and trade secrets. As system design becomes more precise and the relevant legislation more complete, AI technology will better serve users, and its abuse will be more effectively curbed.

![[Cyber Security] Be Wary Of Cyber Security Risks Arising From Artificial Intelligence Technology](https://lcs-sfo.k4v.com/assets/public/default_cover.jpg)