Analysis and Countermeasures for the Ethical Supervision of Artificial Intelligence in China

1. Introduction

As a supporting technology for emerging industries, artificial intelligence (AI) develops with a high degree of uncertainty; while the industry emphasizes "technology first", it often overlooks hidden ethical risks. In the context of building new infrastructure, artificial intelligence has connected and integrated industries and fields; this revolutionary change amplifies the industry's advantages, but it also amplifies the threats to social safety that arise from applying the technology. The ethical risks of artificial intelligence have already begun to emerge. For example, Internet platforms' abuse of large amounts of user information and its excessive commercial exploitation have been accompanied by crises of social trust of varying severity; artificial intelligence can relieve humans of routine intellectual work, but its labor-substitution effect may cause structural imbalances in the labor market; and a series of disputes over traffic accidents has highlighted the ethical threshold that AI-based driverless cars must clear before entering the market.

Existing literature has analyzed and compared the role of various ethical systems in artificial intelligence scenarios and demonstrated the effectiveness and feasibility of ethical guidelines for artificial intelligence. One study holds that artificial intelligence risks evolve from being profit-driven to being driven by technological development, gradually diminishing in the range of fields affected and in frequency. Taking autonomous driving as an example, another study proposes unified governance principles that both ensure public safety and encourage scientific and technological innovation. These studies mostly analyze artificial intelligence applications from the perspectives of ethics and the market. By contrast, this article focuses on the dilemmas facing ethical governance, puts forward multi-dimensional suggestions that combine law, policy and ethical supervision, and makes a comprehensive assessment from the perspectives of scientific research, industry and the public, in order to provide a theoretical reference for improving China's ethical governance system for engineering, science and technology.

2. Ethical focus issues in the field of artificial intelligence

(I) Machine rights and human rights

The advancement of artificial intelligence technology has greatly promoted social development, but it has also made social relations increasingly complex. Many ethical and regulatory recommendations in the information field, represented by "Ethically Aligned Design" issued by the Institute of Electrical and Electronics Engineers (IEEE), focus on the relationship between machine rights on the one hand and human values and human rights on the other; the general view is that artificial intelligence systems should be designed and operated in a way that is consistent with human dignity, rights, freedoms and culture, and must not violate human rights.

Regarding machine "rights" and "obligations", the European Parliament's Committee on Legal Affairs (JURI) prepared the "Civil Law Rules on Robotics", which advocated granting the most sophisticated autonomous robots the status of "electronic persons", a legal subject status analogous to that of natural persons; in 2017, the Kingdom of Saudi Arabia granted citizenship to a robot. The international community has discussed granting machines partial legal personality, but proposals that claim rights for machines also call for imposing obligations on them (including legal and moral guarantees).

Regarding the ownership of control, the ethical dilemma of "unmanned driving" has become a typical example of how control is allocated between humans and machines. Overstating the capabilities of "assisted driving systems" and drivers' relinquishing of control have led to a continuing series of autonomous driving accidents. In 2019, a fault in a civil airliner's automatic flight control system (the flight control computer acted on erroneous data) left the pilots unable to regain control of the aircraft and ultimately caused a crash.

Regarding changes in the labor structure, as digitalization upgrades the industrial structure, automated machines have replaced repetitive labor and intelligent equipment can assist in decision-making. Although in the long run building digital infrastructure helps cultivate high-quality talent and create new jobs, it has forced more and more low-skilled workers to re-enter the labor market, and structural employment contradictions are inevitable.

(II) Social trust crisis

First, "virtual reality" is not reality. Fifth-generation mobile communications (5G) applications of virtual reality (VR), augmented reality (AR) and extended reality (XR) in the construction of digital infrastructure are bringing an experiential revolution to fields such as medicine and architectural design, and humans can move seamlessly between the "virtual" and the "real". Classic psychological experiments such as the Stanford Prison Experiment and the Milgram experiment have shown that the situation a person is placed in can strongly shape behavior; VR environments may likewise influence human behavior, and this influence can carry over into the real world. In a virtual society where people interact without knowing one another, moral agents tend to feel socially detached, which makes it easy for them to cross moral bottom lines and engage in transgressive behavior; this may cause the large-scale spread of online violence and malicious information and seriously undermine the system of social integrity.

The second is voice forgery and face swapping. Handwriting, voices, animations and videos forged by artificial intelligence algorithms are extremely realistic, which makes it difficult to verify the authenticity of contracts, certificates and legal texts in daily social activities and poses a serious potential threat to social order at every level. Various forms of AI-enabled fraud, such as forged videos of politicians promoting false political views, "virtual agents" generated online, and the "strongest fake news generators" built on the general-purpose language model GPT-3, have disrupted normal social order and created enormous pressure on public opinion.

The third is the safety hazards of smart home devices. Home smart devices cover a wide range of application scenarios and bring great convenience to residents' lives; however, the huge number of remotely controlled smart home appliances are mostly low-security, vulnerable devices on the Internet of Things. The authentication mechanisms of smart home devices, platform data protection systems and data leakage through network ports may all be weak links in the protection of personal information. Cases of hackers compromising smart cameras, smart speakers and baby monitors have already occurred, placing a heavy burden on ethical security and social security.

(III) Data information security

Data security has become a global problem. Failures of data security not only pose risks to national security but also cause direct or indirect economic losses to enterprises and threaten the information and privacy security of individual users. "Decentralization" has driven large amounts of information to migrate into cyberspace, and the fluidity and replicability of data make effective data supervision very difficult. Highly targeted and destructive external network attacks, ineffective protection of internal enterprise data servers, and non-compliant personal privacy protection measures can all cause irreparable data security losses. Moreover, as cross-industry and integrated information projects are implemented, the infrastructure requiring protection will grow substantially, data security challenges will spread from high-tech industries to many other industries, and cyber attacks will extend from digital space into physical space; the risk that "small-probability events trigger big problems" remains.

Intelligent business recommendation systems based on big data analysis have created a new marketing model with a multiplier effect on marketing efficiency. Faced with the huge temptation of profit, data containing personal privacy and sensitive information is treated simply as a tool for profit and a commodity to be resold at will, and personal privacy rights are violated for lack of reasonable protection. Illegal collection of user information has formed a gray industry chain, and privacy leakage has become an international political issue; the PRISM surveillance scandal, for example, is a typical case of such a "third eye".

(IV) Algorithm defects

First, algorithms are not objective. The design goals, data usage and presentation of results of intelligent algorithms all reflect subjective value choices made by developers and designers. The biases of these people are often embedded in the algorithms, and the algorithms may further amplify or entrench such discriminatory tendencies. In the United States, for example, both federal and state authorities have brought algorithms with substantially discriminatory effects within the scope of legal regulation and have subjected discriminatory algorithms to judicial review.

The second is the lack of algorithmic transparency. Artificial intelligence decision-making based on intelligent algorithms differs from traditional decision-making systems. The public cannot understand the mechanisms and model structures of complex algorithms; given input data, the model completes its computation on its own, and even the algorithm's designer may be unable to explain clearly how the machine arrived at a specific result. This gives rise to the "black box" problem, and the interpretability of conclusions reached by autonomous decision-making systems has become a challenge for the use of algorithms.

Third, the "digital divide" and social differentiation have intensified. In the social media era, under the adverse influence of algorithmic recommendation systems, multi-channel forms of communication do not in practice favor the free flow of information. In the course of dissemination, new media give rise to the "echo chamber effect", "information cocoons" and "cyber-balkanization", which lead people to immerse themselves in the information worlds they prefer and may cause serious ideological divisions and online group polarization.

(V) Rights confirmation and attribution of responsibility

First, data rights confirmation in the era of artificial intelligence. The rights to own, know about, collect, store and use data have become new rights and interests of every citizen. Across the data life cycle of production, collection, transmission, storage, processing and destruction, the entities that process data keep multiplying as the information industry chain is subdivided, and data content is highly coupled along the entire information chain, so the boundaries of data rights and interests are relatively blurred. Giving data subjects autonomy and resolving ownership disputes among different subjects under the principles of openness, fairness and justice have become new ethical issues.

The second is the capability certification of smart devices. As traditional industries, especially basic industries such as transportation, urban construction and medical care, develop from "automation" to "digitalization" and "intelligence", ethical loopholes in intelligent information systems can create huge security risks for entire industries and even for society as a whole. In specific fields such as autonomous driving, legal decision-making and medical diagnosis, for example, urgent questions arise: whether intelligent machines performing these tasks need the equivalent of a driver's license, a lawyer's qualification or a medical license from the relevant industry, and whether they must embody the relevant professional ethics to protect human rights and interests.

The third is the legal subject status of smart devices. Under the current civil law system, smart devices are objects of rights and of legal regulation and do not yet meet the conditions for becoming legal subjects. Assigning responsibility for the services they provide requires moral judgment and the weighing of conflicting interests in specific situations. Practical issues such as the ownership of intellectual property rights in works created by artificial intelligence and the allocation of civil liability for driverless-vehicle accidents all need to be resolved as soon as possible.

3. Current status of ethical supervision in the field of artificial intelligence at home and abroad

(I) Foreign ethical policies and regulatory status

The international security and governance issues raised by the ethics of artificial intelligence are twofold. On the one hand, there are the risks of the technology itself, such as technical defects like algorithmic discrimination, adversarial example attacks, and attacks targeting the technology; on the other hand, there are global crises caused by the interweaving of intelligent technology with existing social problems, such as transnational big-data crime and the use of lethal autonomous weapons. International organizations and many countries have invested substantial research resources to manage the impact of the AI-driven industrial transformation on all aspects of society and to make sound policy choices.

The artificial intelligence industry follows different development paths in different countries and regions, and the issues that receive attention also differ. ① The United States uses ethical initiatives to serve technological development and national security. The "Executive Order on Maintaining American Leadership in Artificial Intelligence" stresses raising public attention to ethical issues and requires that the potential of artificial intelligence be fully realized while protecting civil liberties, privacy and fundamental values; the National Science and Technology Council issued the "National Artificial Intelligence Research and Development Strategic Plan", which requires artificial intelligence systems to be trustworthy and calls for measures that ensure fairness, transparency and accountability in order to design ethical artificial intelligence systems. ② The European Commission has proposed the concept of an "ecosystem of trust"; its High-Level Expert Group on Artificial Intelligence issued the "Ethics Guidelines for Trustworthy AI", which set out four ethical principles, seven key requirements and an assessment list for trustworthy artificial intelligence, with the aim of ensuring the healthy and stable development of the digital economy and taking a leading position in norm-setting. Many European governments are also strengthening cooperation on artificial intelligence research and development and dialogue on ethical policy through bilateral or multilateral agreements, such as the "Declaration of Cooperation on Artificial Intelligence" signed by 25 countries in 2018.

At present, the United States has established multilateral forums jointly with Japan, South Korea, Germany, Poland, the United Kingdom, Italy and other countries, producing a number of soft-law and self-regulatory norms on artificial intelligence. Although these norms are not yet legally binding, many of their principles and rules are likely to become key regulatory priorities once conditions mature, and may well become mandatory legal provisions or even international rules in the field of artificial intelligence. This deserves close attention.

(II) China's ethical policies and regulatory status

In July 2019, the "Plan for the Establishment of the National Science and Technology Ethics Committee" was reviewed and approved, opening a new process of institutionalizing China's science and technology ethics governance. The document states that science and technology ethics are value norms that must be followed in scientific and technological activities, and calls for establishing a National Science and Technology Ethics Committee to build a science and technology ethics governance system that is comprehensive in coverage, clear in orientation, standardized, orderly and well coordinated. In June 2019, the National New Generation Artificial Intelligence Governance Professional Committee issued the "Governance Principles for a New Generation of Artificial Intelligence: Develop Responsible Artificial Intelligence", which proposed a framework and action guide for artificial intelligence governance, highlighted the theme of developing responsible artificial intelligence, and emphasized eight principles: harmony and friendliness, fairness and justice, inclusiveness and sharing, respect for privacy, security and controllability, shared responsibility, open collaboration, and agile governance.

Scientific research institutions and enterprises are also promoting research on the supervision and governance of artificial intelligence ethics. In May 2019, the Beijing Academy of Artificial Intelligence (BAAI), together with Peking University, Tsinghua University, the Institute of Automation and the Institute of Computing Technology of the Chinese Academy of Sciences and other organizations, jointly released the "Beijing AI Principles", which proposed 15 principles to be followed in the research and development, use and governance of artificial intelligence. In July 2019, Tencent Research Institute released the research report "Technology Ethics in the Intelligent Era: Reshaping Trust in the Digital Society", which addresses three aspects of "technology for good": trust in technology, individual well-being, and sustainable practice.

Overall, China has long supported scientific research and industrial development in information technology, actively following emerging technologies and promoting their development. Leveraging its institutional advantages at the national level, it has promptly issued a number of policies and regulations to promote the coordinated development of industry, education and research in digital infrastructure security, network security and artificial intelligence. However, existing policies focus on promoting industries at the macro level, and guidance for specific professional and technical directions remains insufficient. National guiding policies, local resource-support policies and industrial technical standards urgently need to be better integrated. Research on and supervision of engineering ethics in the information field are still in a transitional stage and need to be deepened.

4. Analysis of the ethical supervision needs of artificial intelligence in China

(I) Requirements for building ethical defense in the scientific research stage

Technology research and development is the first line of defense in ethical governance. Governance strategies should be integrated into the R&D stage to avoid inherent defects in algorithms and programs and to lay a solid ethical foundation for demonstrating and promoting technology applications. Research on technology ethics should not be content with evaluating or criticizing existing technologies and their social impacts; it should help shape technology during research and development, embed more ethical values into technological evolution, and engage as early as possible in the process by which technology is built into society. As artificial intelligence becomes deeply integrated with fields such as medical care, automobiles, finance and education, traditional approaches to social ethics no longer match the needs of artificial intelligence development, and artificial intelligence ethics research teams have become diverse and interdisciplinary: researchers in law, sociology, philosophy, ethics and other fields have jointly formed composite ethics research groups, and computer and artificial intelligence experts, life scientists and the public have also joined in reflecting on emerging technologies.

(II) Self-discipline requirements for information enterprises and industries

Establishing self-regulatory organizations for enterprises and industries and issuing industry self-regulatory norms are necessary conditions for the healthy development of related industries. Digital enterprises are an important driving force in translating artificial intelligence research results into social applications, and they should uphold sound values and the basic principles of science and technology ethics in developing, designing and applying technical projects. Some domestic enterprises have committed to staying true to the original intention of artificial intelligence applications and to integrating security, ethics and broad social care into the development process. Some international companies have set up internal artificial intelligence ethics committees that issue their own guidelines for artificial intelligence development, and have established independent global advisory committees to advise on ethical issues related to artificial intelligence and other emerging technologies. However, some of these bodies have drawn public objections because of inappropriate membership. This shows that attention alone is not enough: sustained effort is needed in how ethics bodies are organized and staffed, how their neutrality is guaranteed and how employees accept them, and market autonomy and industry self-regulation should also be taken into account under government guidance.

(III) Multi-dimensional regulatory needs for ethics, policies and legislation

Ethical norms are soft measures that are not backed by state coercion. Public opinion, policy guidance, legal norms and other forces must therefore be used together to guide the safe and orderly development of artificial intelligence technology. It is necessary to dynamically evaluate and respond to technological developments, conduct interdisciplinary surveys, dialogues and public discussions among stakeholders, and on this basis comprehensively assess the impact of the information industry on national development and proactively formulate response strategies. The technological innovation driven by artificial intelligence is widely regarded as the "fourth industrial revolution", and China should closely follow international trends. In this critical technological competition for the future, China must not only maintain the leading position of domestic enterprises and research institutions in research, development and innovation, but also actively participate in international rule-making and exert greater influence, so as to create better opportunities for the healthy development of the industry and for participation in international cooperation and competition.

(IV) The need to establish public trust

Only when technology is used "for good" can public trust in it be built. In recent years, however, the negative effects of privacy leakage, algorithmic discrimination and "information cocoons" associated with artificial intelligence applications have reduced public trust in data use and artificial intelligence technology; the "digital divide" created by the wave of the digital economy has opened new "rights gaps" and "knowledge gaps" between social groups, which will hinder the overall improvement of social capabilities. The public use of data should be properly guided, freedom, equality, integrity and self-discipline should be advocated in the use of technology, and the public should be supported in adapting to the environmental challenges and changes in labor market structure brought by the digital age. At the same time, an open and fair space for discourse should be created to accommodate the steadily increasing public participation in technology, and empirical social science methods from multiple disciplines should be used to understand the public's expectations of technology in depth. Determining "what counts as acceptable technology" and "where the boundaries of technological feasibility lie" from the public's perspective will provide an ethical basis and moral justification for science and technology policy-making.

5. Development paths and countermeasures and suggestions for artificial intelligence ethics supervision

(I) Phase-based development path

Research on engineering ethics in artificial intelligence is closely tied to the conflicts and disorder in social life caused by applications of artificial intelligence technology. As the research scope gradually widens, the corresponding ethical research can be divided into three stages.

The first stage is research on the ethics of the technology itself. Using intelligent technology with caution and considering the social consequences of artificial intelligence from many angles is the most basic requirement of artificial intelligence technology ethics. Ethical problems such as the algorithmic "black box" and algorithmic alienation that stem from objective technical defects need to be corrected and improved at the technical level. Research on the ethics of the technology itself is key to implementing "responsible innovation" at the level of concrete technology development; it is also the most fundamental part of artificial intelligence ethics research and the core link in shaping the progress of artificial intelligence technology.

The second stage is research on cyberspace ethics. "There is no edge anywhere, and everywhere is the center": the Internet has comprehensively changed how information is disseminated and how people interact socially. Imbalances in the application of artificial intelligence technology appear across countries, regions and ethnic groups, and in the digital age, even as individuality is celebrated, ethical relationships are becoming increasingly complex. A new moral relationship and an ethical order grounded in cyberspace need to be established, so that ethics fully covers online society as an extension of real society.

The third stage is an overall study of the social ethics of artificial intelligence. Research on artificial intelligence ethics is no longer limited to technology and networks but should address the ethical issues of the information society in the context of overall economic and social development. Computer science, communication studies, sociology, philosophy and other disciplines should be integrated to form a new ethical system for engineering applications that can address the ethical problems of artificial intelligence, such as the abuse of new technologies, the financial impact of digital currencies, and the use of lethal autonomous weapons.

(II) Countermeasures and suggestions

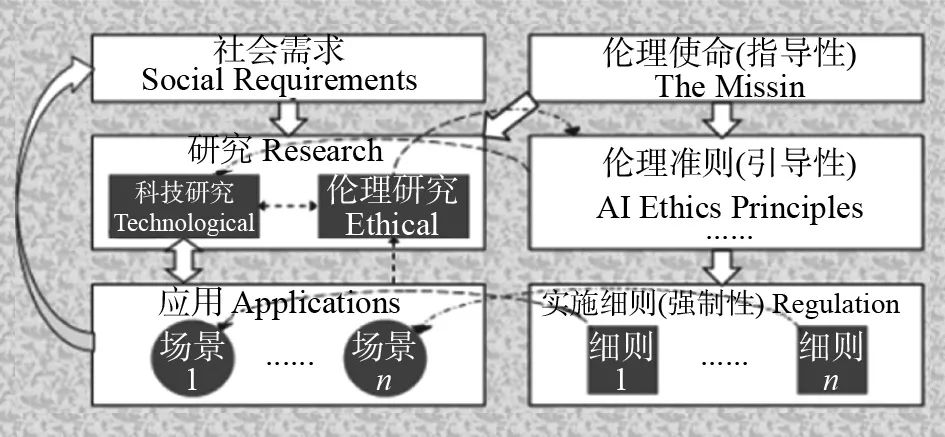

1. Establish a multi-dimensional regulatory framework

Faced with the ethical challenges brought by the artificial intelligence revolution, an ethical supervision system must be supported by timely, accurate and comprehensive information and must achieve effective coordination among ethics, policy and law to readjust the social moral order. Ethics underpins value choices, so consensus on "sustainable development" (meeting the needs of the present without compromising the ability of future generations to meet their own needs) should first be reached at the ethical level. Policy is a means of national governance that combines flexibility with coercion; it can be used to regulate the development of artificial intelligence technology and industries and to strike a reasonable balance between safety and efficiency. Law is the bottom line of ethics and a fundamental means of governance; it should provide sufficient legal guarantees and follow a legislative logic of early guidance, mid-stage constraint and late-stage intervention.

2. Explore the formulation of exemplary policies and regulations

Systems and policies in the field of artificial intelligence should go beyond the framework of technical concepts, focus on ethical issues in real society, and ensure both the feasibility of specific technology applications and their acceptability to the public. Because artificial intelligence applications span a wide range of fields, existing policy tools should be evaluated and applied with caution before new laws and regulations are formulated. Overly strict laws, regulations and management policies may dampen the enthusiasm of startups and small businesses for technological innovation and adoption. For technology application scenarios that are already clear and mature, the internationally recognized "regulatory sandbox" model can serve as a reference; for emerging products or services, as long as risks remain controllable, market access standards and procedures should be simplified and inclusive platforms for experimentation and demonstration should be offered, giving the relevant technologies and industries the conditions to seize first-mover advantage.

3. Provide sufficient space for public discourse

A fair and open space for discourse should be maintained so that industry experts, technicians, investors and ordinary citizens all have the opportunity to express their views, forming a broad social consensus on ethics and avoiding misjudgment of risks while ethical norms take shape. Guided by shared social values, a theoretical, normative and regulatory system for science and technology ethics should be established, and the existing technical and governance systems should be adjusted as needed to match the responsibilities of supervising artificial intelligence risks. Building on existing institutional advantages, interdisciplinary surveys, dialogues and public discussions should be conducted among stakeholders, and dynamic assessments and policy adjustments made according to the impact of artificial intelligence technology.

4. Improve the organizational structure of science and technology ethics supervision

It is recommended that science and technology ethics committees be established at multiple levels, bringing together representatives of technology, industry, law and social groups related to artificial intelligence, so that the interests and demands of all social classes and groups are included as far as possible and reflected in the committees' decision-making procedures. The National Science and Technology Ethics Committee, operating at the national level, should fully grasp the state of development and far-reaching consequences of frontier science and emerging technologies and use its authority to coordinate scientific and technological activities. Industry engineering ethics committees, organized by industry associations or technology enterprises, should support consultation among enterprises on an equal footing internally and demonstrate industry norms and good conduct externally. Academic ethics committees in research institutions should strengthen the ethical awareness of researchers, teachers and students and encourage them to fully understand the values, history, logic and norms of science and technology ethics.

5. Participate in the formulation of international rules for artificial intelligence

At present, laws on artificial intelligence governance have begun to spread among developed countries, promoting the global sharing of artificial intelligence governance rules. Some Chinese Internet companies have joined the Partnership on AI (PAI) and work with industry peers worldwide to advance artificial intelligence practice and improve its impact on humanity. In future research on artificial intelligence governance, China should continue to follow trends in international cooperation and competition, enhance the international competitiveness of its industry through open cooperation, and actively participate in international rule-making to gain greater influence and seek greater development opportunities for its industry.