"Theory, Methods And Tools" To Deal With The Ethical Problems Of Artificial Intelligence

This article discusses the theory, methods, and tools for dealing with the ethical issues of artificial intelligence.

(I) Theory for dealing with the ethical problems of artificial intelligence

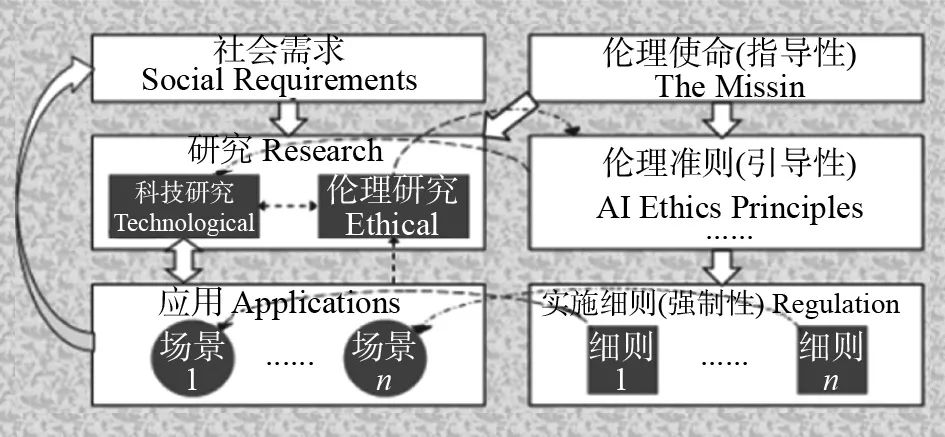

The theory of artificial intelligence ethics involves multiple dimensions, aiming to regulate the development and application of AI technology and ensure that it complies with human values and moral standards. Here are some core theories and their analysis:

1. Basic ethical theory framework

- Utilitarian perspective
- Deontological perspective
- Virtue ethics perspective

2. Specific ethical principles

- Fairness principle
- Transparency and interpretability principle
- Responsibility principle
- Privacy protection principle

3. Application directions of ethical theory

- Ethical embedding in AI system design
- AI ethical governance mechanisms
- AI ethics education and public participation

(II) Methods for dealing with the ethical problems of artificial intelligence

Dealing with the ethical issues of artificial intelligence requires collaboration across multiple dimensions and among multiple stakeholders. Technological optimization, institutional design, cultural cultivation, and public participation together systematically reduce the potential risks that AI technology poses to human society. The following are the specific method frameworks and case descriptions:

1. Technical path: Control ethical risks at the source

1. Algorithm fairness optimization case

The AI Fairness 360 (AIF360) toolkit developed by IBM can detect and mitigate gender and racial biases in algorithms, and has been applied to financial loan approval systems.
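To make "detecting bias" concrete, the following is a minimal sketch of two group fairness metrics that such toolkits commonly report: disparate impact and statistical parity difference. It is written in plain Python and does not use the toolkit's own API. The function names, the sample loan decisions, and the group labels are all hypothetical.

```python
from collections import defaultdict


def selection_rates(decisions, groups):
    """Return the share of positive decisions (1 = approved) for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        positives[group] += decision
    return {g: positives[g] / totals[g] for g in totals}


def disparate_impact(decisions, groups, unprivileged, privileged):
    """Ratio of approval rates; a value of 1.0 means equal rates."""
    rates = selection_rates(decisions, groups)
    return rates[unprivileged] / rates[privileged]


def statistical_parity_difference(decisions, groups, unprivileged, privileged):
    """Difference of approval rates; a value of 0.0 means equal rates."""
    rates = selection_rates(decisions, groups)
    return rates[unprivileged] - rates[privileged]


# Hypothetical loan decisions (1 = approved, 0 = rejected), for illustration only.
decisions = [1, 1, 1, 0, 1, 0, 0, 1, 0, 0]
groups = ["m", "m", "m", "m", "m", "f", "f", "f", "f", "f"]

print(selection_rates(decisions, groups))
print(disparate_impact(decisions, groups, unprivileged="f", privileged="m"))
print(statistical_parity_difference(decisions, groups, unprivileged="f", privileged="m"))
```

A disparate impact well below 1.0 (a commonly cited rule of thumb is 0.8) signals that the unprivileged group is approved much less often, which is the kind of finding that would then trigger a correction step such as rebalancing the training data.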

2. Explainability enhancement case

The EU's Artificial Intelligence Act requires high-risk AI systems (such as medical diagnostic AI) to provide "human-understandable decision-making logic"; systems that cannot do so are barred from the market.

3. Privacy protection technology case

Many Chinese hospitals have jointly trained CT imaging diagnostic models for COVID-19 using federated learning, which protects patients' privacy because the raw data never leaves each hospital.
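The core idea of federated learning can be sketched with federated averaging: each participant trains on its own data, and only model weights are sent to a coordinator and averaged. The example below is a toy illustration under stated assumptions, not the hospitals' actual system. It uses a one-dimensional linear model instead of a CT imaging network, and the hospital datasets, function names, and hyperparameters are hypothetical.

```python
def local_update(weights, data, lr=0.1, epochs=20):
    """Run gradient descent on one client's private data for y = w * x + b."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        grad_w = 0.0
        grad_b = 0.0
        for x, y in data:
            err = (w * x + b) - y
            grad_w += err * x
            grad_b += err
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return (w, b)


def federated_average(client_weights, client_sizes):
    """Average client weights, weighted by the number of local samples."""
    total = sum(client_sizes)
    w = sum(cw[0] * n for cw, n in zip(client_weights, client_sizes)) / total
    b = sum(cw[1] * n for cw, n in zip(client_weights, client_sizes)) / total
    return (w, b)


def train(clients, rounds=100):
    """Coordinator loop: only weights are exchanged, never the raw records."""
    global_weights = (0.0, 0.0)
    sizes = [len(data) for data in clients]
    for _ in range(rounds):
        updates = [local_update(global_weights, data) for data in clients]
        global_weights = federated_average(updates, sizes)
    return global_weights


# Hypothetical private datasets; both follow y = 2x + 1.
hospital_a = [(0.0, 1.0), (1.0, 3.0)]
hospital_b = [(2.0, 5.0), (3.0, 7.0), (4.0, 9.0)]

w, b = train([hospital_a, hospital_b])
print(w, b)
```

Neither hospital sees the other's records, yet the shared model fits data from both. Real deployments usually add further protections, such as secure aggregation, because model weights alone can still leak information.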

2. Institutional path: Building an ethical constraint framework

1. Case studies on legislation and standards formulation

The European Union's Artificial Intelligence Act divides AI systems into four risk levels: "unacceptable risk", "high risk", "limited risk", and "minimal risk", which are subject to prohibition, strict supervision, transparency requirements, and voluntary compliance respectively.
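The four-level scheme maps naturally onto a lookup table. The sketch below encodes only the pairing described above. The identifiers are hypothetical, and the assignment of example systems to tiers is an illustrative assumption, except for medical diagnostic AI, which this article names as high-risk.

```python
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable risk"
    HIGH = "high risk"
    LIMITED = "limited risk"
    MINIMAL = "minimal risk"


# Regulatory treatment per tier, as summarized in the text above.
TREATMENT = {
    RiskTier.UNACCEPTABLE: "prohibition",
    RiskTier.HIGH: "strict supervision",
    RiskTier.LIMITED: "transparency requirements",
    RiskTier.MINIMAL: "voluntary compliance",
}

# Hypothetical inventory of systems; the tier assignments are assumptions.
SYSTEMS = {
    "medical diagnostic AI": RiskTier.HIGH,
    "customer service chatbot": RiskTier.LIMITED,
    "spam filter": RiskTier.MINIMAL,
}


def regulatory_treatment(tier):
    """Return the treatment that applies to a given risk tier."""
    return TREATMENT[tier]


for name, tier in SYSTEMS.items():
    print(f"{name}: {tier.value} -> {regulatory_treatment(tier)}")
```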

2. Ethical Review Mechanism Cases

The UK Office for Artificial Intelligence requires all public sector AI projects to pass an ethical review; projects that fail the review lose their funding.

3. Cases of responsibility traceability system

The California Autonomous Vehicle Responsibility Act stipulates that the responsibility for autonomous driving accidents is shared by the manufacturer, owner or operator according to the degree of fault.

3. Cultural path: Cultivating an ethics-oriented industry ecology

1. Ethical education and training cases

MIT, Stanford, and other universities have made AI ethics a compulsory course and have developed online ethics training platforms for industry practitioners.

2. Case of corporate ethics and culture construction

Google has set up an "Artificial Intelligence Ethics Committee", where employees can anonymously report ethical violations through internal platforms, and the company promises to respond within 48 hours.

3. Public participation and supervision cases

Shenzhen has launched the "Artificial Intelligence Ethics Supervision Platform", where the public can report problems such as facial recognition abuse, algorithm discrimination, etc., and the government regularly announces the processing results.

4. Governance path: Multi-party collaborative response to complex challenges

1. Global collaborative governance cases

The G20 AI Principles set out ethical principles such as "people-oriented, privacy protection, and fairness" to help member states coordinate their policies.

2. Dynamic governance mechanism case

The Monetary Authority of Singapore has set up a "regulatory sandbox" that allows fintech companies to test AI products in the sandbox, and regulators adjust policies based on test results.

3. Synergistic cases of ethics and technological innovation

Researchers have proposed a "reverse curriculum learning" method, in which the difficulty of the task is increased gradually so that the AI is trained to make decisions that conform to human ethics in complex environments.

5. Comparison of typical AI ethical problems and treatment methods

| Problem type | Handling methods | Case |
| --- | --- | --- |
| Algorithmic discrimination | Data debiasing, fairness constraints, third-party audits | Amazon's recruiting AI discriminated against women because male programmers made up a high proportion of its historical data; the bias was then corrected through data balancing |
| Privacy leakage | Federated learning, differential privacy, user authorization mechanisms | Apple uses differential privacy to collect users' input habits while protecting personal privacy |
| Unclear responsibility | Algorithm audits, liability insurance, lifelong liability system | After a Tesla autonomous driving accident, the responsible party was traced through vehicle data records |
| Technology abuse | Legislative prohibition, ethical review, public supervision | The EU bans real-time large-scale facial recognition; some U.S. cities ban police from using predictive policing AI |
| Lack of public trust | Transparency disclosure, ethical certification, public participation | The EU requires AI systems to provide "ethical compliance labels"; some Chinese cities hold public hearings on AI ethics supervision |
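The differential privacy entry in the comparison can be illustrated with randomized response, a classic local differential privacy mechanism. This is a minimal sketch and not Apple's actual implementation. The function names, the value of epsilon, and the simulated user data are hypothetical. Each user reports their true bit only with probability e^ε / (e^ε + 1), so no single report reveals the truth, yet the aggregate rate can still be estimated.

```python
import math
import random


def randomized_response(true_bit, epsilon, rng):
    """Report the true bit with probability e^eps / (e^eps + 1), else flip it."""
    p_truth = math.exp(epsilon) / (math.exp(epsilon) + 1)
    return true_bit if rng.random() < p_truth else 1 - true_bit


def estimate_rate(reports, epsilon):
    """Debias the noisy reports to estimate the true share of 1s."""
    p = math.exp(epsilon) / (math.exp(epsilon) + 1)
    observed = sum(reports) / len(reports)
    # observed = rate * p + (1 - rate) * (1 - p), solved for rate.
    return (observed - (1 - p)) / (2 * p - 1)


# Simulated users: 30% have the sensitive attribute (for example, use a given word).
rng = random.Random(42)
true_bits = [1] * 15000 + [0] * 35000
reports = [randomized_response(bit, 1.0, rng) for bit in true_bits]

print(estimate_rate(reports, 1.0))
```

A smaller epsilon gives each user stronger deniability but makes the aggregate estimate noisier, so the collector needs more reports for the same accuracy.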

6. Implementation suggestions: stratified governance, dynamic adjustment, and multi-party collaboration.

Future direction: AI ethics will shift from "passive response" to "active shaping". Through technical governance (such as ethical constraint programming) and institutional governance (such as the Global AI Ethics Convention), AI will be promoted to become a "responsible technology" and ultimately achieve the essential goal of "technology for good".

(III) Tools for dealing with the ethical issues of artificial intelligence

Tools for dealing with the ethical problems of artificial intelligence fall into several categories: evaluation and testing, privacy protection, fairness optimization, interpretability, ethical review and management, and others. The categories are as follows:

1. Evaluation and testing category
2. Privacy protection category
3. Fairness optimization category
4. Interpretability tools category
5. Ethical review and management category
6. Other tools