Value First: Trusted Artificial Intelligence Ethical Governance

Against the backdrop of the rapid development of artificial intelligence technology, its impact on society and politics is becoming increasingly significant. Global technology companies such as Amazon and Apple have formed partnerships to explore best practices for artificial intelligence, advance research and promote public dialogue. However, relying on private enterprises and non-governmental entities to regulate AI carries potential risks. The EU is actively developing strict ethical standards intended to lay the foundation for AI legislation, emphasizing people-oriented design, ethical compliance, and trust as the core condition for socially and legally acceptable AI. This article discusses these key topics in depth.

1. People-oriented artificial intelligence

People-orientation is the starting point of artificial intelligence ethics. Human-centered AI can be defined as putting humanity at the center of every consideration of artificial intelligence, its development and its use. The people-centered approach differs in essence from the technology-centered one, which puts the system first in the design process and considers human operators or users only in the final stages. In the technology-centered approach, the human role is relatively passive and limited to following the operation of technical equipment. The people-centered approach makes a different choice: it places individuals and their needs and preferences at the center of design, marketing and business strategy, and uses participatory or empathetic mechanisms in the design-thinking process to improve user experience and satisfaction. In this sense, the purpose of artificial intelligence technology is to serve humans better and create greater value. People-orientation should be reflected not only in meeting human needs through new technologies, but also in protecting individual rights and enhancing human welfare. It should therefore play a protective role, preventing the technology industry from violating personal privacy, rights and dignity, or even threatening basic rights and values such as human life, in order to maximize profits.

People-orientation means focusing not only on individuals but also on the well-being of society as a whole and on the environment in which human life takes place. People-centered AI is closely related to fundamental rights and requires a holistic approach that treats human freedom and personal dignity as meaningful social norms, rather than a merely individual-centered view. Therefore, as the labor market is affected and reshaped by the spread of artificial intelligence systems, people-centeredness should be given full weight.

From a regulatory perspective, people-oriented AI can be implemented in diverse forms. In most cases, measures need to be taken at the early design stage, following the "ethics in design" model and respecting basic moral values and rights. The difficulty of this approach is that people-centered implementation depends on the entities that develop the algorithms, whose interests are protected by trade secret or intellectual property law. Even where ethical norms or binding laws govern the proper development and use of AI algorithms, the demands for transparency and interpretability make it genuinely difficult to ensure that legal and regulatory mechanisms can be applied. People-centeredness also involves very strict measures to restrict, or even prohibit, the development and deployment of hazardous technologies in certain sensitive sectors. One example is control over the production and use of autonomous weapons systems, that is, weapons systems that can select and attack targets without human intervention.

The regulatory approach to people-oriented AI includes adopting policies, legislation and investment incentives for deploying AI technologies that benefit humans. Such an approach can increase the automation of dangerous tasks and jobs that put humans at risk. Responsible development of artificial intelligence technology can raise safety standards and reduce the chance of people being injured at work or exposed to harmful substances or dangerous environments.

One legal and moral issue of particular importance for people-orientation is the legal personality of artificial intelligence. In theory, granting artificial intelligence independent legal status requires weighing many factors. One could draw on the special legal status of children, some of whose rights and obligations are exercised by adults, or on the company-law provisions on corporate legal personality, which allow a company to own assets, sign contracts and bear corresponding legal liability. In this sense, we can accept the idea of constructing AI's legal personality on the basis of its obligations rather than its rights. Beyond the responsibility gap, the debate over AI legal personality should also take into account related matters such as the spread of AI-generated creative works, higher levels of innovation and economic growth. Transferring the concept of "limited liability" to AI-based systems and granting them a limited legal personality could create a firewall between AI engineers and designers on the one hand and the systems they build on the other. Even under limited liability, however, we should remember the principle of "piercing the corporate veil". This principle could also apply to "digital persons": in some cases, a claim would be directed not at the "digital person" itself but traced back to the natural or legal person behind it.

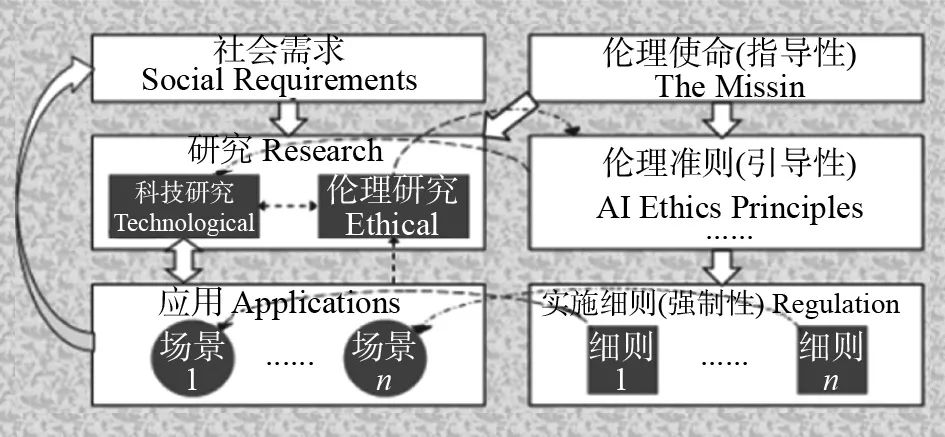

2. Artificial intelligence that conforms to ethical design

Ethics is a key concept in the development of artificial intelligence in Europe, which requires that moral and legal rules be used to shape trustworthy algorithms from the very beginning of design. The concept of "protection by design" is not new; it is a well-established rule in privacy and data protection. The General Data Protection Regulation (GDPR) identifies data protection by design as one of the key components of the data protection regime. This is a proactive and preventive approach: appropriate procedures and data protection standards are applied when data processing methods are designed and selected, and are then maintained throughout processing to ensure full-lifecycle data protection. Applied to artificial intelligence technologies, this approach means that developers, designers and engineers bear primary responsibility for ensuring compliance with the ethical and legal standards set by legislative or regulatory bodies.

Like privacy protection by design, ethical standards should be built into the design and become the default setting of the design process. For artificial intelligence technology, however, protection by design is an arduous ethical task that is difficult to achieve, because it is closely tied to design methods and to the algorithms themselves. For autonomous agents to make decisions that meet people-centered, moral and legal standards in a social environment, we need to build corresponding solutions into their algorithms or give them the ability to perceive their physical surroundings. To truly embed ethical principles in AI design, researchers, developers and enterprises need to change their mindset: rather than simply pursuing ever higher performance, they should pursue trustworthiness in the technology they develop.

From a regulatory perspective, Europe emphasizes that the regulation of ethical AI design must rest either on principles of fundamental rights, consumer protection, product safety and liability, or on non-binding guidelines for trustworthy AI. Consequently, in the absence of a coherent and binding legal framework, ethics-in-design rules imposed on AI developers and deployers may be very difficult to enforce. We should explore establishing binding force through jointly enacted legislation, formulate general ethical standards and mechanisms to ensure compliance, and together ensure the successful application of ethical principles in design.

3. Trust as the condition for socially and legally acceptable artificial intelligence

Trust is a prerequisite for ensuring that AI is people-oriented. It is generally believed that trust in artificial intelligence can be achieved only through fairness, accountability, transparency and regulation. Because trust is this prerequisite, the most important premise of the AI regulatory environment is that the goal of AI development should not be artificial intelligence for its own sake; AI should be a tool that serves human beings, with the ultimate aim of enhancing human well-being. Trust can be achieved by establishing an appropriate legal, ethical and value-based environment around AI, ensuring that AI is designed, developed, deployed and used in a trustworthy way.

Clearly, building trust is a long-term and extremely difficult process. Artificial intelligence technologies that use machine learning and reasoning mechanisms can make decisions without human intervention, which raises a series of trust issues. Such applications will soon become standard in goods and services such as smartphones, autonomous cars, robots and online applications. However, decisions made by algorithms may rest on incomplete data and are therefore less reliable; attackers can manipulate algorithms, and the algorithms themselves may be biased. If new technologies are developed without such reflection, these problems will make users unwilling to accept or use them. For this reason, ethical and regulatory reflection on issues such as transparency, interpretability, bias in use, incomplete data and manipulation will become a driving force for the development of artificial intelligence.

In Europe, trustworthy AI aims to promote sustainable AI innovation by ensuring that AI systems are lawful, ethical and robust in every phase, from design and development to application. According to European practice, artificial intelligence should merit both individual and collective trust. Ensuring that the design, development and application of AI systems are lawful, ethical and robust is key to ensuring orderly competition. The EU guidelines aim to promote responsible AI innovation and seek to make ethics a core pillar of AI development. At the EU level, the ethical framework distinguishes four principles of responsible artificial intelligence: respect for human autonomy, prevention of harm, fairness and explicability. These are typical ethical principles derived from fundamental rights, and fundamental rights must be considered and respected if AI systems are to be trustworthy. While artificial intelligence systems bring great benefits to individuals and society, they also carry risks and may have negative effects that are difficult to predict, identify or measure. Adequate and proportionate procedural and technical measures should therefore be in place to mitigate these risks.

To ensure that artificial intelligence is trustworthy, it is not enough to establish even the most comprehensive legal, ethical and technical framework. Trustworthy AI can be truly achieved only if stakeholders correctly implement, accept and internalize that framework. The main conditions for trustworthy AI, namely lawfulness, ethics and robustness, apply across the entire life cycle of AI and AI systems. The stakeholders involved in implementing trustworthy AI include developers (including researchers and designers), deployers (public and private organizations that use AI technology in their operations and provide products and services to the public), end users (individuals who interact directly or indirectly with AI systems), and society as a whole, including everyone directly or indirectly affected by AI systems. Each group has its own responsibilities, and some groups also have rights. At the outset, developers should comply with the relevant requirements during design and development; as a key link in the stakeholder chain, they are responsible for carrying out ethics in design. Deployers need to ensure that the AI systems they use and the products and services they provide meet the requirements. Finally, end users and society as a whole have the right to be informed of the relevant requirements and to expect the parties concerned to comply with them. The most important requirements include human agency and oversight; technical robustness and safety; privacy and data governance; transparency; diversity, non-discrimination and fairness; societal and environmental well-being; and accountability.

4. Evaluating trustworthy artificial intelligence

Trustworthiness assessment is especially suited to AI systems that interact directly with users, and it is carried out mainly by developers and deployers. The assessment of lawfulness is part of legal compliance review, while the other elements of an AI system's trustworthiness need to be evaluated in its specific operating context. These elements should be evaluated through a governance structure that covers both the operational and the management levels. Assessment and governance form a dual process that is both qualitative and quantitative. The qualitative process is designed to ensure representativeness, with in-depth feedback from organizations of various sizes across different industries. The quantitative process means that all stakeholders can provide feedback through open consultation. The results are then integrated into the assessment list, with the goal of a framework that spans all applications and thus ensures that trustworthy AI can be applied in every field.
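As an illustration of how results might be collected into such an assessment list, here is a minimal, hypothetical Python sketch. It is not the EU's official assessment list: the `AssessmentItem` class, the `open_requirements` and `uncovered` functions, and the sample questions are assumptions made for this example. Each checklist question is tied to one of the key requirements named at the end of Section 3, and the helpers report which requirements still have open questions and which have no questions at all.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional

# Illustrative requirement names, based on the key requirements listed in Section 3.
# This is NOT an official EU assessment list; the names are assumptions for the sketch.
REQUIREMENTS = (
    "human agency and oversight",
    "technical robustness and safety",
    "privacy and data governance",
    "transparency",
    "diversity, non-discrimination and fairness",
    "societal and environmental well-being",
    "accountability",
)


@dataclass
class AssessmentItem:
    """One yes/no question in a hypothetical trustworthiness checklist."""
    requirement: str
    question: str
    satisfied: Optional[bool] = None  # None means "not yet assessed"


def open_requirements(items: List[AssessmentItem]) -> Dict[str, List[str]]:
    """Map each requirement to its questions that are unanswered or answered 'no'."""
    gaps: Dict[str, List[str]] = {}
    for item in items:
        if item.requirement not in REQUIREMENTS:
            raise ValueError(f"unknown requirement: {item.requirement!r}")
        # An unanswered question (None) is treated the same as a failed one (False).
        if item.satisfied is not True:
            gaps.setdefault(item.requirement, []).append(item.question)
    return gaps


def uncovered(items: List[AssessmentItem]) -> List[str]:
    """List requirements for which the checklist contains no questions at all."""
    covered = {item.requirement for item in items}
    return [req for req in REQUIREMENTS if req not in covered]


if __name__ == "__main__":
    checklist = [
        AssessmentItem("human agency and oversight",
                       "Can a human override the system's decisions?", True),
        AssessmentItem("transparency",
                       "Are users told they are interacting with an AI system?", False),
        AssessmentItem("accountability",
                       "Can affected people contest the system's outcomes?"),
    ]
    print(open_requirements(checklist))
    print(uncovered(checklist))
```

In the demo, the transparency and accountability questions are reported as open, and the four requirements without any questions are listed as uncovered. Treating an unanswered question like a "no" is a deliberate choice in this sketch, so that gaps are surfaced rather than assumed away; an organization adapting the idea would define its own questions and scoring.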

The assessment process can be managed through various structures, either by integrating it into existing governance mechanisms or by adopting new processes. The appropriate method should always be chosen according to the entity's internal structure, size and resources. In addition, further measures should be taken to build efficient communication channels within and across industries and among regulators, opinion leaders and other relevant groups, to encourage the sharing of best practices, knowledge, experience and proven models, and to discuss dilemmas or report emerging ethical issues, as a complement to legal supervision and regulatory review.

Unfortunately, there are no universally accepted guidelines for stakeholders on how to ensure trustworthy AI, nor is there guidance on how to ensure the development of ethical and robust artificial intelligence. Although many existing laws already address some of these requirements, their scope and effectiveness remain uncertain. There is also a lack of unified standards for developing and using assessment measures that can ensure the trustworthiness of AI systems.