Discussion On Several Issues Concerning The Ethics Of Medical Artificial Intelligence

The application of artificial intelligence in medicine has brought new ethical challenges. New AI technologies may pose potential risks to patients, arising mainly from algorithmic bias, privacy and security, doctor acceptance, liability, and technology abuse. The medical field also currently lacks ethical norms and review mechanisms for medical AI. Medical AI will inevitably have a significant impact on the entire medical and health system, helping to reshape it and promoting citizens' health democracy. We look forward to industry organizations or governments formulating ethical norms for medical artificial intelligence.

1. Introduction

Artificial intelligence (AI) has been widely explored in medicine and health care, and some applications are already in commercial use. AI will have wide-ranging effects, significantly influencing medical practice and changing both patients' experiences and doctors' daily work. At the same time, advanced AI technologies have brought new ethical challenges. Medical ethics has guided every stage of medical development since ancient times, and AI, as an innovative technology, will inevitably have an important impact on the medical and health system. This article discusses several issues concerning the ethics of medical artificial intelligence.

2. The main ethical issues in current medical artificial intelligence

2.1. Algorithmic bias as a potential risk to patient safety

Risks to patient safety arise mainly when the auxiliary diagnoses, treatment advice, and operations provided by AI mislead doctors into wrong decisions, directly or indirectly causing patients physical harm of varying severity. Technically, this harm stems mainly from algorithmic bias and system vulnerabilities. Algorithmic bias refers to systematic, repeatable errors in an AI system that produce unfair outcomes. It can arise from many factors, including but not limited to the design of the algorithm itself; the way data are coded, collected, selected, or used to train the algorithm; and unintended or unanticipated uses of the system or decisions about how it is applied. Computer-generated bias is almost everywhere. Its causes often lie not in the technical specifications but in wider social processes, through which unconscious and institutional biases find their way into a system's results. Algorithmic bias falls mainly into three categories: "data-driven bias", "artificial bias", and "bias caused by machine self-learning".

"Data-driven bias" refers to bias in the original training data, which leads the algorithm to carry discrimination into the decision-making process. The algorithm does not question the data it receives; it simply searches for and mines the structures and patterns implicit in them. If the input data are biased or skewed from the start, the algorithm will learn that bias, and its output will reproduce the same human bias.

For example, image recognition is prone to bias when machine learning models and learning algorithms are built. Training images are often drawn from specific partner medical institutions, and their labeling reflects the distinct diagnostic characteristics and habits of those institutions.
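
To make the data-driven mechanism concrete, the following minimal Python sketch (an illustration, not any real system's method) shows a learner that does nothing but count historical labels. The groups, the symptom flag, the 0.5 threshold, and all label counts are hypothetical: we assume that patients in group B with the same symptom were historically under-diagnosed, so fewer of them carry a positive label.

```python
from collections import Counter

def fit_diagnosis_rates(records):
    """Estimate P(diagnosed | group, symptom) by counting past labels.

    Like any pattern-mining learner, it never questions the labels it is given.
    """
    positives = Counter()
    totals = Counter()
    for group, has_symptom, diagnosed in records:
        key = (group, has_symptom)
        totals[key] += 1
        positives[key] += diagnosed
    return {key: positives[key] / totals[key] for key in totals}

def recommend_workup(rates, group, has_symptom, threshold=0.5):
    """Recommend further work-up when the learned rate reaches the (hypothetical) threshold."""
    return rates[(group, has_symptom)] >= threshold

# Hypothetical history: group B patients with the same symptom were
# under-diagnosed, so fewer of them carry a positive label.
historical = (
    [("A", True, 1)] * 80 + [("A", True, 0)] * 20
    + [("B", True, 1)] * 30 + [("B", True, 0)] * 70
)

rates = fit_diagnosis_rates(historical)
print(recommend_workup(rates, "A", True))  # True
print(recommend_workup(rates, "B", True))  # False
```

Patients with identical symptoms receive different recommendations only because their historical labels differ; the learner has faithfully reproduced the bias in its inputs.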

In addition, the conceptual framework of an algorithm itself contains the subjective assumptions of the working group, so results grounded in these "subjective" choices become so-called "objective" input data. Although image quality from international imaging manufacturers is converging and becoming more homogeneous, images from different manufacturers still come from different device models and differ in digital imaging quality. At the same time, a model may cause or intensify unfairness toward patient populations classified by age, gender, race, region, or other protected characteristics. These factors introduce bias into a model's scope of application.
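
One common way to detect the population-level unfairness described above is to compare a performance metric across subgroups. The sketch below is a hypothetical audit, not a prescribed standard: it computes sensitivity (the share of truly diseased patients the model flags) for each group and lists the groups that trail the best-served group by more than an assumed tolerance of 5 percentage points. The age bands and counts are invented for illustration.

```python
from collections import defaultdict

def sensitivity_by_group(records):
    """Per-group sensitivity (true-positive rate) from (group, y_true, y_pred) triples."""
    true_pos = defaultdict(int)
    actual_pos = defaultdict(int)
    for group, y_true, y_pred in records:
        if y_true == 1:
            actual_pos[group] += 1
            true_pos[group] += y_pred
    return {g: true_pos[g] / actual_pos[g] for g in actual_pos}

def groups_left_behind(metric_by_group, tolerance=0.05):
    """Groups whose metric trails the best-served group by more than `tolerance`."""
    best = max(metric_by_group.values())
    return sorted(g for g, v in metric_by_group.items() if best - v > tolerance)

# Hypothetical evaluation set: the model misses more disease in older patients.
evaluation = (
    [("under_65", 1, 1)] * 90 + [("under_65", 1, 0)] * 10
    + [("65_plus", 1, 1)] * 70 + [("65_plus", 1, 0)] * 30
    + [("under_65", 0, 0)] * 50 + [("65_plus", 0, 0)] * 50
)

sens = sensitivity_by_group(evaluation)
print(sens)                      # {'under_65': 0.9, '65_plus': 0.7}
print(groups_left_behind(sens))  # ['65_plus']
```

Sensitivity is only one possible choice; an audit could equally compare specificity or calibration, and the acceptable tolerance is a clinical and ethical judgment rather than a technical constant.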

Artificial bias refers to discriminatory algorithms that designers create in order to gain certain benefits or to express their own subjective views. Such bias may be unintentional, arising from the limitations of people and of the technology itself, or it may be deliberate. Some Stanford University scholars argue that the values of the developers of AI used in healthcare applications may not always align with the values of clinicians. For example, there may be a temptation to steer the system toward clinical actions that improve quality indicators rather than actions that actually benefit patients. Such algorithms could also distort the data provided for public assessment when reviewed by potential hospital regulators.

Artificial bias also arises from the characteristics of medicine itself. Treatment plans in particular vary by region and by school of thought, so human bias enters the choice of treatment plan. Although evidence-based medicine has become dominant, empirical medicine, with its subjective character, remains effective in clinical practice. Often there is no single "gold standard" treatment, which is why recommendations are frequently graded, drawing on expert consensus, clinical guidelines, and clinical norms of varying strength. Artificial bias may also stem from engineers at development organizations lacking both ethical knowledge and medical domain expertise.

As algorithms grow more complex, decisions formed through machine learning become harder to explain. The "black box" of code and algorithms inside AI systems makes algorithmic results difficult to control and predict, and can produce a tendency toward unfairness in applications.

2.2. Risk of patient privacy leakage

Since the beginning of the information age, patients' personal health information (PHI) has received attention, and industry norms and regulations govern its management. With the spread of data collection, machine learning, and AI technologies, leaks of personal information occur frequently, and protecting personal privacy and the confidentiality of personal health information is becoming increasingly important. At present there is no clear strategy for the privacy issues, patient self-protection, or acceptability of medical AI applications that involve patients. Many of these leaks involve patient data used to train AI. Leaks of human genomic data are of particular concern because of the potential biohazards and commercial interests involved. According to one report, 59.72% of the people surveyed were concerned about leakage of their personal privacy.
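
One widely used safeguard for training data is pseudonymization: direct identifiers are removed and replaced by a keyed hash, so records from the same patient can still be linked without revealing who the patient is. The sketch below assumes hypothetical field names and uses HMAC-SHA256 from Python's standard library; it illustrates the idea and is not a complete de-identification procedure.

```python
import hashlib
import hmac

# Hypothetical field names for direct identifiers in a training record.
DIRECT_IDENTIFIERS = ("patient_id", "name", "phone")

def pseudonymize(patient_id: str, secret_key: bytes) -> str:
    """Keyed hash (HMAC-SHA256): stable per patient, cannot be recomputed without the key."""
    return hmac.new(secret_key, patient_id.encode("utf-8"), hashlib.sha256).hexdigest()

def prepare_training_record(record: dict, secret_key: bytes) -> dict:
    """Drop direct identifiers and keep a pseudonym so records can still be linked."""
    cleaned = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
    cleaned["pseudo_id"] = pseudonymize(record["patient_id"], secret_key)
    return cleaned

# The key must be stored separately from the dataset (e.g. in a key vault).
key = b"hypothetical-secret-key"
raw = {"patient_id": "P001", "name": "Zhang San", "phone": "000-0000",
       "age": 54, "nodule_mm": 7}
print(sorted(prepare_training_record(raw, key)))  # ['age', 'nodule_mm', 'pseudo_id']
```

Pseudonymized data are not anonymous: remaining attributes such as age and rare findings can still re-identify a patient, and genomic data are inherently identifying, so access controls and ethical review remain necessary.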

2.3. Doctors’ acceptance of medical AI

From a technical perspective, doctors do not understand the limitations or scope of AI algorithms. This opaque "black box" effect can lead either to blind trust or to doubt, and distrust predominates. Because medical AI is still at a relatively early stage of clinical application, it has not yet met the expectations of either doctors or the public. Many medical AI systems are poorly integrated into the clinical workflow, and their solutions do not span the entire care process. Some even add to doctors' existing workload, and the value they bring is not clearly demonstrated. Doctors' various concerns about new medical AI technologies also lead, often unconsciously, to an attitude of rejection and doubt, which has to some extent slowed the adoption and implementation of these technologies. In terms of acceptance, young and middle-aged doctors with advanced education and overseas training experience take a more rational view of AI applications. Young doctors are mostly curious, while some older doctors doubt or reject new technologies. Acceptance also varies by medical scenario: some studies have found that doctors rate AI most highly in medical training and education.

2.4. Medical responsibility and rights issues

Doctors' other main concern about the clinical use of AI systems is who is ultimately responsible for the decisions medical AI provides, and who bears the consequences when it makes a mistake. At present, the final output of medical AI still requires manual verification and review, and the doctor bears responsibility for the patient's diagnosis and treatment outcome. How accountability will work once medical AI is widely introduced remains unclear.

It is also unclear whether AI developers or design teams can be held accountable at the technical level when AI results lead to clinical disputes or to ethical or legal conflicts. A reasonable system of responsibility and compensation needs to be established at the application level of AI, so that medical AI delivers its due value in clinical practice without falling into an awkward dilemma.
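
One way to make the current arrangement auditable, in which the doctor reviews AI output and bears responsibility for it, is to record every AI suggestion together with the model version that produced it and the clinician who signed off. The following Python sketch is a hypothetical data structure, not an established standard: the class, its field names, and the rule that unreviewed output cannot be released are all assumptions for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional

@dataclass
class AIRecommendation:
    """Hypothetical record tying an AI suggestion to the model and the reviewing doctor."""
    patient_pseudo_id: str
    model_version: str          # traces the output back to the developer's system
    ai_suggestion: str
    reviewed_by: Optional[str] = None
    final_decision: Optional[str] = None
    reviewed_at: Optional[datetime] = None

    def sign_off(self, clinician_id: str, final_decision: str) -> None:
        """The doctor records the decision actually taken, which may differ from the AI's."""
        self.reviewed_by = clinician_id
        self.final_decision = final_decision
        self.reviewed_at = datetime.now(timezone.utc)

    @property
    def is_final(self) -> bool:
        return self.reviewed_by is not None and self.final_decision is not None

def release_to_record(rec: AIRecommendation) -> str:
    """Refuse to release any AI output that no clinician has verified."""
    if not rec.is_final:
        raise PermissionError("AI output must be reviewed by a clinician before release")
    return (f"{rec.final_decision} (AI model {rec.model_version}, "
            f"AI suggested: {rec.ai_suggestion}; reviewed by {rec.reviewed_by})")

rec = AIRecommendation("a1b2c3", "nodule-model-1.0", "suspected malignant nodule")
try:
    release_to_record(rec)
except PermissionError as err:
    print(err)
rec.sign_off("dr_001", "follow-up CT in 6 months")
print(release_to_record(rec))
```

Keeping the model version alongside the clinician's decision could help a later dispute distinguish an error in the AI's suggestion from an error in the human review, which is the kind of technical-level traceability the accountability question raises.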

2.5. Overtreatment and other potential risks from the abuse of medical AI

Medical AI already performs above the human average in specific fields, especially image recognition, and is highly efficient. As a tool, however, it also carries a risk of clinical abuse that leads to overtreatment. According to a public report on the website of a hospital in one city, in the three years after the hospital introduced AI for lung nodule image recognition, the number of people screened for lung nodules rose by 73%, direct profits from screening rose by 81%, and total hospital revenue from surgical treatment rose by 100%. The efficiency gains greatly expanded the hospital's business, and the public figures suggest that AI brought it substantial returns. However, according to the region's health statistics yearbook, the distribution of non-communicable diseases in the region has not changed significantly, while the hospital's numbers of pulmonary nodule patients and surgeries rose sharply within a short period. This raises the question of whether AI tools have been used to drive overtreatment, which inevitably causes patients physical and financial harm. In the NLST (National Lung Screening Trial), 96.4% of the positive nodules in the CT screening group were benign, and the false-positive rate in early lung cancer diagnosis and treatment in rural China is also relatively high. Excessive false positives may lead to overdiagnosis, overtreatment, and wasted medical resources, and increase the anxiety of those screened.
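
The NLST figure can be restated as a positive predictive value (PPV): if 96.4% of positive nodules were benign, only 3.6% of positive screens were cancers. The sketch below uses Bayes' rule to show why screening a broader, lower-risk population tends to raise the share of false positives even when the test itself is unchanged. The 90% sensitivity and specificity and the 5% and 1% prevalence values are hypothetical, not figures from the NLST or from the hospital report.

```python
def positive_predictive_value(sensitivity: float, specificity: float, prevalence: float) -> float:
    """Bayes' rule: the share of positive screening results that are true positives."""
    true_pos = sensitivity * prevalence
    false_pos = (1 - specificity) * (1 - prevalence)
    return true_pos / (true_pos + false_pos)

# Hypothetical test: 90% sensitive and 90% specific, applied to two populations.
high_risk = positive_predictive_value(0.90, 0.90, prevalence=0.05)
broad = positive_predictive_value(0.90, 0.90, prevalence=0.01)
print(round(high_risk, 3))  # 0.321
print(round(broad, 3))      # 0.083

# The NLST figure from the text: 96.4% of positives benign means PPV = 3.6%.
nlst_ppv = 1 - 0.964
print(round(1 / nlst_ppv))  # 28, i.e. roughly 28 positive screens per malignant nodule
```

With the same test, moving from 5% to 1% prevalence cuts the PPV from about 32% to about 8%, so roughly 11 of every 12 positive results would be false alarms, each a candidate for further imaging, biopsy, or surgery.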

On July 17, 2019, the US company Neuralink held a press conference in San Francisco announcing a new breakthrough in its brain-computer interface system. The company has developed a "sewing machine-like" robot that can implant ultra-thin threads deep into the brain. The aim is to connect artificial intelligence directly to the human cerebral cortex to enhance human intelligence (i.e., brain-computer interface technology); the original intention is to help people overcome brain diseases. Researchers have conducted experiments that enabled monkeys to control computers with their brains. According to the company's official experimental information, they connected a rat's head to a USB-C port and transmitted its neural signals to a computer by wire; when the software analyzed the rat's brain activity, the signals emitted by its neurons could be heard through speakers. Human trials were planned for the following year. In theory, this technology allows two-way control, meaning that a computer could control the brain and even human behavior, which would undoubtedly pose great risks.

2.6. Lack of medical ethics review

The State Food and Drug Administration has issued guidance and specifications for medical AI and launched a certification process that sets requirements on scope of use, risks, and clinical trials. The hospital ethics committee is mainly responsible for the medical ethics review of clinical trials of drugs and medical devices, organ transplants, medical research involving humans, related technology applications, animal experiments, and medical management activities within its institution. At present, hospitals have no dedicated ethical review mechanism for AI in actual use. Some hospitals borrow the ethical review mechanism used for drug clinical trials to review the introduction of medical AI, but that mechanism does not fully fit.

In practice, some ethics committee members interpret "do no harm" narrowly as not harming the body. Their understanding of risk remains focused solely on life, health, and safety, overlooking the social and psychological risks of improper processing of personal data, leakage of personal information, and invasion of privacy. Many hospitals have introduced medical AI without any ethical review. Some hospitals also try to use patient informed consent forms to shield themselves from potential risks when applying medical AI systems to patients.

3. Ethical principles of medical artificial intelligence

3.1. Medical ethical principles

The basic norms of medical ethics, from the Hippocratic Oath to the modern Declaration of Geneva, rest on the principles of non-maleficence, beneficence, justice, and respect for patients. Any new medical technology must be applied in medical care on the basis of medical ethics, with the norms and order of medical practice kept within ethical bounds. The ultimate goal of new technology is to serve human health and well-being, not to harm people.

3.2. Ethical Principles of Artificial Intelligence

Many industry organizations have formulated normative principles for AI ethics. At present, two consensus documents are especially influential: the "Asilomar AI Principles" and the AI ethics standards initiated by the Institute of Electrical and Electronics Engineers (IEEE), which encourage technologists to prioritize ethical considerations when creating autonomous and intelligent technologies. Many large international companies, such as IBM and Intel, have also formulated product principles and ethical guidelines for AI. Most of these principles address safety, transparency, privacy protection, and prevention of abuse, and their core is to fully safeguard the fundamental interests of humanity. All of them are people-centered.

3.3. Medical artificial intelligence ethical norms

The American Medical Association (AMA) has explicitly set out policy on medical AI, promoting thoughtfully designed, high-quality, clinically validated health care AI that: a. is designed and evaluated according to best practices in user-centered design, particularly for physicians and other members of the health care team; b. is transparent; c. conforms to leading standards for reproducibility; d. identifies and takes steps to address bias and avoids introducing or exacerbating health care disparities, including when testing or deploying new AI tools on vulnerable populations; and e. safeguards the privacy interests of patients and other individuals and preserves the security of their information.

Some Chinese medical associations have established AI branches and explored ethical norms for medical AI. So far, however, only the Ultrasound Physicians Branch of the Chinese Medical Association has issued the "China Ultrasound Medicine Artificial Intelligence Code of Conduct: Beijing Declaration", which sets out 13 detailed rules under three themes: "formulating norms and managing scientifically; integrating medicine and industry to promote translation; and focusing on clinical practice to maximize patient benefit".

At present there is little interdisciplinary ethical research on medical AI, presumably because medical AI is not yet widely used in clinical practice and clinical demand is not yet pressing. Overall, the ethical norms of medical AI remain people-centered: strengthen patient safety, protect patient privacy, and require AI technology to be transparent to prevent abuse. The ultimate goal is to promote recovery from disease, maintain human health, and achieve health democracy.

4. Impact on the medical and health system

Although medical AI cannot replace doctors, it can take over much repetitive, routine diagnostic and treatment work. In the long run, AI will have wide-ranging effects, transforming medical practice, patient experience, and doctors' daily work, and the professional role of medical staff will inevitably change. This will place higher demands on doctors' clinical skills and on humanistic care for patients, and a considerable number of doctors will face serious career challenges.

Medical AI applications are also gradually reaching consumers. Individuals and organizations can more conveniently obtain well-suited decision support across professional fields and lifestyle choices. This brings open, accessible, and democratic medical care, gives the public and doctors more opportunities, and narrows the traditional "information asymmetry" between doctors and patients, so that patients can take a more active part in their own diagnosis and treatment. "Health democracy" is thereby realized, bringing major changes to the entire medical and health system.

5. Strategies and expectations

Creating “laws” or “rules” for medical AI ethics is challenging because ethical boundaries are hard to teach to software systems or machines, and the complexity of medicine and its many influencing factors make the ethical norms of medical AI difficult to define. At its core, the task is to give human morality and ethics a normative form.

As medical AI systems discover, understand, and point out inconsistencies in human decision-making, they may also reveal human favoritism, narrowness, and cognitive biases, which in turn may push people toward a fairer, more equal perspective. In forming shared values, humans may progress further than AI.

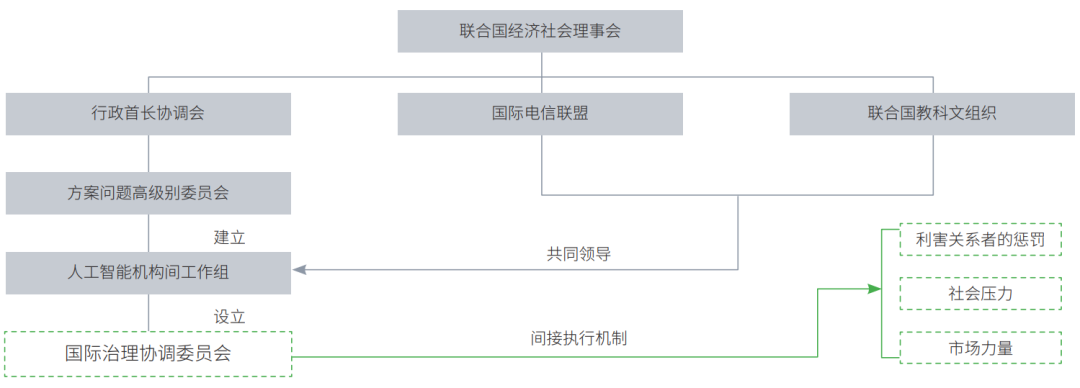

One problem facing those who use technical tools to build ethics into AI is the black-box nature of deep learning and neural networks, which makes it hard to establish transparency and check for bias. A growing number of technology providers are building platforms to help address this; for example, AI tools from IBM, open-source tools, and solutions from AI start-ups can provide greater transparency and check for bias. In implementation, an ethical governance structure must be created: clear roles and structures of responsibility for the AI system should be established, and moral responsibility for AI should be assigned to key people and teams who are given the authority to act. Existing governance structures can be adapted to build accountability into specific teams; for example, an organization's existing ethics officer (such as a chief ethics officer) could also be tasked with studying ethical issues in AI. Internal and external committees responsible for the ethical deployment of AI should be established and kept independent, so that they are not under pressure from those rushing to deploy AI.

In addition, the ethics committees of hospitals that use medical AI need to improve their medical AI ethics systems and evaluate products and solutions comprehensively, ensuring safety and non-harm while also taking into account the value of technological progress.

In July 2019, the Central Committee for Deepening Reform held its ninth meeting and reviewed and approved the "Plan for the Establishment of the National Science and Technology Ethics Committee". The meeting stated that science and technology ethics are value standards that must be followed in scientific and technological activities. The committee is intended to strengthen overall coordination, standardization, and guidance, and to promote a science and technology ethics governance system that is comprehensive, clearly oriented, standardized, orderly, and coordinated. Institutional norms must be improved more quickly, governance mechanisms strengthened, ethical oversight reinforced, relevant laws, regulations, and ethical review rules refined, and all kinds of scientific research activities standardized. The committee will be the country's highest-level body guiding science and technology ethics norms.

Looking ahead, we hope that the Chinese Medical Association and the Chinese Medical Doctor Association will formulate national-level ethical norms for medical AI to provide unified guidance. At the same time, producers and users of medical AI need education and training to understand its ethical challenges, and patients must fully understand how these tools are used in determining their diagnosis and treatment, so that medical AI tools can better serve human health.

This article is excerpted from the November 2019 issue of the journal "Medical and Philosophy"

CHIMA project "Research on the acceptance and behavioral response of medical personnel to medical artificial intelligence (AI)"