Ethical Risks and Legal Guarantees of the “Artificial Intelligence+” Action

Wu Dan / Cartographer | Editor Zhang Guopeng | Xue Yingjun

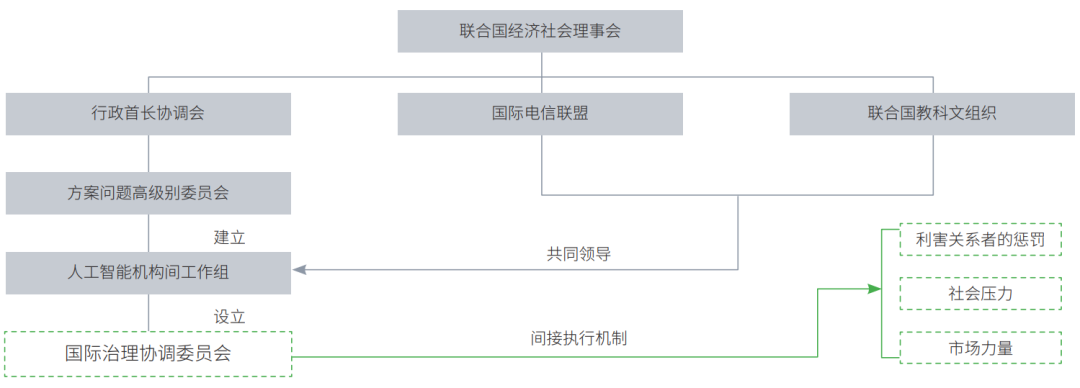

The Central Economic Work Conference held in December 2024 called for “leading the development of new quality productive forces through scientific and technological innovation and building a modern industrial system” and for “carrying out the ‘Artificial Intelligence+’ action to cultivate future industries.” Deeply integrating artificial intelligence with every sector of the economy enables cross-domain technological collaboration and innovation, accelerates technology iteration and the transformation and upgrading of industries, and continuously generates new scenarios, business formats, models, and markets, reshaping how future industries operate, how businesses work, and how people live. This represents the leading edge of the new round of technological revolution and industrial transformation. As the “Artificial Intelligence+” action empowers and restructures traditional industries across the board, it should be accompanied by a sound system for identifying the risks of AI applications and a matching supervision mechanism, by progress on AI legislation, and by efforts to prevent and defuse the safety hazards and risks of the intelligent era, so that the law underpins a positive interaction between technological innovation and economic and social development. This requires strengthening research on the legal, ethical, and social issues raised by artificial intelligence; establishing and improving the laws, regulations, institutions, and ethical norms that safeguard its healthy development; guiding AI in directions that advance human civilization; and providing the institutional support and legal guarantees that the “Artificial Intelligence+” action needs in the new era.

Possible ethical risks facing the “Artificial Intelligence+” action

Artificial intelligence ethics comprises the values and codes of conduct that people should follow when developing AI technology and applying it in society. It takes both tangible forms, such as specific rules of conduct, and intangible forms, such as ideas, emotions, will, and beliefs. The “Artificial Intelligence+” action may broaden the scope of these ethical questions to law, security, healthcare, education, employment, the environment, and other fields, and to the many-sided relationships among people, between people and nature, and between people and technological products. In September 2021, the National New Generation Artificial Intelligence Governance Specialist Committee issued the “Ethical Norms for New Generation Artificial Intelligence,” which set out basic ethical requirements: enhancing human well-being, promoting fairness and justice, protecting privacy and security, ensuring controllability and trustworthiness, strengthening accountability, and improving ethical literacy.

The ethical risks of artificial intelligence are the possibility that, during the development, deployment, and application of AI, its technical characteristics, the way it is used, or flaws in its management lead to violations of ethical principles, damage to social values, infringement of personal rights, or disruption of ecological balance. These risks have two sources. On the one hand, they stem from long-standing human problems such as discrimination and prejudice, which algorithms and big data carry into new forms and channels, making them more complex and harder to detect. For example, big-data-enabled price discrimination against existing customers (known in Chinese as “killing the familiar”) is essentially the abuse of algorithms by operators: they exploit consumers' trust and the information asymmetry between the parties to charge different prices, infringing on consumers' rights and interests and departing from the values of fairness and good faith. Another example is the “information cocoon” problem. Recommendation algorithms confine users' thinking and behavior to particular kinds of information and group people with similar values and preferences into the same digital spaces, continually reinforcing what users already believe. The result is a “frog in the well” dilemma that hinders the renewal of ideas and limits people's overall understanding of the real world. On the other hand, AI ethical risks arise from new problems that technological development itself brings to human society, such as the “digital divide.” The divide exists not only between countries but also between groups within a country; in essence, it is a social justice issue caused by unequal access to digital resources and unequal command of technology in the information age. The digital divide now pervades economic, political, and social life, giving rise to new forms of inequality and social stratification and posing hidden threats to social harmony and stability.

Adhere to the “people-oriented” principle and promote “algorithms for good” in AI applications

Preventing and defusing the ethical risks of artificial intelligence requires proactive and institutional measures that steer the “Artificial Intelligence+” action in a direction beneficial to human society, taking precautions early and continuously improving the safety, reliability, controllability, and fairness of AI. In October 2023, China released the “Global AI Governance Initiative,” which set the direction of AI development as “people-oriented, intelligence for good.” In July 2024, the 78th session of the United Nations General Assembly unanimously adopted a China-sponsored resolution on enhancing international cooperation on AI capacity-building. The resolution further emphasized the principles put forward in China's Global AI Governance Initiative: AI development should be people-centered, pursue intelligence for good, and benefit humanity, with the aim of actively building a community with a shared future for mankind in the field of AI.

“People-oriented” means that technological development must not depart from the direction of human progress and should always aim to enhance the common well-being of all humankind. The purpose of artificial intelligence is to serve human society and benefit humanity, and the core value of human society is to put people first; AI legislation should therefore take the people-oriented principle as its fundamental aim. In developing future industries under the “Artificial Intelligence+” action, we should likewise adhere to this principle: make the common welfare of all humankind the ultimate goal of AI research, development, and social application, safeguard the public interest and people's rights, and build a community with a shared future for mankind as digitalization advances.

“Intelligence for good” sets the value orientation of AI at the legal, ethical, and humanitarian levels: AI should uphold the common values of all humankind, namely peace, development, fairness, justice, democracy, and freedom. Algorithms are the “brain” of artificial intelligence, so the core of “intelligence for good” is “algorithms for good”; the design and optimization of every algorithm reflect human values. As AI develops rapidly, algorithmic discrimination, differential treatment, and information asymmetry may open an intelligence gap that leaves disadvantaged groups facing even greater challenges. The remedy is to uphold social fairness and justice strictly, strengthen data supervision, eliminate bias where it appears, and embed human ethics into the technology throughout the development and application of AI. The “Interim Measures for the Management of Generative Artificial Intelligence Services” (hereinafter the “Interim Measures”), jointly issued by the Cyberspace Administration of China and six other departments, took effect on August 15, 2023. They stress that the entire process of developing, designing, deploying, and using generative AI should respect social morality and ethics and, in particular, adhere to the core socialist values, and that generated content should be positive, healthy, and uplifting, so as to realize the goals of technology for good and intelligence for good.

Build a systematic artificial intelligence legal system

Generative artificial intelligence represents the cutting edge of today's AI development. Unlike traditional AI, which only processes and analyzes input data, generative AI uses big data analysis, deep synthesis, natural language processing, and other technologies to learn and simulate the underlying patterns of things, and generates logically coherent new content in response to user needs. It displays human-like capabilities of perception, reasoning, learning, understanding, and interaction, and it can empower a wide range of industries, giving it broad prospects. China's governance of generative AI currently rests on a legal system in which the Interim Measures serve as the overarching regulation and laws on data and algorithms supply the specific technical rules. Data legislation includes the “Data Security Law of the People's Republic of China,” the “Personal Information Protection Law of the People's Republic of China,” and the “Cybersecurity Law of the People's Republic of China”; rules on algorithms and deep synthesis include the “Provisions on the Administration of Algorithmic Recommendation for Internet Information Services” and the “Provisions on the Administration of Deep Synthesis of Internet-based Information Services.” These laws and regulations have done much to bring order to AI development, but they do not yet form a coherent system, and their contents overlap in places. In particular, given how complex, diverse, and changeable the public risks created by generative AI may be, the current ethical rules and legal system still need further improvement.

Overall, the Interim Measures, the main regulation currently governing the development and application of generative AI in China, cannot fully address the range of issues that AI raises, nor do they dovetail sufficiently with existing laws and regulations. China still needs comprehensive legislation on generative AI that regulates the legal issues of artificial intelligence as a whole. During the national Two Sessions in March 2024, many deputies to the National People's Congress put forward opinions and suggestions on drafting an AI law. The draft Artificial Intelligence Law was also included in the State Council's annual legislative work plans for two consecutive years, 2023 and 2024, as a draft law to be prepared for submission to the NPC Standing Committee for deliberation. This reflects the pressing needs of China's AI sector. As the country seeks to balance development and security and to promote the healthy development of AI technology, legislation has become one of the key means of addressing the risks and challenges brought by the technology's rapid advance.

However, because the technology iterates rapidly and its risks are complex, China has taken a cautious approach to AI legislation, and the draft AI law has not yet entered the formal legislative process of the NPC Standing Committee. Building a systematic AI legal system requires overall planning across several dimensions, including legislative principles, institutional design, international cooperation, and technical support. While AI legislation moves forward, experience can be accumulated and consensus built through local pilots, interim rules, and academic research, laying the foundation for unified national legislation in the future.

In the absence of comprehensive national legislation, some localities in China have already carried out relevant legislative practice. In 2022, Shenzhen and Shanghai each promulgated regulations promoting the AI industry, using local legislation to explore how to balance promoting AI development with governing its security. Through policy innovation and practical exploration, these regulations foster the high-quality development of the AI industry and offer a reference for national legislation. In contrast to the European Union's unified legislative model and the decentralized approach of the United States, China could first enact an “Artificial Intelligence Promotion Law,” advancing regulatory innovation through narrowly targeted “small-incision” measures and refining the detailed rules through practice. At the same time, to address problems in current legislation such as vague concepts and a lack of systematic coherence, the academic community should strengthen research on the legal definition of AI, its ethical norms, and tiered governance mechanisms, build a unified legal framework through systematic thinking, and thereby ensure and promote the healthy, safe, and high-quality development of the “Artificial Intelligence+” action.

(Author's unit: School of Marxism, Xidian University)