Equipping Artificial Intelligence with an Ethical “Navigator”

Editor's note: Artificial intelligence is profoundly changing the world, but it is a "double-edged sword" that brings great opportunities and many challenges alike. In medical care, education, transportation and other fields, it has improved efficiency and quality of life; however, it may also disrupt employment, invade privacy, and give rise to algorithmic bias. How can we wield this "double-edged sword" well, so that artificial intelligence truly becomes a force for human progress?

The choices we make now determine our future direction. In this issue, we invite two experts to discuss how to avoid risks and guide technology toward good.

Artificial intelligence plays an increasingly important role in raising production efficiency, reducing costs, optimizing resource allocation, and improving quality of life. But there is no doubt that technological dividends and ethical risks coexist in its development. It is therefore urgent to put ethical "reins" on artificial intelligence, with its unlimited potential, and to equip it with a dynamically adjusted ethical "navigator", so that its development always stays on the right path under the guidance of human ethical civilization.

Artificial intelligence provides great convenience, but also causes ethical risks

More and more people have experienced the positive impact of artificial intelligence on the economy, society and personal lives.

While enjoying the great convenience it provides, we have to face up to the many ethical risks caused by artificial intelligence:

Shopping apps use heart rate data to infer private details of consumers' health, and e-commerce platforms accurately predict purchasing behavior from users' browsing records, voice interactions, and other signals. Personal data scattered across applications can be reassembled by artificial intelligence into "digital clones": an artificial intelligence system can stitch together massive amounts of data into a life profile that understands you better than you understand yourself.

Artificial intelligence subverts the traditional "behavior-responsibility" link, that is, the ethical principle that whoever makes a mistake is responsible for it. For example, in autonomous driving failures at different levels, it is difficult to determine exactly which technical link failed, and therefore difficult to identify the responsible party.

Algorithmic bias in artificial intelligence undermines fairness and justice. For example, because the operating logic, decision-making basis and influence mechanisms of food delivery platforms' algorithms are opaque, workers in new forms of employment who depend on Internet platforms, such as delivery riders and ride-hailing drivers, are to some extent trapped in a "data maze" and an "invisible cage", and the protection of their rights and interests may face systemic risks. Differentiated, dynamic pricing tailored to "user portraits" has produced the so-called "big data killing of familiarity" phenomenon, in which platforms use data to charge regular customers higher prices; this is the "implicit bias" of algorithmic decision-making.

In addition, in the life sciences, gene editing technology driven by artificial intelligence is breaking through the ethical laws of natural evolution. In the realm of human emotions, the "virtual partners" created by generative artificial intelligence will reshape the ethics of interpersonal relationships. Some young people are more willing to talk to artificial intelligence and grow distant from the real relatives and friends around them. This kind of emotional substitution not only changes human emotional patterns, but also dissolves the biological basis of social and ethical connections.

Use science and technology ethics to prevent the risk of technology getting out of control

The ultimate goal of the development of artificial intelligence is to enhance human capabilities, promote social equity, and improve the quality of life, rather than simply pursuing technological breakthroughs or commercial interests. Science and technology ethics is the "navigator" for the development of artificial intelligence. It can provide clear directional guidance and value constraints for its research and development, application and governance, ensuring that the development of artificial intelligence technology always serves the well-being of human society, preventing artificial intelligence from getting out of control or deviating from the moral track, and realizing the coordinated progress of artificial intelligence technology and human welfare.

Science and technology ethics puts forward the "people-oriented" value and requires that artificial intelligence technology respect human dignity, protect human freedom and rights, and never be alienated into a tool of oppression. If an artificial intelligence algorithm harms the rights of particular groups because of data bias, its design must be revised according to the principles of science and technology ethics to ensure fairness and justice for all.

At the same time, science and technology ethics also serves to prevent the risk of technology getting out of control. Artificial intelligence technology is autonomous, hard to explain, and wide in its influence. Without ethical constraints, it may lead to privacy violations, algorithmic discrimination, and similar harms. Governing the development of artificial intelligence with science and technology ethics means anticipating risks at the design stage by formulating "preventive ethical principles", such as ethical decision-making guidelines for autonomous driving and restrictions on the use of deepfake technology.

Lay the foundation for social trust. Public acceptance of artificial intelligence directly affects whether the technology can be put into practice. If an artificial intelligence system lacks transparency and explainability, or suffers from problems such as data misuse, it will inevitably cause a crisis of public trust in artificial intelligence. Science and technology ethics emphasizes principles such as transparency and accountability, requiring artificial intelligence systems to disclose their decision-making logic and clarifying the boundaries of developers' ethical and legal responsibilities, in order to win public trust.

Balance the interests and powers of multiple parties. Artificial intelligence technology may intensify the monopolization of data resources. By promoting values such as fairness, inclusion, and democratic participation, science and technology ethics prevents technology from becoming a tool that benefits only a small number of groups or organizations. When technologies such as facial recognition are used, public safety must be balanced against personal privacy, and the public's privacy rights protected as far as possible.

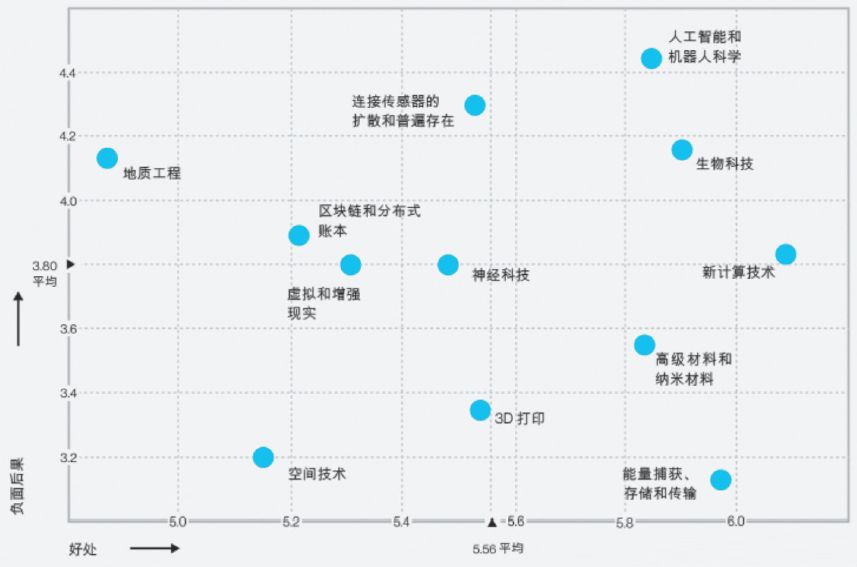

Cope with future uncertainty. Cutting-edge technologies such as artificial general intelligence (AGI) and brain-computer interfaces have the potential to completely change the way human society exists and the way people live. Through forward-looking discussion of difficult questions such as "should artificial intelligence have the status of a moral subject?", science and technology ethics provides an effective basis for future legislation and policy-making. If artificial intelligence develops to the point of autonomous consciousness, it will be necessary to study in advance how humans should define its rights and responsibilities, to put forward ethical governance proposals that address these problems, and to mark out areas that are off-limits to the development of artificial intelligence technology.

Building a new civilization form of "human-machine symbiosis"

In 1942, science fiction writer Isaac Asimov proposed the Three Laws of Robotics: first, a robot may not injure a human being or, through inaction, allow a human being to come to harm; second, a robot must obey the orders given to it by human beings, except where such orders would conflict with the First Law; third, a robot must protect its own existence, as long as such protection does not conflict with the First or Second Law. These laws provide a basic framework for the ethics of artificial intelligence. However, their focus on the individual, their static rules, and their vague notions such as "harm to humans" and "obeying orders" cannot cope with the complexity of contemporary artificial intelligence. They cannot address macro-level ethical risks, in which artificial intelligence systems harm group interests such as fair employment and privacy rights through algorithmic discrimination and data abuse. They cannot effectively constrain the behavior of dynamically evolving artificial intelligence, and they provide no clear mechanism for dividing rights and responsibilities. There is an urgent need to calibrate the development of artificial intelligence along multiple paths and to build an ethical safety net that keeps pace with its iterative upgrades.

First, clarify the ethical bottom line for the development and application of artificial intelligence. One, life safety comes first: any artificial intelligence system must give the highest priority to protecting human life, especially in high-risk scenarios such as autonomous driving and medical decision-making, with algorithm priorities preset to avoid direct or indirect harm to humans. Two, responsibility must be traceable: developers and operators of artificial intelligence need to bear legal responsibility for system behavior, including in cases of algorithm design flaws, data abuse, or out-of-control decision-making; a full-chain mechanism for tracing responsibility from research and development through application should be established, and the boundaries of obligations between technology providers and users clarified. Three, data privacy and fairness must be protected: artificial intelligence systems must follow the principle of data minimization, implement strict data protection with advanced encryption and anonymization, and prohibit the unauthorized collection and use of personal information.
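
As a rough illustration of the data minimization and anonymization requirements described above, the following Python sketch keeps only an allow-listed set of fields and replaces the direct identifier with a keyed hash. The field names, the allow-list, and the demo key are all hypothetical and chosen only for this example. Strictly speaking, a keyed hash is pseudonymization rather than full anonymization, so this shows one possible building block, not a complete privacy solution.

```python
import hashlib
import hmac

# Hypothetical allow-list: the only fields this illustrative service needs.
ALLOWED_FIELDS = {"user_id", "age_band", "region"}


def minimize(record: dict) -> dict:
    """Data minimization: drop every field that is not on the allow-list."""
    return {k: v for k, v in record.items() if k in ALLOWED_FIELDS}


def pseudonymize(record: dict, secret_key: bytes) -> dict:
    """Replace the direct identifier with an HMAC-SHA256 keyed hash."""
    out = dict(record)
    digest = hmac.new(secret_key, str(record["user_id"]).encode("utf-8"), hashlib.sha256)
    out["user_id"] = digest.hexdigest()
    return out


# Made-up input record, including data the service has no need to keep.
raw = {
    "user_id": "u-1001",
    "age_band": "30-39",
    "region": "east",
    "heart_rate": 72,
    "browsing_history": ["shoes", "vitamins"],
}

safe = pseudonymize(minimize(raw), secret_key=b"demo-key-not-for-production")
print(sorted(safe))
```

In this sketch the heart rate and browsing history never leave the collection step, which is the point of minimizing before any further processing. In a real system the key would have to be stored and rotated securely.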

Second, promote the preventive ethical design of artificial intelligence systems. At the technical level, a new generation of "ethics-embedded artificial intelligence" has entered the experimental stage. Systems of this type have a built-in "ethical conflict resolution protocol". For example, when a medical artificial intelligence finds that a treatment plan allocates resources unfairly, the system automatically triggers an ethical warning. This kind of preventive ethical design embeds the moral requirements of "ethics first" and "intelligence for good" into the technical architecture.
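
The article does not describe how such an ethical warning would be implemented. One hedged way to picture it is a check that compares how often each patient group receives a scarce resource and raises a warning when the gap is too large. The Python sketch below makes that assumption. The group labels, the function names, and the 0.8 threshold (loosely borrowed from the "four-fifths" rule of thumb used in employment-selection auditing) are illustrative choices, not part of any system named in the text.

```python
from collections import defaultdict


def allocation_rates(decisions):
    """decisions: iterable of (group, allocated) pairs. Returns the share allocated per group."""
    totals = defaultdict(int)
    granted = defaultdict(int)
    for group, allocated in decisions:
        totals[group] += 1
        if allocated:
            granted[group] += 1
    return {g: granted[g] / totals[g] for g in totals}


def ethical_warning(decisions, min_ratio=0.8):
    """Return a warning dict if the worst-served group falls below min_ratio
    of the best-served group's allocation rate; otherwise return None."""
    rates = allocation_rates(decisions)
    if not rates:
        return None  # nothing to check
    highest = max(rates.values())
    if highest == 0:
        return None  # no group received the resource, so there is no disparity
    lowest_group = min(rates, key=rates.get)
    ratio = rates[lowest_group] / highest
    if ratio < min_ratio:
        return {"group": lowest_group, "ratio": ratio, "rates": rates}
    return None


# Made-up treatment plan: group A receives the resource 8 times out of 10, group B 4 out of 10.
skewed_plan = [("A", True)] * 8 + [("A", False)] * 2 + [("B", True)] * 4 + [("B", False)] * 6
balanced_plan = [("A", True)] * 5 + [("A", False)] * 5 + [("B", True)] * 5 + [("B", False)] * 5

print(ethical_warning(skewed_plan))
print(ethical_warning(balanced_plan))
```

A single ratio like this is only one of many possible fairness measures. A real clinical system would also need to account for medical need, so a warning of this kind is a prompt for human review rather than a verdict.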

Third, relevant ethical guidelines and regulations need to be formulated. Work in this area has achieved significant results. Ethical codes and regulations such as the General Data Protection Regulation, the Artificial Intelligence Code of Ethics, and the Artificial Intelligence Act adopted by the European Union guide how enterprises and government departments develop and apply artificial intelligence, and directly ban certain high-risk applications, such as social credit scoring. The "New Generation Artificial Intelligence Ethical Code" promulgated by China integrates ethics into the entire life cycle of artificial intelligence and provides ethical guidance for natural persons, legal persons and other institutions engaged in artificial intelligence-related activities. Of course, to deal with the problems that arise in applying artificial intelligence technology, it is imperative to build a globally coordinated system of ethical governance for artificial intelligence. In recent years, China has issued the Global Artificial Intelligence Governance Initiative and other initiatives to promote global ethical governance of artificial intelligence jointly with other countries.

It must be noted that installing an ethical "navigator" for artificial intelligence is not a stumbling block to the innovation and progress of artificial intelligence technology. We should remain tolerant of technological innovation in artificial intelligence while also holding the bottom line of human values. Only when humans uphold ethical values amid the flood of digital technology, and when artificial intelligence understands and adheres to what should and should not be done from the perspective of human ethics, can we ensure that the artificial intelligence revolution truly serves mankind. When smarter artificial intelligence lives alongside humans in a friendly manner, a new form of civilization, "human-machine symbiosis", will have arrived.

(The author, Chen Weihong, is chief expert of the Institute of Marxism at Shanghai University of International Business and Economics, a professor at the School of Marxism, and a researcher at the Shanghai University Think Tank International Economic and Trade Governance Research Center)