Ethical Challenges Of Artificial Intelligence

Author: Lan Jiang

Wiener, the father of cybernetics, discussed automation and intelligent machines in his famous book "The Human Use of Human Beings" and came to an alarming conclusion: "The trend of these machines is to replace humans at all levels, rather than just replacing human energy and power with machine energy and power. Obviously, this new substitution will have a profound impact on our lives." Wiener's prophecy may not have come true yet, but it has become the subject of many literary, film and television works. Works such as "Blade Runner", "Immortal" and "Westworld" depict artificial intelligence rebelling against and surpassing humans. The cover of The New Yorker for October 23, 2017 showed a robot giving alms to a begging human... People are increasingly inclined to discuss when artificial intelligence will develop a consciousness of its own, surpass humans and reduce them to its slaves.

Wiener's radical rhetoric and the general public's present-day worries about artificial intelligence are both exaggerated. Nevertheless, the rapid development of artificial intelligence does pose a series of challenges for the future. The biggest problem in its development is not any technical bottleneck but the relationship between artificial intelligence and humans, which has given rise to the ethics of artificial intelligence and the ethics of transhumanism. Strictly speaking, this kind of ethics departs considerably from the concerns of traditional ethics. The ethics of artificial intelligence no longer addresses relationships between people, nor our relationship with what is given in nature (such as animals or ecosystems), but the relationship between humans and a product they have invented themselves. According to the futurist Kurzweil in "The Singularity Is Near", once this special product passes a certain singularity, it may completely overwhelm human beings. In that case, can an ethics framed for relations between people still govern the relationship between humans and an entity beyond the singularity?

In fact, the ethical relationship between artificial intelligence and humans cannot be studied apart from artificial intelligence technology itself. Strictly speaking, the field has followed two entirely different paths from the very beginning.

First, there is the path of artificial intelligence in the strict sense. In 1956, a special seminar was held at Dartmouth College. John McCarthy, its organizer, gave it a special name: the Artificial Intelligence (AI for short) Summer Symposium. This was the first time the name "artificial intelligence" was used in an academic setting, and participants in the Dartmouth meeting such as McCarthy and Minsky adopted the term directly as the name of a new research direction. What McCarthy and Minsky had in mind was how to turn the human senses, including vision, hearing and touch, and even the thinking of the brain, into information in the sense defined by Shannon, the "father of information theory", and then to control and apply it. At this stage, artificial intelligence was still largely a simulation of human behavior. Its theoretical basis goes back to the German philosopher Leibniz's idea that human sensations can be converted into quantified information. In other words, human sensory and cognitive experience can be regarded as a complex system of formal symbols; given sufficiently powerful means of collecting and analyzing data, human feeling and thinking could in principle be fully simulated. This is why Minsky confidently declared: "The human brain is just a computer made of meat." Not only did McCarthy and Minsky successfully simulate visual and auditory experience; later, Terry Sejnowski and Geoffrey Hinton, drawing on the latest advances in cognitive science and brain science, also invented a "" program that simulates a network similar to human "neurons", so that the network can learn like the human brain and carry out simple thinking.

At this stage, however, so-called artificial intelligence was mostly about simulating human feeling and thinking, with the aim of producing a thinking machine that resembles humans more closely. The famous Turing test likewise takes the ability to think like a human as its criterion. Its principle is simple: the testers and the tested party are separated from each other, and through conversation alone the testers must judge whether the tested party is a human or a machine. If 30% of the testers cannot tell whether the other party is a human or a machine, the Turing test is considered passed. The purpose of the Turing test, then, is still to check how human-like an artificial intelligence is. The question, however, is whether machine thinking needs human thinking as an intermediary when it makes its own judgments. In other words, does the machine first need to take a detour and dress its thinking up as human thinking before it can judge? For artificial intelligence, the answer is clearly no: if it is used to solve practical problems, it has no need to pass through human thinking in order to reason and solve them. Human thinking has its own limitations and shortcomings, and forcibly simulating the way the human brain thinks is not a good choice for the development of artificial intelligence.
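The pass criterion described above can be sketched in a few lines of Python. This is only a minimal illustration under stated assumptions: the 30% threshold is the figure quoted in this article, while the function names, the verdict labels ("machine", "human", "unsure") and the sample verdicts are hypothetical and invented for the example.

```python
from typing import Sequence

# Threshold as quoted in the article: the test counts as passed when
# 30% of the testers cannot tell the machine from a human.
PASS_THRESHOLD = 0.30


def fooled_fraction(verdicts: Sequence[str]) -> float:
    """Return the share of testers who failed to identify the machine.

    Each verdict is one tester's call about a machine respondent:
    "machine" (correct), "human" (fooled) or "unsure" (could not decide).
    These labels are hypothetical and chosen for this sketch.
    """
    if not verdicts:
        raise ValueError("need at least one verdict")
    fooled = sum(1 for verdict in verdicts if verdict != "machine")
    return fooled / len(verdicts)


def passes_turing_test(verdicts: Sequence[str],
                       threshold: float = PASS_THRESHOLD) -> bool:
    """Apply the article's criterion to a list of tester verdicts."""
    return fooled_fraction(verdicts) >= threshold


# Made-up verdicts from ten testers: six were right, four were not.
sample = ["machine"] * 6 + ["human"] * 3 + ["unsure"]
print(fooled_fraction(sample))      # 0.4
print(passes_turing_test(sample))   # True
```

Note that the check measures only how often testers are misled, which is exactly the "human-likeness" that the paragraph above goes on to question.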

The development of artificial intelligence has therefore taken another direction, namely intelligence augmentation (IA). If simulating the real human brain and its way of thinking is no longer important, can artificial intelligence develop a purely machine-based style of learning and thinking? If a machine can think, can it think in the machine's own way? This gives rise to the concept of machine learning, a method of learning developed by the machine itself. By collecting massive amounts of information and data, the machine can derive its own abstract concepts from them. For example, after browsing tens of thousands of cat pictures, the machine can extract the concept of a cat from the image data. At that point, it is hard to say whether the concept of a cat abstracted by the machine differs from the concept of a cat understood by humans. Most importantly, once the machine has refined its own concepts and ideas, they become the basis of the machine's own way of thinking and form a network of thought patterns that does not depend on humans. In the case of AlphaGo, which defeated Lee Sedol, we have already seen the power of this machine-style thinking. It stripped Go, as usually understood, of its force and caused players accustomed to human thinking to collapse almost at once. A machine that no longer thinks like a human may bring even greater panic to humans.

After all, artificial intelligence that simulates the human brain and human thinking remains controllable to some degree, but the same cannot simply be said of artificial intelligence based on machine thinking: according to players who have faced it, even after reviewing their games many times they still cannot understand how an artificial intelligence like AlphaGo will make its next move.
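The idea of a machine abstracting a concept from many examples can be illustrated with a deliberately simple stand-in. The sketch below uses a nearest-centroid classifier, which is not the technique behind AlphaGo or modern image recognition (those rely on deep neural networks); it only shows how a "concept" can be derived from data rather than written down by a person. The two-number features and all names are hypothetical and made up for the example.

```python
from math import dist
from statistics import fmean


def learn_concept(examples):
    """Average labelled feature vectors into a single prototype (centroid)."""
    return [fmean(column) for column in zip(*examples)]


def classify(features, concepts):
    """Return the label whose learned prototype lies closest to the features."""
    return min(concepts, key=lambda label: dist(features, concepts[label]))


# Hypothetical features per picture: (ear pointiness, snout length).
# The numbers are invented; a real system would learn from raw pixels.
cats = [[0.9, 0.2], [0.8, 0.3], [1.0, 0.1]]
dogs = [[0.3, 0.8], [0.4, 0.9], [0.2, 0.7]]

concepts = {"cat": learn_concept(cats), "dog": learn_concept(dogs)}
print(classify([0.85, 0.25], concepts))
```

Even in this toy case, the learned "concept of a cat" is just a point in feature space. Nothing guarantees that it matches what a human means by "cat", which is the gap the passage above describes.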

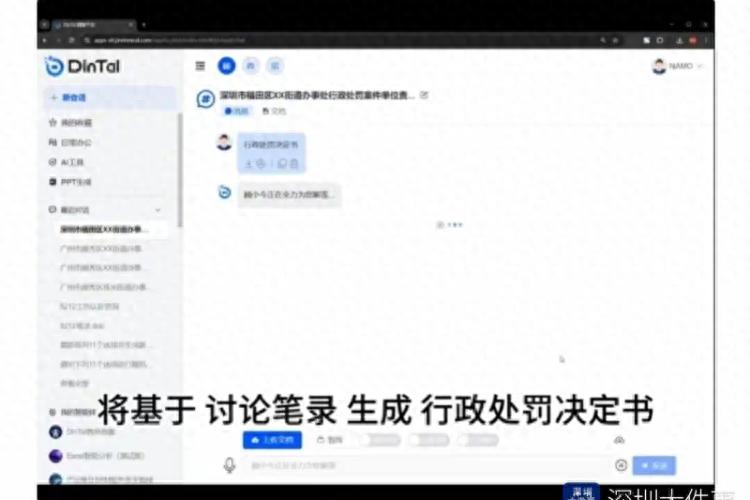

However, it seems too early to say that intelligence augmentation will replace humans. At least Engelbart, the engineer who first proposed "intelligence augmentation", did not think so. For Engelbart, the direction taken by McCarthy and Minsky aims to make machines homogeneous with humans, and establishing such a homogeneous mode of thinking puts machines in competition with humans, just like the robots in "Westworld", who always regard themselves as human and seek to stand on an equal footing with humans. The purpose of intelligence augmentation is completely different. It is more concerned with the complementarity between humans and intelligent machines, and with how intelligent machines can make up for the deficiencies of human thinking. Autonomous driving is a typical intelligence augmentation technology. It requires not only installing a driving program in the car but, more importantly, collecting large amounts of map and terrain data. The program must also be able to recognize unexpected moving objects in the image data, such as a person suddenly crossing the road. Autonomous driving can replace drivers who are prone to fatigue and distraction, freeing humans from the arduous task of driving. Similarly, the robots that sort parcels and perform automated assembly in car factories also belong to intelligence augmentation. They are not concerned with being more like humans, but with solving problems in their own way.

Since intelligence augmentation thus involves two planes, the plane of human thinking and the plane of the machine, an interface technology is needed between them. Interface technology makes it possible for people to communicate with intelligent machines. By the time Felsenstein, a leading pioneer of interface technology, arrived at Berkeley, ten years had passed since Engelbart had discussed intelligence augmentation there. Felsenstein used an image from Jewish mythology, the golem made of earth, to describe the relationship between humans and intelligent machines under today's interface technology. Rather than saying that today's artificial intelligence aims to surpass and replace humans as the singularity approaches, it is more accurate to say that it is leaning more and more toward a human-centered golem. Guided by this idea, the goal of today's artificial intelligence is not to produce an independent consciousness but to develop interface technology for communicating with humans. In this sense, we can rethink the ethics of the relationship between artificial intelligence and humans from the perspective of Felsenstein's golem. That is to say, the relationship between humans and intelligent machines is neither one of pure use, because artificial intelligence is no longer merely a machine or a piece of software, nor one in which machines replace humans and become their masters, but a symbiotic partnership. When Apple developed Siri, its intelligent software for communicating with humans, Steve Jobs suggested that Siri was the simplest and most elegant model of cooperation between humans and machines.

In the future, as some countries gradually become aging societies, both front-line production and the care of elderly people who can no longer move about may come to depend on solving such problems at the interface between humans and intelligent machines. This is a new ethics between humans and artificial intelligence, and it will constitute a form of transhumanism. Perhaps what we see in this scene is not necessarily an ethical disaster, but a new hope.

"Guangming Daily" (page 15, April 1, 2019)