Wang Guoyu of Fudan University: Artificial Intelligence Ethics: Looking for the Feasibility Boundary of Artificial Intelligence

Focusing on the hot topic of the relationship between AI, morality, and humans, DeepTech recently interviewed Professor Wang Guoyu, director of the Fudan University Biomedical Ethics Research Center and Fudan University Applied Ethics Research Center.

DeepTech: What do you think of the idea that "once artificial intelligence robots develop self-awareness, robots will rule humans"? Why do you think people are more worried and fearful about this issue now than before?

Wang Guoyu: The first half of this statement is a hypothesis: it assumes that artificial intelligence robots will develop self-awareness. The second half is speculation: it speculates that "robots will rule humans." This is a classic "if ... then" speculative statement. It reminds me of the plot of "Rossum's Universal Robots" by the Czech writer Karel Čapek. The familiar word "robot" comes from this play. The word is a combination of a Czech word and a Polish word. The former means "labor, hard labor" and the latter means "worker". Together they can be rendered as "enslaved workers, slaves". At the beginning of the play the robots have no consciousness or feelings, and they replace all human labor. Later, an engineer in the robot factory quietly "injects" a "soul" into the robots, giving them the ability to feel pain, and they then gradually gain self-awareness. Unfortunately, the robots' first thought after awakening is to resist and attack. This may be the earliest work of science fiction about robots dominating humans. More and more similar works followed. Such narratives naturally lead people to fear and worry about artificial intelligence.

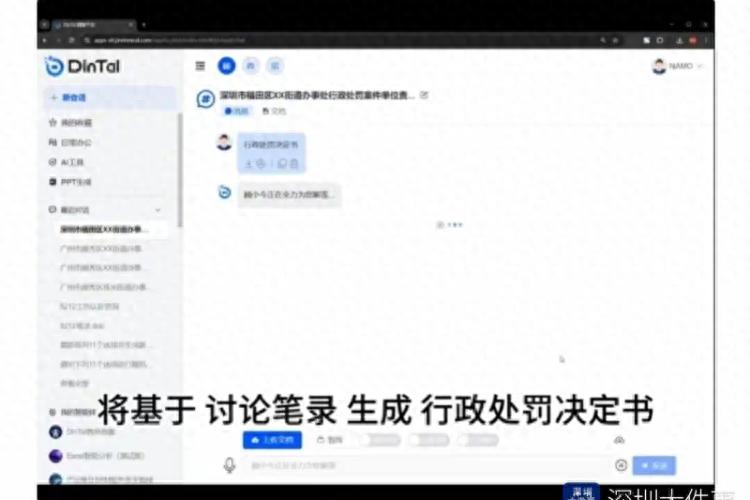

The ethics of technology stems from people's fears and concerns about technology. That is why, more than half a century ago, Isaac Asimov proposed using ethical rules to guide the behavior of intelligent machines: a robot must not harm a human or, through inaction, allow a human to come to harm; a robot must obey human orders unless they conflict with the First Law; a robot must protect itself as long as doing so does not conflict with the First or Second Law. He later added a Zeroth Law (1985): a robot must not harm humanity or, through inaction, allow humanity to come to harm.

Figure | The Three Laws of Robotics were first proposed by Isaac Asimov in his 1942 story "Runaround" and became both the code of conduct for the robots and a thread running through the plots of many of his novels. Robots are required to abide by these laws, and violating them causes a robot irreversible psychological damage. Later, robots in science fiction by other authors also obeyed the three laws. Scholars have also established an emerging discipline, "machine ethics", on the basis of the Three Laws, which aims to study the relationship between humans and machines (Source: )

So, is it possible for artificial intelligence robots to gain self-awareness? Advocates of strong artificial intelligence believe that it is certain and only a matter of time. Kurzweil predicted that by 2045 humans and machines will be deeply integrated, and artificial intelligence will surpass humans and reach the "singularity." I do not know whether artificial intelligence will definitely develop self-awareness, whether humans will be willing to see or allow it, or when it might happen. I cannot predict these things. But once artificial intelligence acquires self-awareness, will it "rise from slave to general"? I think that is theoretically possible if we do not prepare early. Of course, I am not envisioning a nation ruled by robots, as science fiction writers do. Rather, I am looking at it from the perspective of the loss of human autonomy and freedom.

In fact, we can already feel today that while artificial intelligence enhances people's abilities and freedom of action, it also makes people more and more dependent on it. This dependence can also be understood as a loss of independence. As Hegel pointed out when discussing the relationship between master and slave in "The Phenomenology of Spirit", master and slave define each other: on the one hand, the master is a master only because the slave exists; on the other hand, once he attains the status of ruler, what the master gains is not an independent consciousness but a dependent one. He does not thereby secure his true self; instead, he enables the slave to surpass him. To give a familiar example, the smart navigation we use today lets us avoid roads in poor condition, and we follow the route it suggests to reach our destination. In this sense it is an enabling technology. We trust it because we believe the information comes from a navigation system that tracks everything on the road in real time. But as soon as we worry that ignoring the information will mean a detour, and believe that accepting it will get us to our destination quickly, we begin to depend on that information. At that point, the "control" that information exercises over people becomes apparent.

As for the second question, why is the public's anxiety and fear about this issue so much greater than before? I think the first reason is the rapid development of artificial intelligence in recent years, which has made people acutely aware of the changes that artificial intelligence systems have brought to the world we live in. Especially after AlphaGo defeated the human Go player Lee Sedol in March 2016, people were amazed at the power of artificial intelligence. On the one hand they cheered; on the other hand they were frightened and worried about how it would change the world and our lives. In addition, celebrities such as Hawking, Bill Gates, and Musk have warned of the threat of artificial intelligence, which also intensifies people's panic. For example, Hawking believed that "once robots reach the critical stage of self-evolution, we cannot predict whether their goals will still be the same as humans." Some media outlets also tend to exaggerate advances in artificial intelligence and like to use sensational headlines, such as asking whether artificial intelligence is a devil or an angel. Such narratives also heighten concerns about the development of artificial intelligence.

DeepTech: The face-swapping technology that became popular some time ago, whether the foreign versions or the domestic app ZAO, set off a craze. Do you think current AI applications can spread so widely in such a short time partly because the technology is developing too fast and the barriers to entry have been lowered? Technology without constraints is dangerous, so how can we unleash technological innovation as much as possible within moderate constraints? How should conflicts between ethics and science and technology policy be reconciled?

Wang Guoyu: I think the rapid spread of this type of face-swapping technology is partly due to the relatively low threshold of the technology itself, and partly due to people's curiosity. I have seen something similar in WeChat Moments and WeChat groups. It was not a face-swapping video but a face-swapping app: you only need to upload a photo or take one on the spot, and you get pictures of yourself in different eras and with different looks. I see that many people are happy to play with it, purely out of curiosity. But most people may not know that when they do so, their facial image is saved as data on the back end. We generally do not know how this data will be processed or what it will be used for, and we are rarely told clearly. If this data is used maliciously, it can do great harm to ourselves, to others, and to society. We know that many banks and even door entry systems now use faces to verify people's identities. Face data is like a person's fingerprints, an important marker of identity. Once it falls into the hands of criminals, the consequences could be disastrous.

Figure | ZAO poster. ZAO is an application that uses AI technology to perform face-swapping. Users can upload a full-face photo to create emoticons or to swap their face onto characters in movies and TV series (Source: Apple Store)

The problem now is that legislation and policy still lag somewhat behind. Technological innovation does not mean that you can do whatever you want. The purpose of technological innovation is a better life. If an innovation does not bring a better life, but instead destroys the order a good life requires, harms others, and harms the public interest, then it is not something we should accept and embrace. Therefore, we should insist on responsible innovation. Responsible innovation attends not only to the economic benefits of innovation, but also to its social benefits and to the social responsibility of enterprises. Correspondingly, the relevant government departments should promote research in this area, promulgate laws and regulations as soon as possible, manage similar technologies by category, provide reasonable guidance for technological innovation, and resolutely stop behavior that violates the public interest or infringes on the rights of others.

DeepTech: To make "technology for good" a reality, what efforts do you think different levels of society need to make?

Wang Guoyu: According to Aristotle, every art aims at some kind of good. But one of the hallmarks of modern technology is its uncertainty. This uncertainty runs through the entire process of modern science and technology, from its purposes to its methods and results. In the face of this uncertainty, responsibility lies not only with scientific and technological workers and enterprises, but also with the competent government departments. Humanities scholars, the media, and the public also bear a share of the responsibility.

Scientists and engineers engaged in scientific and technological research and development are the creators and producers of technology. They know best the characteristics and potential risks of the technology itself, and they have a responsibility to explain its potential risks and uncertainties transparently and to inform the public of them promptly. Those who manage and set policy for scientific and technological R&D, including politicians and entrepreneurs, have a responsibility to work with scientific and technological workers, humanities scholars, and others to carry out risk prediction and ethical assessment of scientific and technological development. Because scientific and technological activities reach directly into political activities, decisions about science and technology are also political decisions. Science and technology policy is a catalyst for the development of science and technology. Appropriate policies can steer the trajectory of that development and can issue warnings when necessary. Scholars in the humanities and social sciences should also pay attention to the social impact of frontier science and technology, providing support and a basis for the government to formulate science and technology policies that are acceptable to the public. Mainstream media and mass media should gain a deep understanding of the characteristics of science and technology itself, rather than simply chasing news. Finally, the development of science and technology cannot be separated from public support. The public's active participation in the social governance of science and technology is the most important force in ensuring that modern science and technology do good.

DeepTech: The title of your talk at this year's CNCC conference is "Artificial Intelligence Ethics: From Possibility Speculation to Feasibility Exploration". Can you share why you chose this topic? Apart from the ethics sessions, which other forums or speakers at this conference interest you most?