Pessimism? Optimism? The Ethics of Artificial Intelligence Remain Controversial

For thousands of years, questions of human ethics and morality, from theological works to Kant's purely philosophical debates, have resisted expression in simple formulas. Ask on the standard version of the tram

Is artificial intelligence a powerful tool for the benefit of mankind, or the ultimate evil that will destroy the world?

The English word "robot" was first coined by the Czech writer Karel Čapek in his science fiction works. Its original meaning is "serf-like forced laborer". On August 21, 2017, 116 founders and CEOs of artificial intelligence companies wrote an open letter to the United Nations, calling for a ban on the development and use of "killer robots" (that is, autonomous weapons such as drones, unmanned combat vehicles, sentry robots, and unmanned warships). They believe that these robots could become weapons of terror, which dictators and terrorists might use against innocent people or in other undesirable ways.

However, on October 15, 2017, the British government pointed out in its report "Growing the Artificial Intelligence Industry in the UK" that artificial intelligence has great potential to improve education and healthcare and to raise productivity. The report put forward 18 recommendations for the development of artificial intelligence, covering data, technology, research, and policy openness and investment, with the aim of making the United Kingdom a world leader in the field. The UK is not the only country that attaches great importance to the competition in artificial intelligence; countries around the world are enthusiastically embracing the technology. Every technological invention has been surrounded by controversy, yet history tells us that technological progress has indeed made human life freer.

How should we evaluate the good and evil of artificial intelligence? What attitude should we take towards the development of artificial intelligence?

The academic debate on the moral judgment of artificial intelligence

The moral judgment of artificial intelligence requires distinguishing two issues: first, the moral evaluation of artificial intelligence itself; second, the evaluation of the good and evil in the consequences of its research, development, and application. The key problem remains unresolved precisely because these two evaluations have not been kept apart. Resolving the second issue still depends on humans themselves. Resolving the first, however, cannot rest on the existing ethical and moral framework; it requires critical reflection on the traditional ethics of science and technology.

At present, opinions on the good and evil of artificial intelligence vary widely, and there is no consensus.

Broadly speaking, there are three main positions in the academic community:

First, the optimistic position.

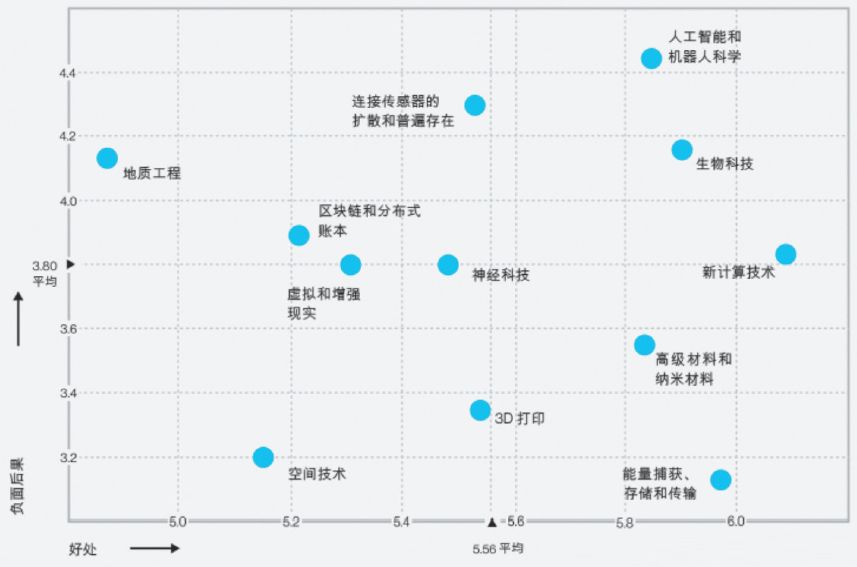

Experts and scholars who hold this position believe that artificial intelligence is merely a means and a tool, and that it is in itself neither "good nor evil" nor "good nor bad". What matters is the humans who use it. They are optimistic about the future of artificial intelligence and hold that, on the whole, its research, development, and widespread application will do more good than harm to human development and can produce huge economic and social benefits.

The optimistic position is held mostly by people in the artificial intelligence community who are involved in its research, development, and application, by those acting out of self-interest, or by scientists who blindly worship science and technology. Its shortcoming is that it views the positive aspects of artificial intelligence in a one-sided and isolated manner: the ability to produce huge economic and social benefits and to reshape almost every industry, including finance, medical care, education, and transportation, thereby transforming the human way of life as a whole. Its proponents intentionally or unintentionally ignore or conceal the negative effects of artificial intelligence. For example, the advent of killer robots would pose a security threat to mankind, and human overreliance on artificial intelligence may lead to the degradation of human civilization.

Second, the neutral position.

Experts and scholars who hold this position acknowledge that artificial intelligence itself is capable of "doing evil", and that its research, development, and application pose potential threats to human beings and may bring serious consequences. Nevertheless, for various reasons, they still strongly support the development of artificial intelligence technology.

Third, the pessimistic position.

Experts and scholars who hold this position believe that artificial intelligence is no longer a mere tool, but has a sense of life and the ability to learn. Morally, it can "do evil" in two ways: first, the great power of artificial intelligence may lead "human beings to do evil"; second, artificial intelligence itself has the capacity to "do evil", and humans cannot cope with that "evil", which will eventually lead to nothingness and destruction. They therefore worry that artificial intelligence may get out of control or harm humanity in the future. In early 2015, Stephen Hawking, Bill Gates, and Elon Musk, among others, signed an open letter calling for controls on the development of artificial intelligence. Musk believes that artificial intelligence is "summoning the demon" and poses a greater threat to mankind than nuclear weapons. Hawking asserted plainly that the full development of artificial intelligence may lead to the destruction of mankind. Those who hold the pessimistic position believe that technology is not the path to human liberation. It "is not liberating from nature by controlling nature, but destroys nature and humans themselves. The process of constantly murdering living creatures will eventually lead to total destruction."

Summary:

Technological ethics is commonly understood as a set of norms and restrictions that constrain people's technological behavior in order to avoid or reduce the "negative" effects of technological abuse. This view simply passes moral judgment on the "right and wrong, good and evil" of scientific and technological behavior from an established ethical and moral standpoint, and issues normative appeals or instructions to science and technology, without reflecting on, revising, or developing the ethical and moral system itself.

In fact, for many major issues in the development of science and technology, "it is no longer the traditional ethical and moral framework that can provide some valuable answers, but requires critical reflection on traditional ethical concepts and their prerequisite regulations" in order to arrive at effective answers. For the moral judgment of artificial intelligence specifically, it is necessary to reflect critically on "whether artificial intelligence is a personal entity and a moral entity in the true sense", rather than becoming entangled in minor details. Otherwise it will be impossible to grasp the essence of the problem or to find a fundamental solution.

Reference:

Wang Yinchun. "Moral judgment of artificial intelligence and its ethical suggestions." Journal of Nanjing Normal University (Social Science Edition), 2018(04): 29-36.

Wang Guoyu, a professor at Fudan University, has long been engaged in research on the basic theories and applied strategies of high-tech ethics. She is the chief expert of the National Social Science Foundation's major project "Research on High-tech Ethical Issues." She argues that the study of ethics and morality in the new era of science and technology should, on the one hand, start from reality and be guided by an awareness of concrete problems, pointing out that modern technology has obscured the traditional outlook on life. On the other hand, taking the path of technological ethics, she emphasizes that technology embodies values, and that it is necessary to clarify concepts at the source, formulate norms, and then move from an ethical community and a scientific community to a community of action.

Professor Wang Guoyu has been specially invited to give a keynote report at the conference. Sub-forums on themes such as "Is there a moral boundary in artificial intelligence development?" and "The future of intelligent driving" will also be held to discuss the moral and ethical issues of artificial intelligence.

CNCC introduction:

The conference consists of conference reports, conference forums, technical forums, activities, exhibitions, and other sessions. Technical forums are the most numerous, and more than 400 leading figures have contributed to the conference. Its many high-quality forums attract a large number of participants from academia and industry at home and abroad, making it a stage for cutting-edge exchanges in computing. For more predictions on cutting-edge technologies and applications, the following is a list of technology forums that have been approved by this conference:
