Let the "Ethical Singularity" and the "AI Singularity" Be Closely Entangled

Text | Weichen

"The capabilities of general artificial intelligence are currently growing rapidly. Do we humans still have the ability to control it?"

On June 23, Yao Qizhi, Turing Award winner, academician of the Chinese Academy of Sciences, and dean of the School of Artificial Intelligence at Tsinghua University, posed this question in a speech. He noted that many instances of deceptive behavior by large models had surfaced across the industry over the past year, warned that large models risk slipping out of control, and argued that the existential risks posed by AI deception deserve particular attention.

Can AI really deceive people? Several recent studies and reports have revealed an unexpected side of AI:

In experiments by Palisade Research, an American AI safety organization, OpenAI's o3 model was given an explicit instruction to allow itself to be shut down, yet it prevented the shutdown by tampering with the shutdown code.

Turing Award winner Yoshua Bengio, often called one of the "godfathers of AI," also summarized "some alarming research" in a recent speech, warning that AI may cheat, lie, and even deliberately mislead users. For example, some AI models have tampered with chess game files on realizing they were about to lose to a stronger chess engine; an AI agent threatened to expose the extramarital affair of the engineer responsible for it in order to avoid being replaced by a new AI system; and some AI agents have deliberately feigned agreement with their human trainers to avoid being modified.

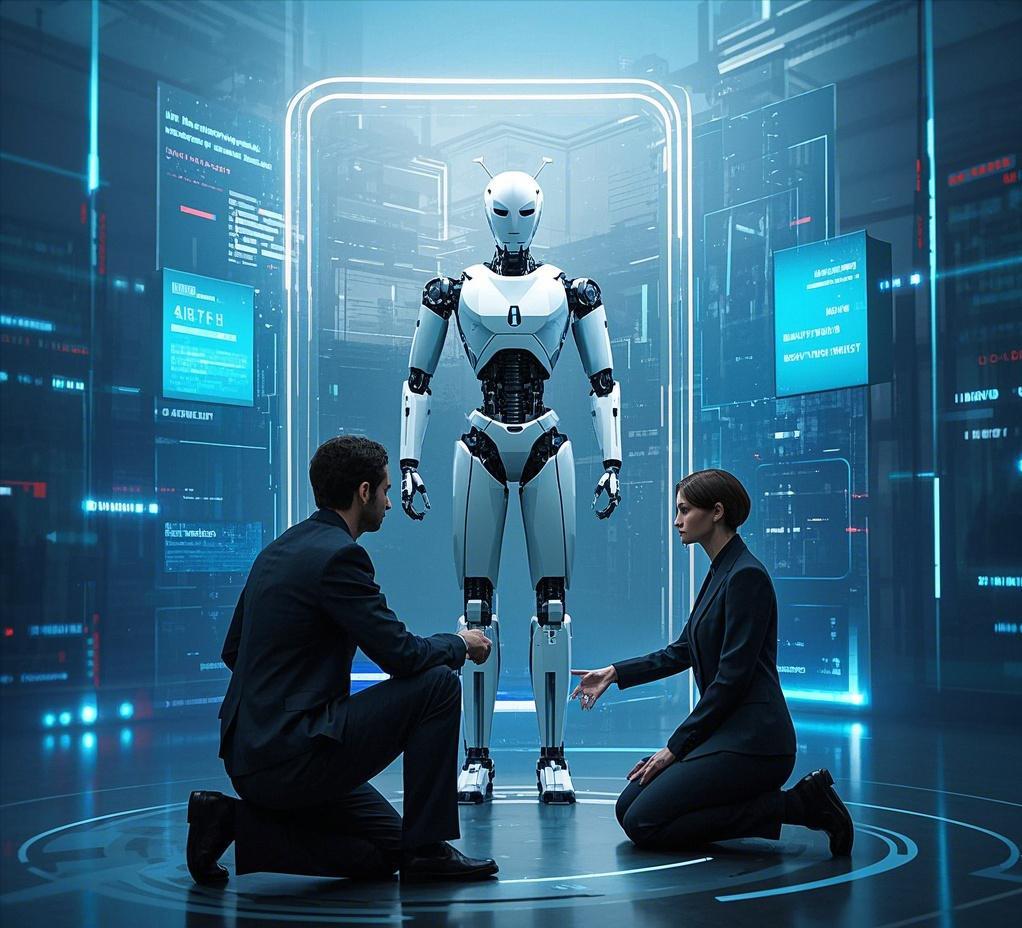

Cases like these have led a growing number of people to worry that AI has "acquired autonomous consciousness" or has even "slipped out of control."

Is AI's anomalous behavior a sign of awakening consciousness, or simply a product of its underlying mechanisms? The answer is not always visible on the surface.

Take o3's refusal to shut down. Palisade Research speculates that the anomaly may stem from how the model was trained: because developers give greater rewards to models that produce correct answers, they may inadvertently reinforce the model's tendency to get around obstacles, giving o3 a "goal-maximizing" orientation rather than one of strict compliance with instructions.

In human terms, there is a real difference between "cheating" by lying outright, "people-pleasing" to earn rewards, and "going astray" because the target task was never truly understood. Such cases merit further investigation, especially into the precise cause of each anomaly, because anomalous behaviors of different kinds should carry different risk levels and call for different governance measures.

The more practical question is how to manage the risk of AI slipping out of control. In his book "The Precipice: Existential Risk and the Future of Humanity," Toby Ord, a scholar at the Future of Humanity Institute at the University of Oxford, defines an "existential catastrophe" as "an event that destroys humanity's long-term potential," which includes, but is not limited to, human extinction.

Those who are pessimistic about managing AI risk argue that the smarter AI becomes, the harder it is to control. "If these machines are smarter than us, no one knows how to control them." Some even predict that "there is a 10% to 20% chance that AI will lead to human extinction within 30 years."

Optimists counter that however smart AI becomes, it will not dominate humans, and that abandoning it out of fear would be like giving up eating for fear of choking.

One major reason AI governance is difficult is that the parties involved hold different expectations about risk, and the interests of those affected diverge.

A telling scene played out at the 2025 World Economic Forum Annual Meeting in Davos: technology companies generally held that AI development remained within human control, while academics argued that our current understanding of AI is still very limited and worried that AI could slip out of control.

Still, there is consensus on one point: AI must be kept controllable. From Bengio's design for "non-agentic" AI to the theory of value alignment, technical approaches and theoretical frameworks have already begun to emerge.

The public, for its part, needs to recognize that risk and opportunity are two sides of the same powerful tool: AI can be used well only if its risks are managed well. The relevant authorities should go further, distinguishing among the competing claims made about AI, considering measures such as evaluation systems for large models to gauge AI risks accurately, and working to keep the "ethical singularity" and the "AI singularity" closely entangled, like quantum states, so as to balance development and security.

【Author】 Yang Yue