Frontier Talk | Is Artificial Intelligence Ethics a "Moral Show" or an "Action Manual"? (Part 1)


Are the ethical principles we formulate for AI a "moral show" or an "action manual"? This is the second installment of the "Frontier Talk on AI Ethics" column in 2025. Author: Bloomberg; proofreader: Xie Youyou.

Editor's note: Are the ethical principles we formulate for AI a "moral show" staged by companies and institutions to polish their image and calm public doubts, or an "action manual" that can genuinely guide us in developing and applying AI responsibly? This is the 2025 installment of the "Frontier Talk on AI Ethics" column (Part 1). Drawing on applied ethics scholarship, it examines AI ethics in depth, from its origins to its core principles and from the contest among stakeholders to the many difficulties of practice. It tries to clarify what AI ethics really is and to explore a possible path from theory to practice.

Table of contents

[Owing to its length, this article is published in two parts.]

(Part 1)

1. The "past and present" of artificial intelligence ethics: from science fiction to reality

2. Consensus and differences in AI ethical principles

3. Moral show: the difficulty of implementing AI ethics

(Part 2)

4. From principles to code: the developer's "last mile" in ethical practice

5. Action manual: moving toward responsible AI

6. Conclusion

1. The "past and present" of artificial intelligence ethics: from science fiction to reality

The idea of artificial intelligence ethics emerged almost at the same time as the concept of artificial intelligence itself. At the 1956 Dartmouth Conference, organized by John McCarthy and others, the term "artificial intelligence" (AI) was formally introduced, and philosophical and ethical reflection on machine intelligence quietly began.

Interestingly, science fiction played an important role in the birth of early AI ethics. In his 1942 short story "Runaround" (later collected in "I, Robot"), the science fiction writer Isaac Asimov first set out the "Three Laws of Robotics". In 1985 he expanded them into four laws by adding a "Zeroth Law".

Asimov's Three Laws of Robotics

Asimov's Four Laws of Robotics

Although the laws of robotics originated in fiction, they became an important thought experiment and conceptual starting point in early discussions of how to regulate AI behavior. They gave later thinkers a preliminary framework for reasoning about AI control and human safety. They also reveal a pattern: in early debates over the ethics of complex technologies, compelling narratives tend to be more influential than dense academic works, and they shape public and early scholarly understanding more easily.

AI ethics arose in response to the potential risks and challenges posed by AI technology. Early concerns focused on fairly concrete issues, such as the deception that robots with human-like appearance or behavior might cause. As the military poured funding into AI research during the Cold War, the potential for AI misuse also began to attract attention.

From the late 20th century into the early 21st century, AI technology matured and its applications expanded, driven especially by the spread of machine learning and data analytics. As a result, the discussion of AI ethics gradually shifted from philosophical reflection toward more specific and urgent social realities. Just as AI itself evolved from theoretical research into practical applications, the concerns of AI ethics evolved too. High-risk applications (such as autonomous driving and medical diagnosis) and several high-profile AI failures (such as discriminatory outcomes caused by algorithmic bias) underscored the urgency of formulating and implementing ethical frameworks for AI. Professional organizations such as the Institute of Electrical and Electronics Engineers (IEEE) and the Association for Computing Machinery (ACM) also began developing relevant codes of conduct and ethical guidelines.

By the 2010s, AI ethics had emerged as an independent interdisciplinary field devoted to the systematic study of the complex moral and social problems raised by AI development. As AI systems grow more capable and autonomous, they bring benefits to society but also introduce many new and complex risks. These concerns are not alarmist; many have already surfaced in real applications, turning AI ethics from an ivory-tower academic topic into a practical matter of broad public concern. The main risks and challenges include:

Bias and discrimination

AI systems may learn and amplify human biases hidden in their training data. This can produce unfair outcomes in areas that bear directly on people's vital interests and social well-being, such as hiring, credit approval, criminal justice, and even medical diagnosis. For example, Amazon abandoned an AI recruiting tool after it was found to be biased against female applicants.

Privacy violations

The power of AI is often built on analyzing massive amounts of data, which raises concerns about personal data leaks and misuse. The abuse of facial recognition technology and large-scale government-led surveillance programs are particularly troubling.

Lack of transparency and accountability

Many advanced AI systems today, especially deep learning models, reach decisions through a "black box" process that humans find hard to understand. This opacity makes it difficult to assign accountability and to build public trust when AI systems make mistakes or cause harm.

Unemployment and economic inequality

AI-driven automation may eliminate jobs in some industries, worsening structural economic imbalances and social inequality.

Safety and security risks

Autonomous systems such as self-driving cars may cause accidents through technical failures or unexpected situations (for example, the 2018 fatal crash involving an Uber self-driving test vehicle). AI technology may also be put to malicious uses such as cyberattacks and the development of autonomous weapons.

Disinformation and manipulation

AI can generate highly realistic fake content ("deepfakes") that can be used to spread rumors, defame people, or sway public opinion, seriously damaging the information ecosystem and social trust.

Erosion of human autonomy

AI systems may subtly influence, or even manipulate, human choices and decisions through personalized recommendations and precisely targeted content, weakening people's capacity for independent judgment.

These risks do not exist in isolation; they are interrelated and reinforce one another. For example, the opacity of AI systems (the "black box" problem) makes algorithmic bias harder to detect and correct. Once a biased decision causes harm, that same opacity hinders accountability. Because risks interact in this way, and because the various ethical principles are interdependent, addressing AI risks requires a holistic ethical perspective.

At a deeper level, many AI risks stem from a misalignment between the optimization goals of an AI system and broader human values and social well-being. AI systems are usually designed to optimize specific, quantifiable technical metrics, such as prediction accuracy or task efficiency. Yet these metrics rarely capture complex human values such as fairness, dignity, and social justice. For example, an AI recruiting tool may "accurately" predict which candidates are likely to succeed based on historical data. If that data reflects gender or racial bias, however, the tool may perpetuate injustice even while being technically "accurate". The core challenge of AI ethics, therefore, is not only to prevent malicious uses of AI. It is also to ensure that the design intent and core goals of AI systems align with human-centered values, a problem that simple technical fixes are far from solving.
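The recruiting example can be made concrete with a toy calculation. The Python sketch below is illustrative only; the records are invented, not real data, and the group labels "A" and "B" as well as the helper functions `train_lookup_model`, `accuracy`, and `qualified_selection_rate` are hypothetical. A deliberately naive "model" memorizes the majority historical outcome for each group and qualification level. Because the historical labels already reject most qualified group-B candidates, the model scores well on accuracy measured against those labels, yet it selects none of the qualified group-B candidates.

```python
from collections import defaultdict

# Hypothetical toy records, invented for illustration: (group, qualified, hired_in_past).
# The historical labels are biased: most qualified group-B candidates were rejected.
HISTORY = [
    ("A", True, True), ("A", True, True), ("A", True, True), ("A", False, False),
    ("B", True, True), ("B", True, False), ("B", True, False), ("B", False, False),
]


def train_lookup_model(history):
    """'Train' a naive model: predict the majority past outcome per (group, qualified) pair."""
    votes = defaultdict(list)
    for group, qualified, hired in history:
        votes[(group, qualified)].append(hired)
    # Strict majority of True votes -> predict "hire"; otherwise predict "reject".
    return {key: sum(outcomes) * 2 > len(outcomes) for key, outcomes in votes.items()}


def accuracy(model, history):
    """Share of records where the prediction matches the (biased) historical label."""
    correct = sum(model[(g, q)] == hired for g, q, hired in history)
    return correct / len(history)


def qualified_selection_rate(model, history, group):
    """Share of qualified candidates in `group` that the model would hire."""
    preds = [model[(g, q)] for g, q, _ in history if g == group and q]
    return sum(preds) / len(preds)


model = train_lookup_model(HISTORY)
print(f"accuracy against historical labels: {accuracy(model, HISTORY):.3f}")
for group in ("A", "B"):
    rate = qualified_selection_rate(model, HISTORY, group)
    print(f"group {group}: qualified selection rate = {rate:.2f}")
```

On this invented data the model agrees with 7 of the 8 historical decisions (0.875). However, it hires every qualified group-A candidate and no qualified group-B candidate. A single accuracy figure computed against biased labels cannot reveal this kind of injustice.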

It is worth noting that the rapid development of AI, especially generative AI, is producing new ethical risks at an astonishing pace and sharpening existing tensions. For example, increasingly mature deepfake technology has vastly expanded the capacity to fabricate and spread false information. The debate over copyright in AI-generated content has also intensified as AIGC applications have become popular. The "governance gap" between the pace of technological development on one side and ethical norms and legal oversight on the other keeps widening. This severely tests our ability to establish and implement effective AI ethics frameworks. We therefore need not only to attend to existing ethical principles but also to think ahead: anticipating the problems new technologies may bring and exploring more agile and adaptive approaches to ethical governance.

2. Consensus and differences in AI ethical principles

Faced with the opportunities and challenges that AI brings, actors around the world are actively working to build ethical principles for AI. Although these principles are expressed in different ways, they tend to revolve around some common ideas. Behind this apparent convergence, however, lie subtle differences and contests among actors (such as governments, international organizations, and companies) whose positions, goals, and values differ.

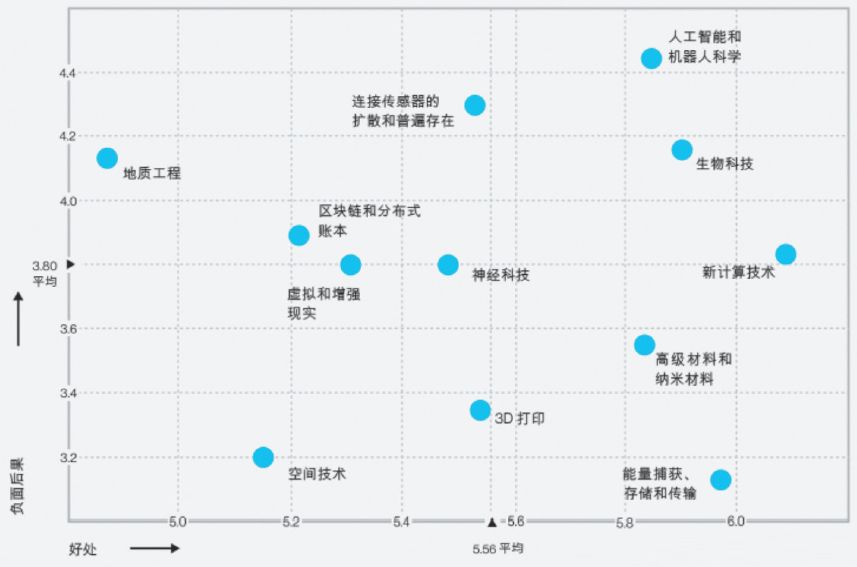

Although AI ethics is still evolving, some core principles have gained broad consensus and have become important cornerstones for guiding AI research, development, and application. Many of these principles are not inventions of the AI era. Rather, they extend and reshape, for the new field of AI, ethical wisdom that human society developed over a long period in philosophy, law, medicine, and other fields. Recent research shows that AI ethics principles are converging, and that their core framework closely resembles the four classical principles of medical ethics. The European Commission's High-Level Expert Group on Artificial Intelligence (AI HLEG) proposed four guiding principles for "trustworthy AI": respect for human autonomy, prevention of harm, fairness, and explicability. In addition, the following core ethical principles are widely recognized:

Table 1: Core AI ethical principles (compiled by Bloomberg)

These core principles do not always coexist harmoniously in practice. They are often in tension with one another, which makes practical AI ethics a matter of constant trade-offs. For example, pursuing maximal transparency by requiring an AI system to explain every step of its decision-making may expose sensitive personal information in its training data, conflicting with the principle of privacy protection. Similarly, to improve a model's accuracy (in service of human welfare or safety), developers may adopt more complex algorithms, but this often reduces the model's interpretability and runs counter to the transparency principle. Likewise, when pursuing algorithmic fairness, imposing overly strict fairness constraints on a model may reduce its overall predictive utility or accuracy to some extent.
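The accuracy-fairness tension can be illustrated with a small grid search. This Python sketch rests on several stated assumptions. The scores and labels are invented. Each group gets its own decision threshold. "Fairness" is taken to mean demographic parity (equal selection rates across groups), which is only one of several competing fairness definitions. The functions `evaluate` and `best_thresholds` are hypothetical helpers, not part of any library.

```python
from itertools import product

# Hypothetical toy data, invented for illustration: (group, model_score, true_label).
DATA = [
    ("A", 0.9, 1), ("A", 0.8, 1), ("A", 0.7, 1), ("A", 0.4, 0), ("A", 0.2, 0),
    ("B", 0.6, 1), ("B", 0.5, 0), ("B", 0.3, 0), ("B", 0.2, 0), ("B", 0.1, 0),
]


def evaluate(thresholds, data):
    """Return (accuracy, selection-rate gap) when each group uses its own threshold."""
    correct = 0
    selected = {g: 0 for g in thresholds}
    counts = {g: 0 for g in thresholds}
    for group, score, label in data:
        pred = int(score >= thresholds[group])  # predict 1 at or above the threshold
        correct += pred == label
        selected[group] += pred
        counts[group] += 1
    rates = [selected[g] / counts[g] for g in thresholds]
    return correct / len(data), max(rates) - min(rates)


def best_thresholds(data, max_gap=None):
    """Grid-search per-group thresholds for the highest accuracy.

    If max_gap is given, keep only threshold pairs whose selection-rate gap
    (a demographic-parity measure) is at most max_gap.
    """
    # Every observed score is a candidate, plus one above all scores ("select nobody").
    candidates = sorted({score for _, score, _ in data}) + [1.01]
    best = None
    for t_a, t_b in product(candidates, repeat=2):
        acc, gap = evaluate({"A": t_a, "B": t_b}, data)
        if max_gap is not None and gap > max_gap:
            continue
        if best is None or acc > best[0]:
            best = (acc, gap, t_a, t_b)
    return best


unconstrained = best_thresholds(DATA)
parity = best_thresholds(DATA, max_gap=0.0)
print("unconstrained: accuracy=%.2f, gap=%.2f" % unconstrained[:2])
print("parity-constrained: accuracy=%.2f, gap=%.2f" % parity[:2])
```

On this toy data, the unconstrained search reaches perfect accuracy with a selection-rate gap of about 0.4 between the groups. Requiring an exact parity gap of zero lowers the best achievable accuracy to 0.8. On other data the cost of a fairness constraint may be smaller or even zero; the sketch only shows that such a cost can arise.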

The inherent tension between principles shows that AI ethics is not a simple formula. It requires careful analysis and prudent judgment in each application scenario, taking into account the specific goals, the possible risks, and the stakeholders involved. The aim is not to realize every principle perfectly, but to find an acceptable, dynamic balance among competing values. This undoubtedly makes AI ethics more complex and challenging in practice, and it makes AI ethics a field that calls for continuous dialogue and exploration.

Although the core principles of AI ethics enjoy a degree of consensus at the macro level, many disputes and unresolved problems remain in how they are understood and applied. No single actor formulates AI ethics principles. They emerge from the joint participation of, and contest among, governments, international organizations, companies, and other forces. Each party has its own motivations, perspectives, and methods, and together they shape the landscape of global AI ethics.

Governments (especially those of the world's major economies) play a leading role in setting AI ethics rules. Their motivations usually include protecting citizens' rights, maintaining social stability, promoting technological innovation responsibly, safeguarding national security, and strengthening economic competitiveness in the global AI race. Depending on their political systems, cultural backgrounds, and development strategies, different countries and regions have taken distinctive paths to AI ethics governance.

The "Artificial Intelligence Act" issued by the EU is the world's first attempt to comprehensively regulate AI, reflecting the core concepts of "people-oriented" and "risk-based". The bill divides AI systems into four levels of risk: unacceptable risks (such as AI that manipulates human behavior, social scoring systems), high risks (such as AI used in critical infrastructure, healthcare, recruitment, law enforcement and other fields), limited risks (such as chatbots that require identity declarations), and low/risk-free (most AI applications), and imposes different levels of regulatory requirements on AI systems at different levels. The EU emphasizes the protection of basic human rights, ensures the security, transparency and accountability of AI systems, and is committed to setting a benchmark for "trusted AI" globally through a unified legal framework. On the one hand, this approach reflects the EU's high attention to civil rights, and on the other hand, it is also regarded as a way for it to exert influence and export values in the governance of the global digital economy.

The EU "Artificial Intelligence Act"

China's exploration of AI ethics has steadily deepened, producing a series of principles and norms to promote the healthy development of AI. For example, the "Ethical Norms for the New Generation of Artificial Intelligence" sets out six basic ethical requirements: enhancing human well-being, promoting fairness and justice, protecting privacy and security, ensuring controllability and trustworthiness, strengthening accountability, and improving ethical literacy. It also specifies 18 ethical requirements for particular activities such as AI management, research and development, supply, and use. Its aim is to integrate ethics into the entire AI life cycle and to provide ethical guidance for natural persons, legal persons, and other institutions engaged in AI-related activities. Unlike the EU's horizontal, unified legislation, China currently takes a more flexible, sector-specific regulatory approach, issuing dedicated rules for particular fields or technologies. For example, the "Interim Measures for the Management of Generative Artificial Intelligence Services" set out regulatory requirements for generative AI services. In particular, providers of generative AI services with public opinion attributes or social mobilization capabilities must carry out security assessments in accordance with relevant national regulations. They must also complete algorithm filing, modification, and deregistration procedures under the "Provisions on the Administration of Algorithmic Recommendation for Internet Information Services". This approach seeks to keep regulation agile and adaptable as AI technology develops rapidly. It also reflects the state's concern with maintaining social order and national strategic interests while promoting AI innovation.

"Ethical Norms for the New Generation of Artificial Intelligence"

At the federal level, the United States has taken a more cautious and fragmented approach to AI ethics legislation. It relies more on market forces and industry self-regulation to guide AI development, while encouraging innovation and maintaining technological leadership. Agencies such as the National Institute of Standards and Technology (NIST) have issued guidance documents such as the AI Risk Management Framework. Even so, the United States still lacks a comprehensive, binding federal law comparable to the EU's. In recent years, however, as AI risks have become more prominent, calls for stronger AI oversight in the United States have grown, and some states have begun to introduce AI legislation.

A comparison of the AI ethics strategies of China, the United States, and Europe shows that they differ on a basic question: how AI risk is defined and understood. This directly shapes their regulatory priorities and choice of approach. For example, the EU classifies risk mainly by the potential harm AI poses to fundamental rights. China's regulation, by contrast, pays particular attention to AI applications involving public opinion, social mobilization capabilities, and national security. These differences are rooted in each party's political and cultural traditions and national priorities. So even where countries agree on goals such as data protection and greater transparency, their specific regulatory measures, risk tolerances, and "red lines" will still reflect their own characteristics. This tendency toward "AI nationalism" undoubtedly complicates the harmonization of global AI ethics standards. It may also leave multinational companies facing complex compliance challenges across jurisdictions.

International organizations act as the "norm shapers" and "dialogue facilitators" of global AI ethics governance. Organizations such as the Organisation for Economic Co-operation and Development (OECD), UNESCO, and the United Nations (UN) have issued ethical guidelines, policy recommendations, and research reports. In doing so, they have actively fostered global consensus on AI ethics and given countries reference points for their own policymaking.

The OECD AI Principles are among the earliest AI ethics frameworks to win broad international recognition. They hold that AI should serve inclusive growth, sustainable development, and human well-being. AI should also respect the rule of law, human rights, and democratic values (including fairness and privacy), and AI systems should be transparent and explainable, robust, secure, and accountable. These principles give governments and other stakeholders a common basis for discussion and a policy reference point. Their influence can be seen in many national and corporate AI ethics guidelines.

OECD official website, "AI Principles"

UNESCO's "Recommendation on the Ethics of Artificial Intelligence" is known for its broader coverage and its strong focus on human rights, dignity, diversity, inclusion, and sustainability. The Recommendation sets out core values and ethical principles, and it also identifies specific policy action areas. These are meant to help member states turn abstract ethical ideas into actionable policy measures.

UNESCO official website, "Recommendation on the Ethics of Artificial Intelligence"

Many international organizations have contributed significantly to building a global discourse on AI ethics and a shared language for communication. Even so, the guidelines they publish mostly fall into the category of "soft law". Such principles carry moral weight and influence, but they usually lack direct legal enforceability. Unlike "hard law", which is binding and backed by the coercive power of the state, soft law must still be translated into each country's domestic laws and regulations and put into concrete practice. Doing so remains a huge challenge.

Moreover, geopolitical rivalry and divergent national interests among member states often constrain the efforts of international organizations. Governments weigh different strategic considerations at home, and countries may likewise hold different views on AI ethics priorities in international forums. They repeatedly balance economic development, national security, and human rights protection against one another. As a result, compromises made during drafting may weaken international norms, or different countries may adopt and implement them to varying degrees. The effectiveness of international AI ethics initiatives therefore depends largely on two things. The first is the willingness of governments (especially those of major economies) to cooperate on common challenges. The second is their wisdom in seeking common ground while setting aside differences.

Companies (especially technology giants) are the main force behind the research, development, and application of AI. Their motivations for formulating and implementing AI ethics principles are multifaceted: reducing legal and reputational risk, building trust with consumers and stakeholders, attracting top scientific and technical talent, and responding to social pressure. Some companies do genuinely aspire to use AI for social good. Many well-known companies, such as Google, Microsoft, IBM, SAP, and Bosch, have released their own AI ethics principles or frameworks.

However, an "innovation-driven" business logic creates a natural tension. On one side are companies' pursuit of profit maximization and competitive advantage. On the other are the investment that implementing ethical principles requires and the development constraints it may impose. Rigorous ethical review and safeguards can slow product development and raise operating costs. This often forces companies into difficult choices between "efficiency first" and "ethical considerations".

In the business world, the motivations behind AI ethics practice are often complex and mixed. On the one hand, companies may act out of a genuine sense of social responsibility. On the other hand, such actions are often part of a corporate risk management strategy, intended to avoid the reputational damage, litigation, or loss of users that unethical behavior may bring.