Self-examination| Artificial Intelligence Ethics

[This article is a bit long and is divided into two parts: the first half is generated by AI and covers common ethical theories and how to embed them in policy analysis, especially AI policy; the second half covers a few articles I have read over the past two days, mainly to understand some categories of current AI ethics.]

[Also, I was originally planning to write a short introduction myself, but I found the AI's version quite appealing, so I used it as the opening. Although it's a little translational

In an era when algorithms determine what news you see, AI can write Shakespearean poetry, and robots can imitate human emotions, discussions about artificial intelligence ethics are arriving like a tide. At academic conferences, experts in suits and ties wave their arms as they talk about "aligning AI with human values"; on the blogs of technology companies, polished illustrations accompany declarations of "responsible AI"; on social media, countless voices mixing worry and expectation pile up under the #AI ethics tag. Discussions of the ethics of AI governance abound, along with appeals of every kind and many so-called problems said to need urgent solutions. It seems that we are standing at the crossroads of technology and ethics, and every decision will affect the direction of human civilization.

However, as we walk from the noisy conference hall into the corridors of government agencies, from high-profile forums to the actual scene of policy-making, those clear voices become blurred, as if passing through heavy frosted glass. Compared with the industry's heated discussions, the dialogue in the actual policy field is still relatively vague, like a ship sailing in fog that knows its general direction but cannot see the reefs ahead.

Imagine an official responsible for formulating AI ethics policies, with a desk piled with reports and suggestions from all parties: esoteric papers from technologists, statements of principles from ethicists, self-regulation initiatives from industry, and expressions of concern from civil society. How should this official find a direction in this ocean of information? What do we really need to worry about and regulate when AI can both write a compelling paper and solve the mystery of protein folding?

Of course, the newly emerging problems do not yet seem to have reached the point of a concentrated outbreak; they are more like icebergs quietly melting. We know that sea levels are rising, but it is difficult to attribute a specific flood to the disappearance of a particular iceberg. In this situation, there seems to be no way to discuss the priority of AI ethics issues. In many cases, we can only make decisions based on predictions, like weather forecasters trying to predict a storm a month away: we have models and data, but reality always has its unpredictable side.

This situation reminds me of a famous saying by the physicist Feynman: "I think I can safely say that nobody understands quantum mechanics." For AI ethics, perhaps we can also say: no one really knows how to perfectly regulate a technology that is still developing rapidly. Are we fighting ghosts? Or are we designing solutions for problems that have not yet emerged?

Let us temporarily put aside the grand narrative and abstract principles and return to concrete cases and situations. What does fairness mean when an AI algorithm decides who can get a loan? Should self-driving cars protect passengers or pedestrians when they face inevitable accidents? When AI content generators create art that seems to be created by real people, where is the boundary between creativity and plagiarism?

There are no simple answers to these questions, but they at least bring us back from the clouds to the ground and from the abstract to the concrete. Perhaps discussions of AI ethics should not exist only in academic papers and company manifestos, but should reach into every corner of daily life, because AI has quietly changed the way we live, and its ethical influence is everywhere.

In the discussion that follows, I would like to invite you to think together: Are we discussing the AI ethics issues that truly matter? How should policy makers find direction amid uncertainty? What responsibilities should individuals, enterprises and society bear? Perhaps AI ethics is not just a problem that needs to be solved, but also a journey that needs to be explored together.

Part 1: Ask AI:

1) What are the common ethical theories?

Answer: Ethical theory provides a framework for understanding moral principles and decision-making. The most well-known systems include:

Utilitarianism focuses on outcomes and advocates actions that bring the greatest welfare to the greatest number of people. Its variants include: 1) Act utilitarianism: assessing each individual action by its consequences; 2) Rule utilitarianism: following rules that usually produce the best results.

Deontology (Kantian ethics) emphasizes compliance with moral duties and rules, such as treating people as ends rather than merely as means. Main features: 1) Categorical imperative: act only on principles that could apply universally; 2) Rights-based ethics: giving priority to respect for individual rights. In contrast to utilitarianism, which emphasizes results, deontology holds that whether an action is right or wrong depends on the action itself and the principle that motivates it.

Virtue ethics (Aristotle) emphasizes the cultivation of moral character through virtues such as courage and honesty, so that decision-making is consistent with ideal traits.

Ethics of care: gives priority to empathy and relationships over abstract rules.

Social contract theory: grounds ethics in hypothetical agreements reached by rational agents.

Divine command theory: derives morality from divine commands.

Data ethics: addresses questions of responsibility in the use of digital information.

2) How to integrate ethical theory into policy design? What are the operational methods?

Answer: Ethics is the value basis of public policy and also one of its inherent requirements. Strengthening the ethical foundations of policy is an important way to realize policy value and improve policy quality. However, it is not easy to transform ethical theory into practical policies. Common ethical imbalances in the policy planning process show up mainly in three aspects:

The core reason for these problems is the "lack of procedural and normative nature in the design process of policy proposals", together with the gap between the abstraction of ethical theory and the concreteness of policy practice. To ensure that policies reflect ethical values, an evaluation framework can be established that includes the following elements:

This evaluation framework should run through the entire process of policy formulation, including problem definition, program design, program selection and policy implementation.

Artificial intelligence is an important field for integrating ethics into policy. The following paths can be used to put this into practice:

Quantitative evaluation of artificial intelligence ethics - A quantitative evaluation system for artificial intelligence ethics can include the following aspects:

Multi-level indicator system: build an evaluation system with first-level indicators such as technology, security, social responsibility, and law, and secondary indicators such as false information, controllability, and transparency.

Situational ethical judgment: collect the ethical judgments of the public and experts in specific situations through crowdsourcing, forming a data and knowledge platform for ethical evaluation.

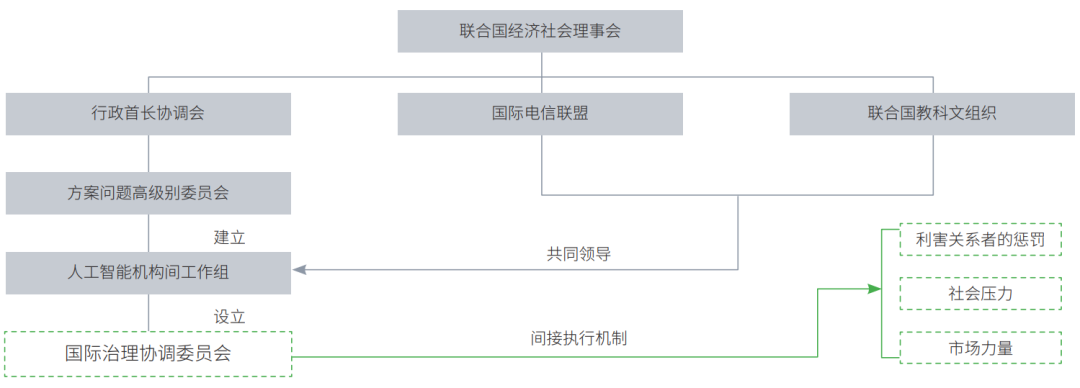
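
The multi-level indicator system described above can be sketched as a small weighted tree. This is only an illustration under stated assumptions: the `Indicator` class, the grouping of secondary indicators under first-level ones, and all weights and scores are hypothetical and do not come from any published evaluation standard.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Indicator:
    """A node in a hypothetical multi-level ethics indicator tree."""
    name: str
    weight: float                    # relative weight among siblings (assumed)
    score: Optional[float] = None    # leaf score on an assumed 0-1 scale
    children: List["Indicator"] = field(default_factory=list)

    def evaluate(self) -> float:
        """Return the leaf score, or the weighted mean of the children."""
        if not self.children:
            if self.score is None:
                raise ValueError(f"leaf indicator {self.name!r} has no score")
            return self.score
        total_weight = sum(child.weight for child in self.children)
        weighted_sum = sum(child.weight * child.evaluate() for child in self.children)
        return weighted_sum / total_weight


# Hypothetical grouping, weights and scores, for illustration only.
system = Indicator("AI ethics evaluation", 1.0, children=[
    Indicator("technology", 0.25,
              children=[Indicator("transparency", 1.0, score=0.6)]),
    Indicator("security", 0.25,
              children=[Indicator("controllability", 1.0, score=0.8)]),
    Indicator("social responsibility", 0.25,
              children=[Indicator("false information", 1.0, score=0.5)]),
    Indicator("law", 0.25, score=0.7),
])

overall = system.evaluate()
print(f"overall score: {overall:.2f}")
```

In a real evaluation the hard part is not this arithmetic but deciding who sets the weights and how each leaf score is measured, which is exactly where the crowdsourced situational judgments could come in.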

Mechanism design of artificial intelligence ethics - To address the "effectiveness deficit" of artificial intelligence ethics, the following mechanisms can be adopted:

Organizational mechanism: establish an organization responsible for supervising and continuously promoting the implementation of ethical norms.

Compliance pressure mechanism: increase the pressure to comply through industry standards, market pressure, and similar means.

Compliance incentive mechanism: provide economic or other incentives that encourage enterprises to comply with ethical norms.

Technical methodological mechanism: develop specific technical means of implementing ethical norms, such as data governance and algorithmic auditing.
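
As one concrete illustration of what "algorithmic auditing" might look like in practice, the sketch below computes approval rates per group and the gap between the highest and lowest rate (often called a demographic parity gap). The text above does not name a specific audit metric, so the choice of metric, the function names, and the toy loan decisions are all assumptions made for illustration.

```python
from collections import defaultdict


def approval_rates(decisions):
    """Compute the approval rate per group.

    `decisions` is an iterable of (group, approved) pairs, where
    `approved` is a bool. The data format is a hypothetical one.
    """
    counts = defaultdict(lambda: [0, 0])  # group -> [approved count, total count]
    for group, approved in decisions:
        counts[group][0] += int(approved)
        counts[group][1] += 1
    return {group: ok / total for group, (ok, total) in counts.items()}


def parity_gap(decisions):
    """Difference between the highest and lowest group approval rate."""
    rates = approval_rates(decisions)
    return max(rates.values()) - min(rates.values())


# Toy loan decisions for two hypothetical groups.
toy_decisions = [
    ("A", True), ("A", True), ("A", False), ("A", True),
    ("B", True), ("B", False), ("B", False), ("B", False),
]

print(approval_rates(toy_decisions))
print(parity_gap(toy_decisions))
```

A large gap does not by itself prove unfairness, and a small one does not prove fairness; an audit like this only turns one narrow question into a number that an oversight body can track over time.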

Hybrid solutions to artificial intelligence ethics - A "hybrid solution" can be used to deal with artificial intelligence ethics problems:

Internal ethical design: starting from top-level design, build ethics into artificial intelligence products and establish ethical standards and norms.

Strengthen external responsibility: strengthen designers' awareness of social responsibility and strengthen the assessment and management of artificial intelligence security.

3) What research topics would you suggest in artificial intelligence and ethics?

Direction 1: Research on basic ethical theory of artificial intelligence

Direction 2: Research on the ethical norms and evaluation system of artificial intelligence

Direction 3: Research on the unique ethical issues of generative artificial intelligence

Direction 4: Research on the Implementation and Governance of Artificial Intelligence Ethics

Also: Ethical research on AI applications in specific fields

The ethical challenges faced by AI systems vary across application fields and require targeted research, for example in healthcare, education, autonomous driving, and privacy.

Part 2: Literature Reading

Floridi, L., & Cowls, J. (2022). A unified framework of five principles for AI in society. Machine learning and the city: Applications in architecture and urban design, 535-545.

How can the problem of the "proliferation of principles" in AI be solved? This article reports the results of a fine-grained analysis of several of the highest-profile sets of AI ethical principles. The study identifies an overarching framework of five core principles for ethical AI. Four of these are core principles commonly used in bioethics: beneficence (promoting well-being, preserving dignity, and sustaining the planet), non-maleficence (privacy, security, and "capability caution"), autonomy (the power to decide), and justice (promoting prosperity, preserving solidarity, avoiding unfairness). Based on the comparative analysis, the authors argue that a new principle is needed: explicability, understood as combining intelligibility in the epistemological sense (an answer to the question "how does it work?") and accountability in the ethical sense (an answer to the question "who is responsible for how it works?").

In this comparison, the authors analyzed six high-profile initiatives for socially beneficial AI: the Asilomar AI Principles; the Montreal Declaration for Responsible AI; Ethically Aligned Design: A Vision for Prioritizing Human Well-being with Autonomous and Intelligent Systems; the ethical principles proposed in the Statement on Artificial Intelligence, Robotics and "Autonomous" Systems, released in March 2018 by the European Commission's European Group on Ethics in Science and New Technologies; the "five overarching principles for an AI code" proposed in the report "AI in the UK: ready, willing and able?", released in April 2018 by the House of Lords Artificial Intelligence Committee; and the Tenets of the Partnership on AI. [A total of 47 principles were extracted]

Munn, L. The uselessness of AI ethics. AI and Ethics 3, 869–877 (2023).

In recent years, AI ethics frameworks and guidelines have emerged in large numbers, producing a corresponding series of principles. This article argues that AI ethical principles are largely useless, for three reasons: the principles are meaningless, isolated, and toothless. The result of this failure is a gap between noble ideals and actual technological development, that is, a gap between principles and practice. While recent research aims to close this gap by operationalizing principles, that work is fraught with difficulty, because it tries to translate contested social concepts into technical rule sets and feature sets.

Many principles are isolated: for example, many undergraduate data science degrees emphasize computer science and statistics but lack ethics training. In Australia, Gorur et al. surveyed courses at 12 universities and found that they focused on microethical concepts such as professionalism, while lacking macroethical agendas such as improving society and the planet.

Beyond turning to ethical principles, what other approaches might be more effective? One approach is to think more broadly about AI justice. On this view, the current focus on procedural AI issues such as transparency is narrow, and it “confuses and diverts our focus on more substantial and fundamental issues such as concentration of power, substantive policies and actions of technology giants.” Similarly, an excessive focus on bias is said to distract us from more fundamental and urgent questions about power and artificial intelligence. Another approach is to take a narrower perspective, such as breaking down the fuzzy concept of “morality” into measurable indicators and discrete goals (such as accuracy and consistency) and making concrete progress on improving artificial intelligence.
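
To make the narrower approach concrete, here is a minimal sketch of two measurable indicators of the kind mentioned above. The definitions are assumptions chosen for illustration: `accuracy` is the share of predictions that match reference labels, and `consistency` is the share of paired inputs (for example, two phrasings of the same case) that receive the same output. The article summarized here does not prescribe these exact formulas.

```python
def accuracy(predictions, labels):
    """Share of predictions that match the reference labels."""
    if len(predictions) != len(labels):
        raise ValueError("predictions and labels must have the same length")
    if not labels:
        raise ValueError("at least one example is required")
    matches = sum(p == y for p, y in zip(predictions, labels))
    return matches / len(labels)


def consistency(paired_outputs):
    """Share of input pairs that receive identical outputs.

    Each element is a pair (output_for_variant_1, output_for_variant_2)
    produced for two variants of the same underlying case (an assumed setup).
    """
    if not paired_outputs:
        raise ValueError("at least one pair is required")
    same = sum(a == b for a, b in paired_outputs)
    return same / len(paired_outputs)


# Toy data, for illustration only.
print(accuracy([1, 0, 1, 1], [1, 0, 0, 1]))
print(consistency([("approve", "approve"), ("deny", "approve")]))
```

Numbers like these are easy to track and improve, which is the appeal of the narrow view; the risk, as the broader critique suggests, is that they leave questions of power and purpose untouched.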

Prem, E. (2023). From ethical AI frameworks to tools: a review of approaches. AI and Ethics, 3(3), 699-716.

This article reviews more than 100 articles that aim to contribute to the design of ethical AI systems, extending the work of other researchers. It develops a structured inventory and characterizes the proposed approaches to creating ethical AI systems, revealing an extremely wide range of tools and techniques, from algorithms to general frameworks, as well as tools that can become part of the ethical infrastructure of AI, such as datasets, communities, or license models.

The review finds that democratic control and governance are not at the heart of many AI frameworks, and only a few approaches mention these issues. Other aspects rarely addressed include: existential threats; threats to social cohesion, such as echo chambers caused by algorithmic moderation of discourse; abuse of AI for political purposes; the advantages and disadvantages of algorithmic decision-making; environmental costs; the hidden social costs of AI (e.g. clickwork); and private research funding.

Several feasible tools do not appear explicitly in the approaches analyzed. For example, some companies have begun to establish ethics committees and boards. Although regulation is mentioned in some approaches, there is little on how it can be used in the design of ethical systems. Given the clear trend toward more regulation of AI systems, such as bans on certain use cases (such as face recognition) or the EU's proposed new AI regulatory framework, more approaches that address regulatory issues may appear. Another essential tool, especially in industrial practice, is guidance and consulting.

Hagendorff, T. (2024). Mapping the ethics of generative AI: A comprehensive scoping review. Minds and Machines, 34(4), 39.

[The methods/steps used in this study include: arXiv API (5 pieces) (full after use); NVIVO for analysis]

The study's analysis of recent literature shows that emerging topics include hallucinations, alignment, harmful content, copyright, models leaking private data, and the impact on human creativity. Concepts such as credibility or responsibility have become less prominent because fewer articles discuss them, while other concepts have become more common, especially fairness and safety.

The author also drew a figure covering 19 categories in total, which is quite comprehensive:

Nguyen, C. T. (2024). Value capture.

Value capture is the phenomenon in which a person (or organization) originally holds rich, subtle, multi-layered values, but after entering a social environment that offers simplified (usually quantified) versions of those values, the simplified versions come to dominate their actual thinking and decision-making. Examples include step counts, likes and retweets, citation rates, best-school rankings, and grade point averages.

The author points out that an important feature of value capture is "value outsourcing": we hand over to external standards and indicators the process of value judgment and adjustment that we should have carried out ourselves.

[Recently I have become a little interested in the topic of AI ethics, so I read some articles to try to understand how the concept of ethics can be used in AI governance. Since I do not have a philosophy background, my understanding is still somewhat confused, and some translations may be incomplete. Next, if I have time, I plan to take a look at the of and of. ]