Li Xueyao | Interdisciplinary Construction of an Artificial Intelligence Ethics System: Ideas from Complex Adaptive Systems

Abstract: How to move artificial intelligence ethics from moral principles to practice is a concern shared across sectors worldwide. Ethics, law, economics, public administration, and sociology all offer rich theoretical tools for this task. Among them, principlism is the dominant methodology in artificial intelligence ethics. It has advantages such as flexibility, but it also suffers from a series of problems, including its assumption of complete rationality. By integrating evolutionary psychology, socio-technical systems theory, and behavioral economics, a "complex adaptive system ethics" can be built that offers a dynamically adaptable and technically supported theoretical framework, effectively integrating multi-level, multi-stakeholder participation with interdisciplinary theory. Existing discussions of legal principles in legal philosophy, including Luhmann's systems theory of law, can also inform research on science and technology ethics and the construction of related institutions.

Keywords: principlism; complex adaptive systems; evolutionary psychology; behavioral economics; socio-technical systems theory

How to transform artificial intelligence ethics from a framework of moral principles into ethical norms that are operational, predictable, and technically implementable is a practical problem in urgent need of a solution. As the dominant theory and policy-making guide in science and technology ethics, principlism struggles to keep pace with rapid technological development. It needs to be reworked in light of the technical characteristics of artificial intelligence and of practices such as value alignment and ethical auditing. The article "The Application, Dilemmas, and Transcendence of Principlism in the Field of Artificial Intelligence Ethics" proposed using evolutionary psychology and social systems theory to transform principlism theoretically. In addition to elaborating on those ideas, this article further integrates behavioral economics and complex adaptive systems theory to sketch an initial "complex adaptive system ethics". The aim is to respond to the problem of logical consistency between practical operation and theoretical integration in the current construction of an artificial intelligence ethics system.

1. Redefining the theoretical framework of principlism

Principlism was proposed by Tom Beauchamp and James Childress in the late 1970s and was an important theoretical milestone in the formation of biomedical ethics as a discipline. As a methodological framework, it is widely used in both the academic research and the practice of biomedical ethics. In recent years, the theoretical and institutional development of artificial intelligence ethics has largely taken place within the principlist framework.

First, it is a non-foundational framework of multiple parallel principles. Principlism does not supply an absolute philosophical foundation for each specific principle, nor does it try to prove that these principles are the most effective in every case. The principles can therefore be weighed against one another and adjusted to the situation. The ethical principle frameworks for artificial intelligence around the world today share this feature and draw on the philosophical foundations of schools such as deontology, consequentialism, and virtue ethics. For example, promoting human welfare and accuracy derive from utilitarianism; interpretability, privacy protection, and accountability derive from deontology; and trustworthiness and responsibility derive from virtue ethics. Norms such as anti-bias, fairness, transparency, and participation are shared by several ethical schools.

Second, it takes a universalist position grounded in common morality theory. Although principlism stresses its non-foundational character, it also appeals to common morality theory to justify its claim to universality. In discussions of artificial intelligence ethics, many principles and standards have been drafted from a universalist perspective. For example, the "Ethics Guidelines for Trustworthy AI" prepared by the European Commission's High-Level Expert Group on Artificial Intelligence, as well as the artificial intelligence ethics norms issued by UNESCO, the United States, and others, all try to offer a universally applicable ethical framework. They claim that principles such as privacy protection, fairness, and transparency apply to the development and application of artificial intelligence worldwide and are general standards across cultures and borders. This is highly consistent with common morality theory.

Third, it sets no hierarchy among principles and relies on a mechanism of reflective equilibrium. Within the principlist framework there is no fixed ranking of principles; reflective equilibrium determines how different ethical considerations are weighed in a specific situation. This process does not depend strictly on external rules or theories. Instead, it moves gradually toward ethical balance through mutual correction between judgments about specific cases and moral principles. This differs sharply from mainstream theories in law, such as analytical jurisprudence, which seeks to secure the certainty of the legal system through logical analysis. Even where analytical jurisprudence accepts that legal principles are an authoritative starting point ranking above specific rules, it does not regard them as having the paired structure of factual conditions and legal consequences that rules have. Principles therefore only supplement rules in application, and their use in applying the law should be constrained by formal conditions of universality, proportionality, and systematic coherence.

Fourth, it is practice-oriented and problem-driven. Principlism focuses on how principles are actually applied in complex and changing environments. It aims to give guidance on hard moral questions rather than to settle abstract theoretical debates; that is, "principlism was not designed by academic philosophers and ethicists focused on theoretical self-consistency." The ethical principles of artificial intelligence are likewise usually designed to address practical problems and technical challenges. For example, privacy and data protection principles respond to the threat that technologies such as big data and machine learning pose to user privacy, while requirements such as fairness and transparency aim to prevent prejudice and discrimination in artificial intelligence decision-making. This orientation matches the practical, problem-driven character of principlism.

2. Corrective ideas from evolutionary psychology

By studying the evolutionary mechanisms of human moral behavior, evolutionary psychology can lend support to principlism, help resolve key questions about cultural diversity, universality, and moral intuition, and make principlism more effective in dealing with real moral challenges.

First, it reveals the source of moral principles by explaining their evolutionary basis. How the four core principles of respect for autonomy, non-maleficence, beneficence, and justice apply in different cultures, and why they are universal, has long been a focus of ethical debate. By studying the evolutionary basis of moral behavior, evolutionary psychology can explain the common origin of these principles in human history and so serve as background theory for principlism. For example, the principle of autonomy can be interpreted in terms of individuals' capacity to make their own decisions and control their own fate, which increased the chance of survival over the course of human evolution and conferred an evolutionary advantage. Research in evolutionary psychology also shows that fair behavior enhances social cooperation, reduces conflict within groups, and ultimately improves the viability of the group as a whole. In particular, Jonathan Haidt's moral foundations theory can be used to explain why a common morality framework is needed and why a fusion of deontology, consequentialism, and virtue ethics is possible.

Second, it addresses cultural diversity through an evolutionary explanation of moral diversity. Dealing with moral diversity across cultural contexts is a major challenge for principlism. Evolutionary psychology can help principlism understand and cope with this diversity by providing an evolution-based explanatory framework. It recognizes that humans adapted to different ecological and social environments in the course of evolution, which gave rise to different cultures. Cultural differences can thus be interpreted as adaptive strategies suited to different contexts.

Third, it strengthens the reflective equilibrium mechanism through an understanding of moral intuition. Reflective equilibrium relies on people resolving moral conflicts in specific situations through reflection and rational weighing. Yet moral intuition and emotion also play an important role in moral judgment, and they are a research priority of evolutionary psychology. Evolutionary psychology holds that moral intuition originates in rapid-response mechanisms formed over long-term human evolution, which help people decide quickly in complex environments. Moral intuitions, such as an instinctive aversion to unfair behavior, are emotional responses rooted in evolutionary adaptation, and they help people quickly identify behavior that threatens group cooperation or stability. This understanding can increase the explanatory power of reflective equilibrium within principlism. It leads principlism to recognize the role of moral emotions in everyday moral judgment and to give more weight to emotion and intuition in moral decision-making, rather than relying on purely rational reflection alone.

Fourth, it enhances universality by explaining why certain moral principles are shared across cultures. Principlism emphasizes the universality of its moral principles, but this claim is often challenged in multicultural contexts. Evolutionary psychology shows that some basic moral behaviors, such as cooperation, resistance to deception, trust, and punishment of injustice, were preserved by natural selection during human evolution. These behaviors allow human groups to maintain stability and cooperation, which explains why they are found across cultures. This account strengthens principlism's claim to universality and makes principlism more persuasive in global and cross-cultural ethical discussions.

Fifth, it explains moral change and adaptation, allowing moral principles to be adjusted dynamically. As human society evolves, ethical norms change along with society, technology, and the environment. Evolutionary psychology can provide an explanatory framework for moral change and help principlism stay flexible and adaptable in a rapidly changing society. For example, emerging technologies such as artificial intelligence and gene editing raise new ethical challenges, and evolutionary psychology can help principlism understand how morality adapts to them. Technological change has altered how people cooperate and how they interact morally, which may require traditional moral principles to be reinterpreted and adapted. Drawing on evolutionary psychology's dynamic understanding of social change, principlism can develop a more flexible moral framework that adapts to changing environmental and technological challenges.

3. Corrective ideas from socio-technical systems theory

Socio-technical systems theory emphasizes the interaction and coordination between technical systems and social systems. With the development of artificial intelligence and machine learning, machines are no longer mere tools; their behavior and decision-making capabilities increasingly resemble those of "autonomous" systems. This requires ethical theories, principlism included, to reconsider the role of machines in socio-technical systems. Only the strand of socio-technical systems theory that centers on machine behavior is developed here.

First, redefine the principle of autonomy. The principle of autonomy focuses on protecting individuals' power to decide for themselves, but with the widespread use of artificial intelligence systems, machine decision-making has begun to affect human decision-making autonomy. In socio-technical systems, machines not only process information and analyze data but also make complex decisions. "Autonomy" then no longer belongs to humans alone but extends to scenarios of human-machine collaboration. Socio-technical systems theory allows the principle of autonomy to be redefined and extended from human autonomy to the collaborative autonomy of humans and machines. The study of machine behavior can clarify the role and limits of machines in decision-making, and it supports a requirement that artificial intelligence systems assist decisions without undermining human autonomy.

Second, strengthen transparency and interpretability. Transparency and interpretability are very important ethical principles for artificial intelligence systems. Machine psychology can help us understand how machines reach decisions and develop more transparent and explainable systems, so that users can clearly see why a machine made a given decision. This echoes the principles of interpretability and transparency in principlism and lets them adapt to new technological environments.

Third, transform the principles of fairness and anti-bias. These principles are extremely important in artificial intelligence systems, especially in data-driven algorithms. The study of machine behavior can reveal how machines behave in complex environments and identify the systemic biases that algorithms may produce. Combining socio-technical systems theory with the study of machine behavior enables principlism to analyze and identify bias problems in artificial intelligence systems in greater depth. By analyzing machine behavior, system designers can identify and eliminate biases at the development stage and so help ensure the fairness of the system.

Fourth, emphasize coordination between the principle of justice and the socio-technical system. Socio-technical systems theory stresses the coordinated development of technology and social systems, and the principle of justice also needs to be interpreted within this coordination. The study of machine behavior can help us understand questions of justice that arise when machines interact with humans in social systems. The principle of justice should be extended to complex socio-technical systems that include machines, so that machines do not produce unfair outcomes when collaborating with humans. Machine psychology can inform the design of artificial intelligence systems so that they take into account the fairness needs of different interest groups when dealing with social problems.

Fifth, clarify the allocation of responsibility. In socio-technical systems, machines appear to be taking on more and more responsibility, which poses new challenges for safety and accountability. The study of machine behavior can help identify and predict the potential risks of machine behavior and support the design of corresponding mechanisms for allocating responsibility. The principles of safety and accountability should be adjusted in light of socio-technical systems theory so that responsibility can be clearly allocated within complex technical systems. In particular, where decisions made by artificial intelligence systems may cause harm, such mechanisms should make clear how accountability is assigned after an accident or a wrong decision.

Sixth, enhance participation. The principle of participation requires that stakeholders have the opportunity to take part in decision-making in systems that involve ethical decisions. In the design and deployment of artificial intelligence systems, the study of machine behavior can help clarify how systems can interact better with users and how users can take part in system development and feedback. Users should not be merely passive consumers of an artificial intelligence system; they should also participate in its design and operation through feedback mechanisms.

4. Corrective ideas from behavioral economics

Behavioral law and economics (referred to below simply as behavioral economics) combines behavioral economics with legal analysis. It focuses on the cognitive biases and irrational behaviors that people exhibit in decision-making and on how these affect the design of legal institutions and policies. It offers a new perspective for building an artificial intelligence ethics system, bringing that system closer to actual human behavior and social needs.

First, it makes the reflective equilibrium mechanism more realistic by understanding and guarding against cognitive bias. The reflective equilibrium mechanism in traditional principlism is over-idealized and ignores the complexity of human behavior. The four major ethical principles of biomedicine and of artificial intelligence all presuppose complete rationality. Research in behavioral economics, however, shows that human decision-making is often shaped by cognitive biases and social situations. When applied to artificial intelligence ethics, behavioral economics can therefore add a bounded-rationality perspective that reflects on behavioral biases. With this perspective, reflective equilibrium can become more dynamic and be adjusted repeatedly to fit users' behavioral patterns, so that ethical principles are not weakened by biases in actual operation. In addition, "nudge" theory from behavioral economics can help improve the choice architecture of artificial intelligence systems. Such systems can be designed to guide users toward decisions that are better for themselves and for society without depriving them of freedom of choice.

Second, it enriches the empirical basis of common morality theory and enhances its explanatory power and practicality. Behavioral economics emphasizes the practical difficulties and obstacles people may face in complying with rules. Recognizing these obstacles, designers of ethical institutions can adjust how principles are expressed and implemented to make them easier to understand and follow. For example, when emphasizing the principles of fairness and non-discrimination, they can provide specific operational guidelines and case analyses to help artificial intelligence developers and users put these principles into practice. Research in behavioral economics can also reveal the public's actual attitudes and reactions when facing questions of artificial intelligence ethics. This helps in formulating an ethics system that reflects social consensus and improves its social acceptance and legitimacy.

Third, it responds more effectively to the institutional change demanded by technological iteration, which helps in formulating more effective laws and policies. Combining behavioral economics with common morality theory can improve the operability of the artificial intelligence ethics system in three respects. First, artificial intelligence systems are often embedded in complex social and organizational environments, and behavioral economics can help common morality theory understand and address the "diffusion of responsibility". In artificial intelligence ethics, every party bears some responsibility, yet individual biases and the complexity of collective behavior may leave no party feeling fully responsible. Insights from behavioral economics make it possible to design a clearer framework for attributing responsibility, so that every actor in the collective can better assume moral and legal responsibility. Second, behavioral economics can help clarify the moral preferences and behavioral habits of different social groups, so that artificial intelligence systems can be designed around these shared moral expectations. Third, a core question for common morality theory in artificial intelligence ethics is how to distribute risks and benefits fairly. Behavioral economics can help study how the public perceives risks and benefits and so help avoid unfairness in artificial intelligence systems.

5. Problems left unresolved by evolutionary psychology, socio-technical systems theory, and behavioral economics

Transforming principlism through evolutionary psychology, socio-technical systems theory, and behavioral economics can resolve many of the problems that traditional principlism faces in dealing with cultural diversity, technological change, and the complexity of practical decision-making. Even with these interdisciplinary perspectives, however, some problems remain.

First, unpredictable and nonlinear ethical conflicts arising from the dynamic change of complex systems cannot be handled. Modern socio-technical systems are becoming ever more complex, and their operation is full of uncertainty and systemic risk, especially in fields such as artificial intelligence and financial technology. Small changes in these systems can have very large effects, and the related problems are often multi-level, multi-factor, and dynamic. As artificial intelligence systems are rapidly deployed in medicine, finance, transportation, and other fields, new ethical conflicts keep emerging, and principlism's fixed framework of principles struggles to adapt fully to these complex and dynamic changes. The study of machine behavior can help with some specific problems, but it lacks a systemic perspective for cross-system and cross-domain moral questions. Evolutionary psychology can explain the evolutionary basis of some core moral principles, but it cannot deal effectively with ethical issues that emerge amid rapid technological change. The bounded rationality of behavioral economics cannot account for the high degree of uncertainty and nonlinearity that moral decisions face.

Second, the question of priority among non-foundational principles cannot be resolved. Although reflective equilibrium allows conflicts between principles to be reconciled in specific situations, in practice the priority of certain principles is hard to define clearly, which makes moral decision-making subjective and inconsistent. For example, when autonomy conflicts with the public interest, the question of how to maximize social welfare while protecting personal privacy involves a complex balance. Vague rules can make such decisions subjective, and in the end the problem of "ethics washing" cannot be avoided. Integrating behavioral economics helps in understanding human behavior in non-rational situations, but it gives no clear guidance on how to prioritize ethical principles. Behavioral economics can therefore improve the application of ethical decision-making in individual cases, but it still cannot provide a consistent standard for the priority of principles.

Third, conflicts of cultural diversity and values persist. Although evolutionary psychology can explain the universality of some moral principles in human evolution, values in different cultural contexts still conflict. For example, Western individualist values and Eastern collectivist culture differ greatly on artificial intelligence ethics, and the universality claimed by principlism still cannot fully meet the needs of all parties in this multicultural setting. Evolutionary psychology offers an evolution-based universal framework, but it does not eliminate value conflicts between cultures, especially under globalization. How to form an effective set of ethical principles in a multicultural context remains an unsolved problem.

Fourth, rapid technological iteration causes ethical guidance to lag. Technology often develops faster than ethics and law can be refined, so ethical principles cannot respond in time to the challenges of emerging technologies. In fields such as artificial intelligence, autonomous weapons, and gene editing, traditional moral principles may fail to cover the complexity and risks involved, leaving many ethical gaps. Principlism relies mainly on case analysis in specific situations to guide behavior, and its lack of a mechanism for adapting quickly to new technologies may cause ethical guidance to fall behind.

Fifth, the problems of systemic risk and responsibility allocation remain. Risks in complex socio-technical systems are often systemic and involve multiple actors, whereas principlism focuses on individual and direct behavior and lacks in-depth discussion of moral strategies for responding to systemic and global risks. When multiple actors participate in one system, allocating responsibility after something goes wrong becomes a hard problem. Behavioral economics and socio-technical systems theory can establish factual "responsibility" through quantitative methods such as causal identification, but the result is still local, individual, and situational, and "theoretical patches" must be applied again and again.

6. Integrative ideas from complex adaptive systems theory

To deal with these remaining problems, we can introduce complex adaptive systems theory, which has generative characteristics, as a means of theoretical integration. Complex adaptive systems theory is a framework for understanding how systems composed of many interacting individuals produce overall behavior through self-organization, nonlinear feedback, and adaptation. Its most prominent characteristics are emergence, adaptability, and diversity. Supported by simulation and related techniques, it is widely used in fields such as emergency management, urban planning, traffic control, public opinion risk prediction, and network security. In the field of artificial intelligence, it can provide a more comprehensive and dynamic framework that integrates the strengths of evolutionary psychology, socio-technical systems theory, and behavioral economics, making principlism better suited to complex, changing, and cross-system ethical situations.

First, handle the evolution of ethical conflicts through dynamic adaptability. Complex adaptive systems theory emphasizes a system's capacity to adapt and change dynamically. When facing ethical conflicts in complex systems, principlism can make ethical decision-making more flexible by introducing the adaptive mechanisms of complex adaptive systems theory. For example, with such mechanisms, ethical decision-making can continuously adjust the priorities among principles as the environment changes, avoiding the rigidity of a fixed framework. In artificial intelligence ethics, where social needs and technology change rapidly, a dynamically adjusted moral framework can be designed on the basis of complex adaptive systems theory, so that decision makers can respond flexibly to new ethical challenges at different stages and in different environments. For example, as artificial intelligence affects autonomy, the meaning of "respecting autonomy" needs to be redefined and reinterpreted.
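The idea of continuously adjusting priorities among principles can be illustrated with a minimal sketch. It is not a method proposed in this article: the principle names, the feedback signal (a per-principle "shortfall" score observed in the environment), the multiplicative update rule, and the `learning_rate` parameter are all illustrative assumptions.

```python
import math

def update_priorities(weights, feedback, learning_rate=0.5):
    """Return new priority weights after one round of environmental feedback.

    weights:  dict mapping principle name -> current weight (sums to 1).
    feedback: dict mapping principle name -> observed shortfall; a positive
              value means the principle is currently under-served.
              (Hypothetical signal, assumed for illustration only.)
    """
    # Multiplicative update: principles with larger shortfall gain weight.
    raw = {
        name: w * math.exp(learning_rate * feedback.get(name, 0.0))
        for name, w in weights.items()
    }
    # Renormalize so the weights remain a distribution over principles.
    total = sum(raw.values())
    return {name: value / total for name, value in raw.items()}

# Start from equal priorities, then observe a privacy shortfall.
weights = {"autonomy": 0.25, "fairness": 0.25, "privacy": 0.25, "welfare": 0.25}
weights = update_priorities(weights, {"privacy": 1.0})
```

After the update, "privacy" carries more weight than the other three principles, while no principle is ever dropped entirely. This mirrors the point that priorities shift with the environment rather than being fixed in advance.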

Second, use a multi-level decision-making framework to address the ambiguity of principle priority. Complex adaptive systems theory emphasizes that systems operate at multiple levels and under multiple factors. Ethical decisions should therefore not rest on weighing principles at a single level; instead, the priority of principles should be set at each system level (such as the individual, the group, society, and technology). This defines ethical priorities more clearly at each level and addresses principlism's vague ordering of principles across situations. In addition, the framework emphasizes multi-party participation: deliberation platforms can be established to gather the views of stakeholders and to negotiate the formulation and revision of ethical norms.
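A level-specific ordering of principles can be represented very simply, as in the sketch below. The four levels follow the text, but the particular orderings in `LEVEL_PRIORITIES` are hypothetical placeholders rather than rankings endorsed by this article; in practice they would come out of the multi-party deliberation described above.

```python
# Hypothetical priority orderings per system level (earlier = higher priority).
LEVEL_PRIORITIES = {
    "individual": ["autonomy", "privacy", "fairness", "welfare"],
    "group": ["fairness", "participation", "autonomy", "welfare"],
    "society": ["welfare", "fairness", "accountability", "autonomy"],
    "technology": ["safety", "transparency", "accountability", "fairness"],
}

def resolve_conflict(level, conflicting):
    """Pick the principle that takes precedence at the given level.

    level:       one of the keys of LEVEL_PRIORITIES.
    conflicting: iterable of principle names that are in tension.
    """
    candidates = set(conflicting)
    # Walk the level's ranking and return the first principle in conflict.
    for principle in LEVEL_PRIORITIES[level]:
        if principle in candidates:
            return principle
    raise ValueError(f"no ranked principle among {sorted(candidates)} at level {level!r}")

# The same conflict is resolved differently at different levels.
individual_choice = resolve_conflict("individual", ["autonomy", "welfare"])
society_choice = resolve_conflict("society", ["autonomy", "welfare"])
```

Under these assumed orderings, the conflict between autonomy and welfare resolves to autonomy at the individual level and to welfare at the societal level, which is the kind of level-dependent priority the paragraph describes.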

Third, integrate behavioral economics and socio-technical systems theory through systemic risk assessment. Complex adaptive systems theory can help principlism build a risk assessment mechanism suited to a complex society, one that handles the nonlinear relationships, systemic risks, and ethical uncertainties found in complex systems. For example, the risk perception models of behavioral economics can be upgraded and combined with complex adaptive systems theory to develop ethical decision-making frameworks capable of preventing and controlling systemic risk in high-risk fields such as finance and medicine.

Fourth, respond to conflicts of cultural diversity through multicultural adjustment and co-evolution. The concept of co-evolution in complex adaptive systems theory can explain how ethical norms from different cultural contexts reach a dynamic balance through interaction and collaboration. Using complex adaptive systems theory, principlism can design a moral framework with co-evolutionary characteristics, in which ethical norms from different cultural backgrounds adapt to one another and eventually form a relatively stable ethical consensus. In global artificial intelligence ethics governance, complex adaptive systems theory can help form a more flexible mechanism of ethical coordination among different cultures, legal systems, and moral values, avoiding the rigid decision-making that value conflicts produce.

Fifth, respond to practical needs through multi-level theoretical integration. An artificial intelligence ethics framework based on complex adaptive systems has the advantage of integrating micro, meso, and macro perspectives. It thereby meets the need in artificial intelligence ethics practice for a theory that runs continuously from principles through rules to application. At the micro level (the individual), evolutionary psychology and cognitive neuroscience can help explain individuals' moral decision-making processes and behavioral tendencies. At the meso level (organizations and technology), behavioral economics and socio-technical systems theory can be used to analyze organizational behavior, technological development, and social impact. At the macro level (the social system), complex adaptive systems theory and existing research in computational social science can guide the overall design and dynamic evolution of the ethics system.

Sixth, improve adaptability through a multi-element theoretical mechanism. This mechanism includes agents, interaction mechanisms, and adaptation and learning mechanisms, as well as incentive mechanisms for lawful behavior, constraint mechanisms for violations, mechanisms for identifying responsible parties, and adaptive legislative mechanisms. Agents include individuals (developers, users), organizations (enterprises, governments) and technical entities (artificial intelligence systems). The interaction mechanism refers mainly to agents interacting through social, technological, legal and economic means to form a complex network. The adaptation and learning mechanism refers mainly to agents adjusting their behavior in response to feedback and environmental change, with the ethical system itself evolving as a result.
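The elements listed above (agents, an interaction mechanism, an adaptation and learning mechanism, and a norm that itself evolves) can be illustrated with a toy agent-based simulation. The following is only a minimal sketch under stated assumptions, not a model proposed in this article: the `Agent` class, the `compliance` score, the `norm` threshold and every numeric parameter are hypothetical choices made for illustration.

```python
import random
from dataclasses import dataclass


@dataclass
class Agent:
    kind: str          # "individual", "organization" or "technical" (hypothetical labels)
    compliance: float  # assumed propensity in [0, 1] to act within the current norm


def clamp(x: float) -> float:
    """Keep a value inside the interval [0, 1]."""
    return max(0.0, min(1.0, x))


def step(agents, norm, rng, peer_pull=0.2, reward=0.05, penalty=0.10, norm_rate=0.1):
    """Run one round and return (mean compliance, updated norm)."""
    # Interaction mechanism: each agent moves part of the way toward a random peer.
    for a in agents:
        peer = rng.choice(agents)
        a.compliance = clamp(a.compliance + peer_pull * (peer.compliance - a.compliance))
    # Adaptation and learning: feedback nudges behavior upward; agents below the
    # norm receive the stronger corrective nudge (an assumed constraint mechanism).
    for a in agents:
        nudge = reward if a.compliance >= norm else penalty
        a.compliance = clamp(a.compliance + nudge)
    # Adaptive rule-making: the norm drifts toward observed average behavior.
    mean = sum(a.compliance for a in agents) / len(agents)
    norm = clamp(norm + norm_rate * (mean - norm))
    return mean, norm


def simulate(rounds=40, n_agents=30, seed=0):
    """Simulate the toy system and return (agents, final norm, history)."""
    rng = random.Random(seed)  # seeded so that runs are reproducible
    kinds = ["individual", "organization", "technical"]
    agents = [Agent(kinds[i % 3], rng.random()) for i in range(n_agents)]
    norm = 0.5  # assumed starting threshold
    history = []
    for _ in range(rounds):
        mean, norm = step(agents, norm, rng)
        history.append((mean, norm))
    return agents, norm, history


if __name__ == "__main__":
    _, final_norm, history = simulate()
    print(f"final mean compliance={history[-1][0]:.2f}, final norm={final_norm:.2f}")
```

The sketch is deliberately simple: because every form of feedback here pushes behavior in the same direction, the population drifts toward full compliance. A more realistic model would need competing incentives, heterogeneous agent types and a network structure, all of which are outside the scope of this illustration.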

In short, integrating complex adaptive systems theory deepens and coordinates the reform proposals drawn from evolutionary psychology, sociotechnical systems theory and behavioral economics. Complex adaptive systems theory can address the problems that principlism leaves unresolved in handling dynamic ethical conflicts, systemic risks and multicultural conflicts, and can enhance its adaptability and flexibility in complex social and technological environments.

Conclusion: The Place of Luhmann's Systems-Theoretical Jurisprudence in Artificial Intelligence Ethics Research

At this point, one question needs to be answered: why not use Luhmann's systems-theoretical jurisprudence, which also takes an interdisciplinary and complex evolutionary perspective and has a mature research framework in legal theory, rather than complex adaptive systems theory, which law and ethics rarely borrow? There are three main reasons. First, Luhmann's theory aims to explain the structure and function of social systems and provides a macro-level tool for sociological analysis, whereas principlism aims to guide moral decision-making at the individual level, focusing on specific ethical dilemmas and practical applications. Because the two operate at different levels, Luhmann's theory offers no direct guidance for individual moral decision-making and is not suited to reforming principlism, which has moral principles at its core. Second, Luhmann's theory treats law and morality as two independent systems, with the legal system operating independently of the moral system. Principlism treats moral principles as the core of decision-making and holds that morality directly guides law and practice. This difference in how the relationship between morality and law is understood makes it difficult to use Luhmann's theory directly to strengthen principlism's guidance of moral decision-making. Third, Luhmann's systems theory is descriptive, not normative. It explains how a system works but provides no ethical guidance on how one ought to act. Principlism requires specific moral norms to guide practice, so Luhmann's theory cannot meet its needs in this regard.

The problems pointed out above do not mean that Luhmann's systems-theoretical jurisprudence has nothing to contribute to the construction of an artificial intelligence ethical system. In applying complex adaptive systems theory to artificial intelligence ethics, one can borrow from existing work that uses Luhmann's systems theory to discuss basic rights, morality and procedure in a systemic way. That work addresses, for example, how to bring the system level into moral judgment, how to deal with complexity and uncertainty, and how to take account of multiple levels and multiple factors. It also addresses how moral principles operate in complex social systems and how they communicate and coordinate across different systems.

The theoretical debates surrounding principlism in applied ethics, and the problems they are concerned with, are in a sense a parallel universe to jurisprudence. For example, the mechanism for realizing basic rights in a constitution closely resembles the mechanism for realizing principles. The balancing of rights, the principle of proportionality, analogical reasoning, consequence-oriented reasoning and similar methods in legal hermeneutics are all specific technical solutions for realizing basic rights or basic principles within the legal system. To cope with the complexity of the external environment, the law's internal norms must keep evolving and generate internal complexity that offsets external complexity. Basic rights in the constitutional sense and the four core principles of principlism are nothing more than detection and reflection devices that normative systems form internally as they adapt to changes in the external environment. The results of legal philosophy on these questions can therefore be carried over and applied in applied ethics, especially in the ethics of science and technology. Unlike Dworkin, Alexy and others, who regard principles as values, Luhmann holds that principles and rules are merely premises of binding legal decisions that differ in their degree of abstraction, which greatly broadens the scope of application of legal principles. Systems-theoretical results of this kind can also provide a direct and profound reference for applying complex adaptive systems theory to the construction of an artificial intelligence ethical system.

[Author: Li Xueyao, professor at the Kaiyuan School of Law, Shanghai Jiaotong University, and director of the Law and Cognitive Intelligence Laboratory, Shanghai]

The original text of this article was published in the 6th issue of "Zhejiang Academy of Sciences" in 2024.

Notes are omitted; please refer to the original text if necessary.

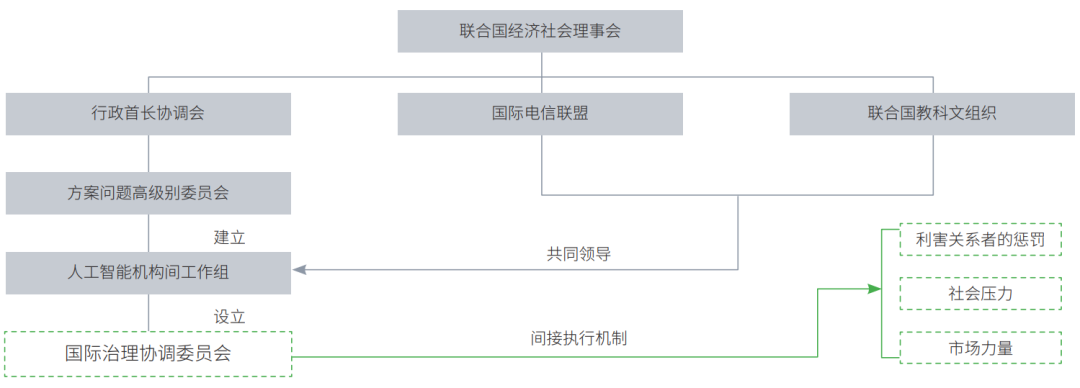

The sea of stars never stops

As the core support organization of scientific and technological ethics research of the China Academy of Information and Communications Technology, the Science and Technology Ethics Research Center of the China Academy of Information and Communications Technology adheres to the governance concept of "ethics first, intelligence for good", and establishes a full-dimensional governance research paradigm covering theoretical research, standard construction, technology research and development, and international cooperation. By building a trinity governance framework of "institution-technology-ecology", a unique scientific and technological ethical governance path with Chinese characteristics has been formed in key areas such as artificial intelligence. The center takes the lead in developing industry standards, establishing a multi-level standard system framework, and promotes the transformation and implementation of ethical principles into technical indicators and engineering standards. Establish an agile governance mechanism that collaborates between government, industry, academia and research, and form a dynamic balance between innovation and governance through regular case collection and rule iteration. Build an open ethical governance platform, jointly carry out generative artificial intelligence ethical risks research with international organizations, establish a science and technology ethics dialogue mechanism based on multiple platforms, continuously export China's governance wisdom, and contribute Chinese solutions with practical value to global science and technology ethics governance.

Contact:

Teacher Lang