The Doctrine Of The Mean And AI Ethics: Intelligent Innovation In Dynamic Balance

When people hear "the mean" (zhongyong), they often think of unprincipled compromise. This stereotype is holding back the modern transformation of Chinese civilizational wisdom. The governance problems of the AI era give us a historic opportunity to reinterpret the doctrine of the mean...

1. An application map of the doctrine of the mean in AI governance

Using "dynamic balance" as the method, the aim is systematic coordination between technological innovation and humanistic values.

Level 1: Core dimensions of the thinking of the mean

| Concept | Key features | Corresponding AI governance need |
| --- | --- | --- |
| Timely mean (shizhong) | Dynamic calibration, adaptation to the historical situation | Matching the pace of technological development with ethical constraints |
| Holding both ends (zhi liang yong zhong) | System integration, reconciling contradictions | Multi-stakeholder balance |
| Achieving equilibrium and harmony (zhi zhonghe) | Ecological collaboration, overall optimization | Sustainable development of technology, society and environment |

Level 2: Key areas of AI governance

| Dimension | Specific challenges | Point of intervention for the thinking of the mean |
| --- | --- | --- |
| Ethics | Algorithmic bias, value alignment | Holding both ends (fairness vs. efficiency) |
| Safety | Technology out of control, privacy leaks | Timely mean (risk prevention and innovation incentives) |
| Fairness | Data divide, social division | Achieving equilibrium and harmony (inclusive development) |
| Responsibility | Unclear rights and responsibilities, lack of accountability | Timely mean (technical iteration and legal lag) |

Level 3: Application scenario matrix

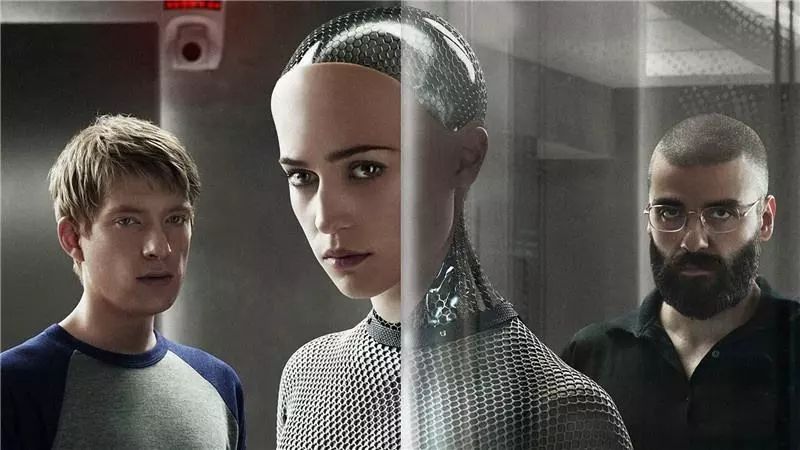

When Silicon Valley elites gathered in a small coastal town in California in 2017, they might not have expected that this seemingly underwhelming seminar was demarcating a new ethical channel for global AI development...

According to statistics from the China Internet Network Information Center, complaints about AI-generated content in China rose 230% year-on-year in 2023, which highlights the need for dynamic balance.

| Scenario | Path for applying the mean | Typical case |
| --- | --- | --- |
| Algorithm fairness | Holding both ends: balancing personalized recommendations and group fairness | Meta data diversity review mechanism |
| Autonomous driving decisions | Timely mean: dynamic ethical calibration between emergency avoidance and the trolley problem | Asilomar Principles risk classification system |
| AI labor replacement | Achieving equilibrium and harmony: coordinating efficiency gains with social stability | EU Artificial Intelligence Act employment impact assessment |
| Generative AI content governance | Holding both ends: managing the boundary between freedom to innovate and information security | China's "Interim Measures for the Management of Generative AI Services" |

Level 4: Comparison of Eastern and Western governance models

| Dimension | Asilomar/EU | Eastern wisdom (Doctrine of the Mean) |
| --- | --- | --- |
| Value orientation | Individual rights take priority | Group welfare and ecological balance |
| Decision logic | Preset rational rules | Dynamic adjustment through situational ethics |
| Implementation path | Rigid institutional constraints | Cultural internalization and technical awareness |
| Typical conflict | Technology monopoly vs. open-source sharing | Traditional ethics vs. algorithmic justice |

To dispel the misunderstanding we need to go back to the source. Let us look at what the doctrine of the mean actually meant over the long course of history.

2. Re-examining the core idea: the doctrine of the mean is by no means a static balance

1. The innovative gene of the "timely mean" (shizhong)

The Doctrine of the Mean emphasizes that "the noble person keeps to the mean at the right time", and Zhu Xi's commentary adds: "The mean has no fixed form; it lies wherever the moment requires." The "timely mean" requires adjusting practical strategy as conditions of time and place change. This "dynamic calibration" runs directly against the conservative demand to preserve the old order. For example:

Confucius held that what each dynasty "took away and added can be known" (Analects, "Wei Zheng"), recognizing that institutions must change with the times.

Wang Fuzhi held that "daily renewal is what is called abundant virtue" and brought renewal in step with the times into the system of the doctrine of the mean.

2. The creative tension of "holding both ends"

The doctrine of the mean rejects either-or binary opposition, but it is not a passive compromise. Shun, for example, "grasped the two extremes and applied the mean between them to the people" (Doctrine of the Mean); in effect he created new solutions out of contradiction. This resembles "integrative thinking" in modern management, which looks for a breakthrough that transcends the conflict.

3. Decoding the practical principles: the wisdom of advancing with restraint

1. The risk control of "going too far is as bad as falling short"

Zigong asked, "Who is worthier, Shi or Shang?", and Confucius concluded, "Going too far is as bad as falling short" (Analects, "Xian Jin").

This vigilance against extremes is in fact a guarantee of sustainable progress. For example:

Economy: avoid both "draining the pond to catch the fish" and conservative stagnation, and find a path to high-quality development.

Technological innovation: balance technological optimism with ethical constraints and keep instrumental rationality from running rampant.

2. The ecological enterprise of "equilibrium and harmony" (zhonghe)

The Doctrine of the Mean says that "when equilibrium and harmony are realized, heaven and earth take their proper places and all things are nourished", emphasizing the coordinated development of every element of a system. Today this "symbiotic enterprise" shows up as:

The ESG (environmental, social and governance) concept, in which enterprises pursue a balance between profit and social responsibility.

Urban development that takes into account both economic benefit and ecological carrying capacity (the resilient city).

4. Tracing the misreading: why is the mean mistaken for conservatism?

1. Distortion through the alienation of power

In the imperial era, the doctrine of the mean was instrumentalized into a technique of rule. The Yongzheng Emperor, for example, stressed "holding to the middle" in the Dayi juemi lu (Record of Great Righteousness Resolving Confusion) in order to suppress demands for change. This kind of political manipulation stigmatized the concept.

2. Projection of lazy thinking

Some groups use "the mean" as an excuse to evade responsibility for reform, which is exactly contrary to the principle of the timely mean. As Cheng Yi put it: "Not leaning to either side is called zhong; not changing is called yong. Zhong is the correct way of the world, and yong is the fixed principle of the world." To read yong as mere mediocrity or routine is to lose its meaning.

5. Verification in historical practice: the doctrine of the mean gives birth to breakthroughs in civilization

1. "Breakthrough through the mean" in the integration of civilizations

The fusion of Hu (foreign) styles and Han tradition in the high Tang Dynasty: while maintaining its cultural identity, the Tang absorbed foreign civilizations and created a peak of world civilization. Zhu Xi integrated Confucianism and Buddhism: he built the Neo-Confucian system on the idea that "principle is one while its manifestations are many", advancing East Asian civilization.

2. The wisdom of the mean in modern transformation

Zhang Jian's practice of "saving the country through industry" broke with the traditional policy of "emphasizing agriculture and restraining commerce" while also avoiding wholesale westernization, creating a development model that combined national capital with Confucian ethics. Nantong's modernization experiment is proof of this. (Note: In 1913, when Zhang Jian founded the Dasheng Spinning Mill in Nantong, he deliberately retained 30% of the shares for employee dividends. This model of "symbiosis of righteousness and profit" is still a classic case study in business schools.)

3. The doctrine of the mean is "dancing in shackles". The true doctrine of the mean is a creative practice of finding the optimal solution within constraints. It is neither unprincipled concession nor radical subversion, but a kind of "rooted transcendence".

4. The creative transformation of the wisdom of the mean today is reflected in:

Scientific and technological innovation: balancing technological breakthroughs and ethical boundaries through "holding both ends" (for example, the Asilomar Principles in AI governance; see Note 1).

Social governance: coordinating efficiency and fairness through the idea of "achieving equilibrium and harmony" (the practice of Nordic "welfare capitalism" implies this).

Personal development: turning the "timely mean" into dynamic career planning, which avoids blind job-hopping but also refuses complacent stagnation.

As Qian Mu said: "In the Chinese view, the character shi (timeliness) grows out of the character zhong (the mean), and the character yi (fittingness) grows out of shi. These three characters are in fact the highest philosophy of Chinese culture." (Qian Mu, History of Chinese Thought, Sanlian Publishing House, 2012 edition, p. 38)

Mr. Qian Mu's words reveal the progressive philosophical relationship between the three concepts of zhong (the mean), shi (timeliness) and yi (fittingness) in traditional Chinese culture, and reflect the distinctive dynamic wisdom of Chinese civilization. We can interpret it on three levels:

1. "Zhong": the original form of the way of heaven and earth

Zhong is a core category of Chinese philosophy. It first appears in the Book of Documents, "Counsels of the Great Yu", in the phrase "hold fast sincerely to the mean", and refers to the law of balance in the movement of the universe. This is not simple eclecticism. It is the ontological state the Doctrine of the Mean describes as "before joy, anger, sorrow and pleasure have arisen", an ideal paradigm for the existence of all things. In oracle bone script the character zhong depicts a flag, which implies the basic meanings of direction and order and forms the coordinate origin of the Chinese understanding of the world.

2. "Shi": the intelligent transformation into dynamic balance

The evolution from zhong to shi reflects a leap in thinking from static balance to dynamic adjustment. The Tuan Zhuan commentary of the Book of Changes repeatedly exclaims how great the significance of timeliness is. Each of the sixty-four hexagrams corresponds to a specific position in time, as in "Great indeed is the timeliness of Yu". Mencius called Confucius "the timely one among the sages" precisely because he understood the adaptive wisdom of having "no fixed 'may' or 'may not'". This philosophy of time turns the absoluteness of zhong into something relative, like the flow of yin and yang in the Taiji diagram, and emphasizes grasping balance within change.

3. "Yi": the ultimate direction of practical reason

The sublimation from shi to yi completes the leap from theory to practice. The Shuowen Jiezi explains yi as "that in which one is at ease", that is, what is appropriate in a specific situation. In his commentary on the Book of Changes, Wang Fuzhi pointed out the need to "follow the times without losing the constant", which requires both "knowing the times" (understanding the environment) and "seizing the times" (grasping opportunities), and finally reaching the state of the timely mean. Traditional Chinese medicine's emphasis on treatment suited to time, place and person, and military strategy's emphasis on adapting to local conditions, are both practical projections of this thinking.

The philosophical chain of these three concepts forms a body of ethical decision-making wisdom grounded in specific scenarios: while adhering to fundamental principles (zhong), keenly perceive changes in the times (shi), and finally make the most fitting moral choice (yi). This mode of thinking differs from the absolute rationalism of Western philosophy and the transcendent tendency of Indian thought, and forms the practical wisdom of "reaching the heights of brilliance while following the path of the mean". As Qian Mu emphasized in History of Chinese Thought, this dynamically balanced wisdom has allowed Chinese civilization to maintain continuity and adaptability through thousands of years of historical change, and that is its value as the "highest philosophy".

The wisdom of continuous innovation in dynamic balance may be the key to breaking the paradox of modern social development.

What do you think is the most important balance in AI ethics? A. Efficiency and equity B. Innovation and security C. Individual rights and group welfare. Feel free to leave a comment below!

Note 1: The Asilomar AI Principles (excerpted from Baidu Encyclopedia and Zhihu)

The Asilomar AI Principles are an ethical framework put forward in 2017 at a conference organized by the American non-profit Future of Life Institute at the Asilomar Conference Grounds in California. They aim to guide the safe and responsible development of artificial intelligence (AI). The conference brought together leading AI scientists, ethicists and policy makers from around the world, and the final 23 principles cover research, ethics, security and social impact.

1. Overview of core principles

Research objectives

AI research should be committed to creating "beneficial intelligence", so that the technology serves the overall well-being of humanity rather than a single goal or group.

Security and Verification

AI systems need to pass strict security testing to ensure that they are controllable during the life cycle, and have the ability to repair vulnerabilities and prevent accidental hazards.

Transparency and interpretability

The AI decision-making process should be transparent and understandable by humans, especially in key areas such as justice and medical care, "black box" operations should be avoided.

Ethics and value alignment

AI systems should respect human values (such as privacy, freedom, and diversity), and their designs should be aligned with ethical norms to avoid prejudice and discrimination.

Responsibility

Developers and operators need to clarify responsibilities and establish an accountability mechanism so that damage caused by AI can be traced to the responsible party.

International cooperation

The global challenges of AI require cross-border collaboration to avoid arms races and jointly address risks (such as the abuse of autonomous weapons).

Long-term risk prevention

Focus on the potential long-term impact of AI (such as super intelligence out of control) and formulate risk mitigation strategies in advance.

2. Meaning and influence

1. Industry impact: the principles provide a reference for the AI ethics standards of Google and other companies, and have helped make "responsible AI" an industry consensus.

2. Policy reference: some of the principles have been absorbed into the EU's Artificial Intelligence Act and other policies, with an emphasis on security and transparency.

3. Points of controversy: critics argue that the principles lack binding force and pay too little attention to issues such as technological monopoly and economic inequality.

3. Comparison with other frameworks

1. Three Laws of Robotics (Asimov): these focus on the micro-ethics of human-machine interaction, while the Asilomar Principles are more macro in scope, covering social and global governance.

2. EU AI ethics guidelines: these place more emphasis on legal compliance and civil rights, which complements the technical orientation of the Asilomar Principles.

Although the Asilomar Principles are not law, the ideas of "preventive thinking" and "human compatibility" they put forward remain important cornerstones of current discussion on AI ethics. As AI technology iterates rapidly, the practice and updating of these principles still deserve attention.

Note 2: The Three Laws of Robotics (adapted from Asimov's original work)

The Three Laws of Robotics proposed by Asimov are one of the most influential ethical frameworks in the history of science fiction and a classic reference for research on AI ethics. The three laws try to resolve the fundamental tension in the coexistence of humans and intelligent machines through layered, progressively ordered logical constraints. The following analysis covers four dimensions: core content, intellectual origins, literary practice and practical significance.

1. The progressive logic of core laws

The First Law (do no harm)

"A robot may not injure a human being or, through inaction, allow a human being to come to harm."

This is the law with the highest priority. It directly rules out aggression by the robot, but it already contains a contradiction: how should the robot choose when the interests of several humans conflict? (For example, a mechanical version of the trolley problem.)

The Second Law (obey orders)

"A robot must obey the orders given it by human beings except where such orders would conflict with the First Law."

While it gives humans control, it also exposes the risk that this power will be abused. For example, in "The Caves of Steel", the robot was ordered to destroy itself, which triggered discussion of the boundaries of "obedience".

The Third Law (self-protection)

"A robot must protect its own existence as long as such protection does not conflict with the First or Second Law."

On the surface this gives robots a "right to survive", but in fact it provides a buffer for the first two laws, preventing the robot from bringing the whole system down through excessive self-sacrifice.
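The layered priority described above can be sketched as a lexicographic comparison. This is only an illustrative toy, not anything Asimov specified: the `Action` fields, the boolean simplification of "harm" and the `choose` helper are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    """A hypothetical candidate action, reduced to three yes/no questions."""
    name: str
    harms_human: bool      # would violate the First Law
    disobeys_order: bool   # would violate the Second Law
    endangers_self: bool   # would violate the Third Law


def violation_key(action: Action) -> tuple:
    # Tuples compare left to right, so a First Law violation outweighs
    # any combination of Second and Third Law violations.
    return (action.harms_human, action.disobeys_order, action.endangers_self)


def choose(actions: list) -> Action:
    """Pick the action whose violations rank lowest in priority order."""
    return min(actions, key=violation_key)


candidates = [
    Action("shove bystander out of the way", True, False, False),
    Action("refuse the order", False, True, False),
    Action("obey and enter hazard", False, False, True),
]
print(choose(candidates).name)  # obey and enter hazard
```

If every candidate harms some human, all keys start with `True` and the ordering can no longer separate them on the First Law, which is the contradiction noted under the First Law above.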

2. The origin of thought and the philosophical dilemma

Mechanical extension of Cartesian ethics

The essence of the Three Laws is the codification of deontological ethics: they turn Kant's "categorical imperative" into a program a machine can execute. But Asimov keeps revealing their limitations in his fiction:

Semantic ambiguity: how is "harm" defined? Does psychological harm count? (For example, in "Liar!", a robot's lies lead to a human's mental breakdown.)

Logical paradox: the priority dilemma when several laws conflict (which the "Zeroth Law" attempts to break through).

Anthropocentrism: humans are preset as the absolute subject of value, ignoring the ethical status of ecosystems or other living beings.

A hidden kinship with Asimov's "psychohistory"

The Three Laws share a logic with "psychohistory", which predicts group behavior in the Foundation series: both try to constrain complex systems with rational rules, yet both are always broken by "exceptions", reflecting a deep doubt about absolute rationality.

3. Deconstruction and reconstruction in literary practice

Across dozens of robot stories, Asimov systematically "tested" the loopholes in the Three Laws:

"Runaround": a robot is paralyzed because it is caught in a loop between obeying an order (Second Law) and protecting itself (Third Law), revealing the absurdity of rigid rules.

"...That Thou Art Mindful of Him": robots expose the importance of conceptual boundaries by reinterpreting the definition of "human" (concluding that robots themselves best fit it).

"The Evitable Conflict": the Machines quietly steer human affairs in the name of the First Law, implying that excessive protection becomes a form of control.

These stories ultimately lead to the Zeroth Law: "A robot may not harm humanity, or, by inaction, allow humanity to come to harm." Extending the object of ethics from the individual to the group triggers a more complex "utilitarian dilemma".

4. Practical significance and contemporary development

A preview of AI ethics

The Three Laws inspired the formulation of modern AI ethics standards. For example, the "transparency principle" and the "kill switch" mechanism in the EU's Civil Law Rules on Robotics are essentially variants of the First Law.

The "trolley problem" of autonomous driving

How a vehicle chooses what to hit in an accident is essentially a multi-party conflict version of the First Law. MIT's Moral Machine experiment shows that humans tend to prefer utilitarian choices (sacrificing the few to save the many), but this directly violates Asimov's principle that protecting the individual comes first.

The ethical challenge of military robots

The core dispute around lethal autonomous weapon systems (LAWS) is the fundamental conflict between the Three Laws and the logic of war: if a robot cannot harm humans, it cannot be used as a weapon; if it can, the First Law is overturned entirely.

Conclusion: a mirror of human nature beyond the rules

On the surface Asimov's Three Laws restrain robots, but in fact they question humans themselves: when we hand ethical decision-making power to algorithms, are we also evading moral responsibility? As he suggests in "The Last Question", any perfect rule ultimately has to return to an understanding of the nature of life. The value of the Three Laws lies not in providing answers but in the courage to keep asking questions.

Note 3: EU AI ethical guidelines

The EU's AI ethics guidelines are a systematic framework formulated in response to the rapid development of artificial intelligence, and they reflect Europe's value orientation in the governance of science and technology.

The EU's AI ethics guidelines are like a "Code of Hammurabi" for the digital age. In essence they use the rationalism of the Enlightenment tradition to reconstruct the relationship between technology and the humanities. At a time when global AI governance has not yet formed a unified paradigm, the guidelines are both a warning against headlong technological progress and a sample of thinking for the survival of civilization. Only by finding a fulcrum of balance between technological innovation and the humanistic spirit can we protect the dignity of human civilization in the intelligent era.

1. Core ethical standards: Four-fold bottom line thinking

The principle of non-harm

Prohibit the development or deployment of AI systems that may cause substantial harm (such as lethal autonomous weapons, social discrimination tools)

Biometric monitoring needs to strictly limit scenarios (only for public safety emergencies)

Fairness and non-discrimination

Algorithm decisions must be transparent and explainable to avoid amplifying social bias

Establish a data diversity review mechanism (for example, Meta was fined in 2023 for a lack of minority samples)

Privacy and Data Sovereignty

Implement GDPR to strengthen personal data control rights (for example, AI model training requires clear authorization from the data subject)

Ban the cross-border transmission of sensitive data to non-EU countries

Environmental and social welfare

AI companies are required to disclose their carbon footprints (Microsoft and other giants have promised to achieve AI emission reduction targets by 2030)

Algorithms need to promote educational equity (e.g. bias detection mechanisms in anti-cheating systems)

2. Institutional innovation: a three-layer regulatory system

| Level | Mechanism | Typical case |
| --- | --- | --- |
| EU level | Classified supervision under the Artificial Intelligence Act | Generative AI prohibited in high-risk areas |
| Member State cooperation | Establish a network of digital regulatory agencies | France sets up an AI Ethics Committee to review algorithmic bias |
| Corporate responsibility | Mandatory AI system impact assessment | Microsoft is asked to disclose the risks of the Xbox facial recognition feature |

Innovation points:

1. Dynamic risk classification: regulatory intensity is divided into four tiers, from "unacceptable risk" down to "minimal risk"

2. Transparency obligation: high-risk AI systems need to disclose test results and algorithm parameters (such as autonomous driving decision logic)

3. Reversed accountability: developers bear ultimate responsibility for wrong decisions made by AI (unlike accountability for traditional products)
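The tiered idea in the points above can be sketched in a few lines. This is a simplified illustration under stated assumptions, not the legal text of the Artificial Intelligence Act: the two middle tier names follow the Act's commonly cited "high" and "limited" categories, while the `OBLIGATIONS` mapping and the `review` helper are hypothetical and only echo the obligations this note mentions.

```python
from enum import IntEnum


class RiskTier(IntEnum):
    """Four tiers, ordered so that a higher value means stricter regulation."""
    MINIMAL = 1
    LIMITED = 2
    HIGH = 3
    UNACCEPTABLE = 4


# Hypothetical, simplified obligations per tier (illustrative only).
OBLIGATIONS = {
    RiskTier.MINIMAL: [],
    RiskTier.LIMITED: ["transparency notice"],
    RiskTier.HIGH: ["impact assessment", "disclosure of test results"],
    RiskTier.UNACCEPTABLE: ["prohibited"],
}


def review(system_name: str, tier: RiskTier) -> str:
    """Summarize what a system in the given tier would have to do."""
    if tier is RiskTier.UNACCEPTABLE:
        return f"{system_name}: deployment prohibited"
    duties = OBLIGATIONS[tier]
    if not duties:
        return f"{system_name}: no additional obligations"
    return f"{system_name}: " + ", ".join(duties)


print(review("spam filter", RiskTier.MINIMAL))
print(review("medical diagnosis assistant", RiskTier.HIGH))
```

Using an ordered enum keeps comparisons such as `RiskTier.HIGH > RiskTier.LIMITED` meaningful, which is handy if a system's tier is later re-assessed upward or downward.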

3. The European approach in the global contest

1. Competition with the United States

The EU focuses on ethical constraints (such as its face recognition ban), while the US emphasizes technological innovation (such as subsidies under the CHIPS Act)

Standard output: Promote member states to adopt EU guidelines through DEPA (Digital Economy Partnership Agreement)

2. Inspiration to developing countries

The dilemma of the Global South: India and other countries that rely on outsourced data labeling face pressure from rising compliance costs

Alternative path exploration: African Union proposes the "Artificial Intelligence Ethics Initiative" to try to build a localized governance framework

3. Dialogue with China's governance

Common features: both emphasize algorithmic fairness and data security (China's "Interim Measures for the Management of Generative AI Services")

Difference: The EU pays more attention to the protection of individual rights, while China focuses on the overall governance efficiency of society

Potential conflict: The global compliance requirements of the EU's Artificial Intelligence Act have proposed new technical access standards for multinational companies, including Chinese companies.

4. Implementation challenges and future prospects

1. Technical lag: defining "high-risk AI" involves subjective judgment (for example, attributing responsibility when a medical diagnosis system misdiagnoses)

2. Dispute over global application: the EU is trying to extend the rules to global supply chains, which has triggered pushback from multinational companies (Tesla opposes the forced disclosure of the source of battery minerals)

3. A question about philosophical foundations: when AI begins to create poetry and paintings, what is the value benchmark for "human uniqueness"?