As "AI Civil Servants" Take Up Their Posts, Artificial Intelligence Ethics Must Be Handled Well

Let's discuss

After the model's surge in popularity, government affairs systems in many places were quickly connected to it. On February 8, the Government Services and Data Management Bureau of Shenzhen's Longgang District deployed the full-size -R1 model on the government external network, becoming the first government department in Guangdong to deploy the model in a government IT application innovation (Xinchuang) environment. On February 16, Shenzhen officially began offering model application services on its government cloud to all districts and departments in the city, enabling an integrated upgrade of AI-powered government applications.

Artificial intelligence is already widely used in government affairs, covering office work, urban governance, rapid handling of public requests, and more. The full integration of the large model into the Shenzhen municipal government affairs network marks a new stage in making government services intelligent. But new technologies often bring new problems, and AI is no exception: large models with a degree of intelligence will raise new ethical problems in government systems.

Earlier IT systems provided auxiliary, procedural, and factual support; AI, by contrast, assists with decisions and judgments. In the past, if a web page contained an error, it was the person who entered the content who had made the mistake, not the computer. An OA (office automation) workflow could go wrong, but the decision on whether to approve something was always made by a person.

Today's large models still hallucinate. Hallucination means generating information that seems reasonable but is actually inaccurate or false. The problem is especially pronounced in news, law, and medicine, where it can lead to the spread of false news, fabricated citations of legal documents, and incorrect medical diagnoses. AI makes mistakes, but it cannot be held responsible for them. Moreover, because AI is a black box, a specific error cannot be fixed on its own: if an AI makes an error once, it can make the same error again, and there is no way to predict when.

Someone once joked that AI can never replace auditors, because no matter how smart AI is, it cannot take the blame. This points to a real problem in society: much work is not only about getting the task done but also about responsibility, constraints, rewards, and punishments, and AI cannot bear any of these.

Government systems touch every aspect of people's livelihoods and city operations, from everyday conveniences such as crossing the road, to the security of citizens' information, to public safety across the city. Once AI is introduced into these systems, who bears responsibility becomes a new question.

Once AI is connected, if something goes wrong, the government department may say: "I don't know anything about it. I used the AI provided by the IT department according to procedure, so the IT department should be responsible." The IT department will reply that AI is only an aid and the government department still has to do the final review. The government department may then ask in return: "Then what do I need AI for? If I still have to review everything in the end, won't my workload go up rather than down?"

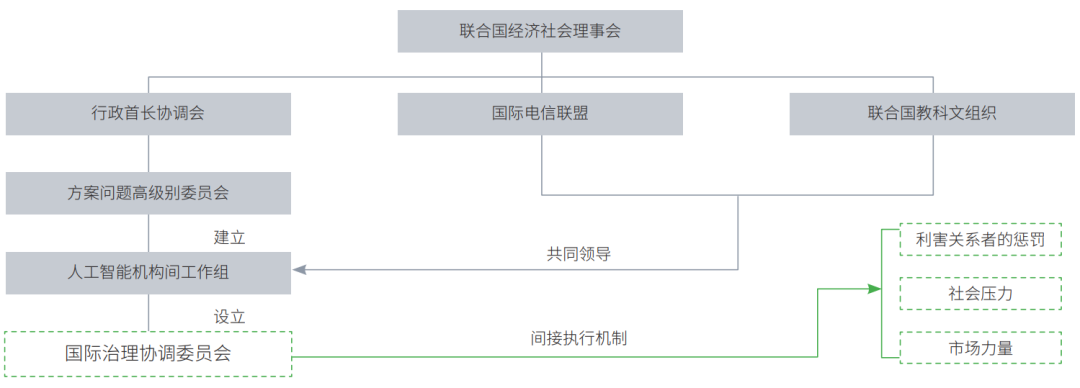

In effect, the IT department would be using AI not only to do part of the government department's work but also to take on part of its responsibilities. But can a back-end support department bear the responsibilities of the government department it serves, and should it? Resolving these questions and dividing responsibility will require new theories and new ethics.

Notably, Futian District has made a new attempt on this front. It was the first to create a "Basic Law" for government-assistant intelligent robots, which takes the full-process management of such robots purchased and used by government bodies as its main thread, builds an ethical framework, and sets out technical standards, scope of application, safety management, and regulatory requirements. This defines the boundaries within which AI can operate legally, compliantly, and ethically in government, guards against abuse of AI and unclear allocation of responsibility, and marks a new attempt at humans and AI working together. In some respects, this matters more than simply putting AI to work.

It should also be noted that introducing more AI means collecting more data and wielding stronger data analysis capabilities. Access to government data must therefore be strictly managed to prevent privacy leaks during model training, especially in multimodal analysis scenarios involving text, images, and video.

Liu Yuanju