Beyond the "Three Laws of Robotics": Artificial Intelligence Calls for New Ethics

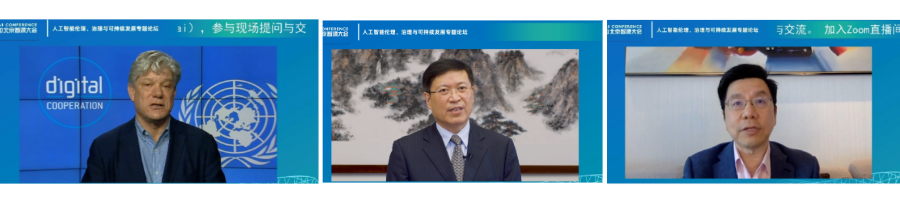

Xinhua News Agency, Beijing, March 18

Xinhua News Agency reporter Yang Jun

The ethical principles of artificial intelligence have recently attracted wide attention. At the "Global Conference on Promoting Humanized Artificial Intelligence," held in early March, UNESCO Director-General Audrey Azoulay said that there is currently no international ethical and normative framework that applies to all AI development and application.

The industry has long recognized the limitations of the famous "Three Laws of Robotics," devised in the last century by science fiction writer Isaac Asimov to keep robots from getting out of control. "The Three Laws of Robotics made great contributions in their time, but today it is far from enough to stop at them," an expert recently told reporters.

New Situations Call for New Ethical Principles

Scientists once hoped to ensure, in the simplest possible way, that artificial intelligence, as embodied by robots, would pose no threat to humans. The "Three Laws of Robotics" stipulate that a robot may not harm a human; it must obey humans; and it must protect its own existence. A "Zeroth Law" was added later: a robot may not harm humanity as a whole or, through inaction, allow humanity as a whole to come to harm.

"Robots may not harm people, but in what ways could robots harm people? How severe would the harm be? What forms might it take? When would it happen? How can it be avoided? Today these questions need to be refined and addressed with more advanced, more specific principles; we cannot remain at the level of understanding of seventy or eighty years ago," said Professor Chen Xiaoping, chair of the AI Ethics Committee of the Chinese Association for Artificial Intelligence and director of the Robotics Laboratory at the University of Science and Technology of China.

Oren Etzioni, CEO of the world-renowned Allen Institute for Artificial Intelligence, called for updating the "Three Laws of Robotics" in a speech two years ago. He proposed that AI systems must comply with all laws that apply to their human operators; that AI systems must clearly disclose that they are not human; and that AI systems may not retain or disclose confidential information without explicit permission from the source of that information. These novel proposals sparked heated debate in the industry at the time.

The "Beneficial AI" movement promoted by Max Tegmark, a well-known American scholar of AI ethics and a professor at MIT, holds that new ethical principles must ensure that the goals of future AI remain aligned with those of humans. The movement has won the support of many leading scientists around the world, including Stephen Hawking, as well as well-known IT companies.

"Driven by a multiplier effect, artificial intelligence will grow ever more powerful, and the room humans have for trial and error will keep shrinking," said Tegmark.

Putting People First: A Global Search for New Ethics

Research on new ethics for artificial intelligence is becoming increasingly active worldwide. Many people's misgivings about AI stem largely from the unknowns created by its rapid development, and "protecting humans" has become the foremost concern.

"We must ensure that artificial intelligence develops in a people-oriented direction," Azoulay urged.

Eliezer Yudkowsky, co-founder of the Machine Intelligence Research Institute in the United States, proposed the concept of "friendly artificial intelligence," arguing that friendliness should be built into a machine's intelligent systems from the very beginning of the design.

New ethical principles keep being proposed, but the emphasis on putting people first has remained constant. Baidu founder Robin Li put forward "Four Principles of Artificial Intelligence Ethics" at the 2018 China International Big Data Industry Expo; the first principle is safety and controllability.

The Institute of Electrical and Electronics Engineers (IEEE) stipulates that artificial intelligence should give priority to maximizing the benefits to humans and the natural environment.

Formulating new ethical principles is also making its way onto governments' agendas.

When the Chinese government issued the "New Generation Artificial Intelligence Development Plan" in July 2017, it called for establishing AI laws, regulations, ethical norms and policy systems, and for building capabilities to assess and control AI security. In April 2018, the European Commission's communication "Artificial Intelligence for Europe" proposed considering appropriate ethical and legal frameworks to provide legal certainty for technological innovation. On February 11 this year, US President Trump signed an executive order launching the "American AI Initiative," one of whose five key areas is setting governance standards for artificial intelligence, including standards related to ethics.

With Risks in Mind, Cross-Disciplinary Discussion of New Issues

Chen Xiaoping called on AI academia and industry, together with disciplines across the social sciences such as ethics, philosophy and law, to take part in formulating these principles and to work closely together to avoid ethical and moral risks in the development of artificial intelligence.

He believes that although there is no evidence that AI poses major risks in the short term, problems such as privacy leaks and technology abuse already exist. Ethical principles for driverless vehicles and service robots must also be discussed and formulated as soon as possible.

The IEEE also stipulates that, to resolve questions of fault and avoid public confusion, AI systems must be accountable at the program level and able to show why they operate in a particular way.

"In the long run, we cannot wait until serious problems arise before taking measures to prevent them." Chen Xiaoping believes that AI differs from other technologies in that many AI technologies are autonomous. Autonomous robots, for example, can take physical action in the real world; without appropriate preventive measures, any serious problems that do arise could be all the more harmful.

Chen Xiaoping hopes that more people will take part in research on AI ethics, especially scientists and engineers, because they best understand the root causes of the problems, the ways and details in which dangers could arise, and the relevant technological progress. "If they don't speak up, outsiders can only guess, and it will be difficult to reach correct judgments and appropriate responses."

He also cautioned that, while guarding against risks, we should strike a balance and avoid the side effect of stifling industrial development through excessive restrictions or inappropriate risk-reduction methods.